By Kaidor - Own work based on File:Earth Global Circulation.jpg from Wikipedia Prevailing Winds article.

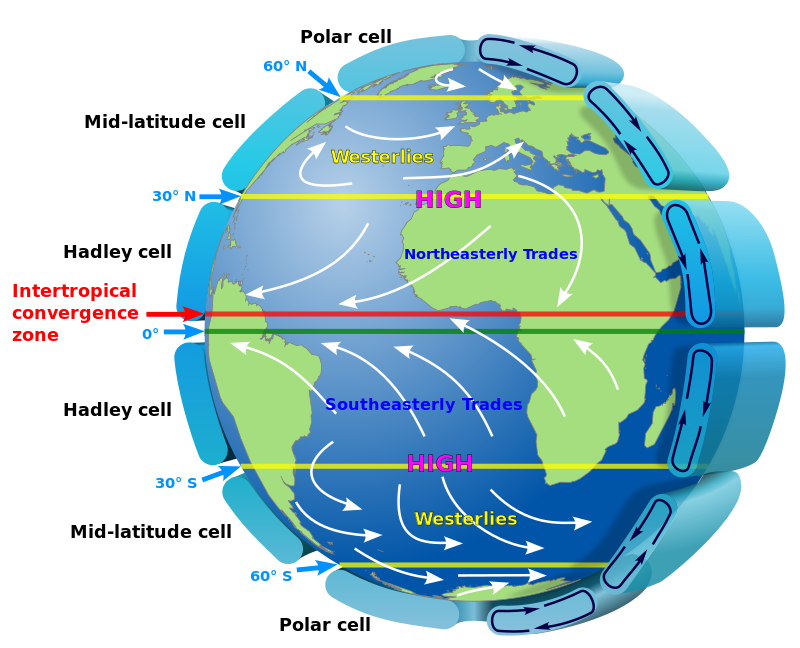

A recent paper, "Deep Old Water explains why the Antarctic hasn't warmed", is really a more specific and updated version of Toggweiler's Shifting Westerlies. When the westerly winds shift, the temperature of the water transported by Ekman currents changes, and the climate impact is larger than many would believe. Sea ice extent can also change the amount of transport, depending on whether or not it covers the colder polar waters that can be transported toward the equator by westerlies or toward the pole by easterlies near the polar circles. The Antarctic is given most of the press because it has a huge temperature convergence where the temperature can change by more than ten degrees in less than ten degrees of latitude. The Antarctic winds are also much more stable than the Arctic winds, which can be changed dramatically by the less stable northern polar vortex.

As I have mentioned before, changes in ocean circulation due to the opening of the Drake Passage and closure of the Panama gap likely resulted in about 3 C degrees of "global" cooling, with the NH warming while the SH cooled. That is a pretty large change in "global" temperature caused by the creation of the Antarctic Circumpolar Current and other changes in the ThermoHaline Circulation (THC). Pretty neat stuff that tends to be forgotten in the "CO2 done it" debate.

The "believers" demand an alternate theory when "your theory is incomplete" should suffice, and for some reason the simplified slab model gang cannot seem to grasp that a planet 70% covered with liquid water can have some pretty interesting circulation features with seriously long time scales. That is really odd considering some of their slab models have known currents running opposite to reality. One seriously kick-butt ocean model is needed if anyone wants to actually model climate, and that appears to be a century or so in our future, if ever.

In addition to the Drake Passage and Panama gap, relatively young volcanic island chains in the Pacific can impact ocean currents along with 150 meters or so of sea level variability on glacial time scales.

In any case, it is nice to see some interesting science on the ocean circulation over longer time scales for a change.

New Computer Fund

Tuesday, May 31, 2016

Monday, May 30, 2016

Increase in surface temperature versus increase in CO2

All models have limits but since the believers are familiar with MODTRAN it is kind of fun to use that to mess with their heads.

Assuming that "average" mean surface temperature is meaningful and equal to 288.2 K, if you hold everything else constant but increase surface temperature to 289.2 K, according to MODTRAN there will be a 3.33 Wm-2 change in OLR at 70 km looking down.

This shouldn't be a shock: 3.33 Wm-2 is the "benchmark" for a 1 C change in surface temperature. Even though the actual surface energy would have increased/decreased by about 5.45 Wm-2, the TOA change would be about 3.33 Wm-2, or about 61% of the change indicated at the surface. While MODTRAN doesn't provide information on changes in latent and convective heat transfer, the actual increase in surface temperature for 5.45 Wm-2 at some uniform radiant surface would be about 0.80 C degrees. Because of latent (about 88 Wm-2) and convective/sensible (about 24 Wm-2) heat transfer, a 390 Wm-2 surface would actually be "effectively" a 502 Wm-2 surface below the planetary boundary layer (PBL), and the more purely radiant surface would be above the PBL at roughly 390 Wm-2. This should not be confused with the Effective Radiant Layer (ERL), which should be emitting roughly 240 Wm-2.
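
The surface numbers above can be roughly reproduced with the Stefan-Boltzmann law. This is only a sketch: it assumes an ideal black body (emissivity of 1), and it assumes the ~0.80 C figure is evaluated at the 502 Wm-2 "effective" surface, which the post does not state outright. The function and variable names are made up for illustration.

```python
SIGMA = 5.670374e-8  # Stefan-Boltzmann constant, W m^-2 K^-4


def blackbody_flux(temp_k):
    """Flux emitted by an ideal black body at temp_k (assumes emissivity = 1)."""
    return SIGMA * temp_k ** 4


def blackbody_temp(flux):
    """Temperature of an ideal black body emitting the given flux."""
    return (flux / SIGMA) ** 0.25


# Change in surface emission for a 288.2 K -> 289.2 K step.
surface_delta = blackbody_flux(289.2) - blackbody_flux(288.2)

# Share of that change seen at the TOA, using the 3.33 Wm-2 MODTRAN value from the text.
toa_fraction = 3.33 / surface_delta

# "Effective" surface below the PBL: radiant + latent + sensible (values from the text).
effective_flux = 390.0 + 88.0 + 24.0

# Assumption: the same flux change applied at the 502 Wm-2 effective surface.
warming = blackbody_temp(effective_flux + surface_delta) - blackbody_temp(effective_flux)

print(f"surface change: {surface_delta:.2f} Wm-2")
print(f"TOA share:      {toa_fraction:.0%}")
print(f"warming at effective surface: {warming:.2f} K")
```

Under these assumptions the surface change comes out near 5.5 Wm-2, the TOA share near 61%, and the warming at the effective surface a little over 0.8 K, in line with the rounded values quoted above.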

Since the energy into the system is constant, the surface temperature should increase somewhat as the "benchmark" change in OLR at the TOA increases/decreases to restore the energy balance, but that assumes that there is no increase in clouds associated with the increase in surface temperature or increase in convection which both should provide a negative feedback.

Since someone asked what increase I would expect for 700 ppmv CO2, this run shows a decrease of 2.36 Wm-2, which is obviously less than the "benchmark" 3.33 Wm-2 in the first run. If you use the iconic 5.35*ln(700/400) you should get a 2.99 Wm-2 decrease, so MODTRAN indicates only about 79% of expectations. Again, there should be some change at the surface required to restore the imbalance, but you have the latent and sensible issue at the real surface and you have a bit of a struggle defining what "surface" is actually emitting the radiation that is interacting with the increased CO2. You can use a number of assumptions, but twice the change at the surface is likely the maximum you could expect.
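
For anyone who wants to check the arithmetic, here is a minimal sketch of the comparison between the simplified logarithmic fit and the MODTRAN value quoted above. The 2.36 Wm-2 figure is copied from the text, not recomputed, and the function name is hypothetical.

```python
import math


def co2_forcing(c_new_ppmv, c_ref_ppmv, coeff=5.35):
    """Simplified fit: coeff * ln(C / C_ref), in Wm-2."""
    return coeff * math.log(c_new_ppmv / c_ref_ppmv)


expected = co2_forcing(700.0, 400.0)  # the "iconic" formula for 400 -> 700 ppmv
modtran_change = 2.36                 # OLR decrease quoted in the text (not recomputed here)
ratio = modtran_change / expected

print(f"formula: {expected:.2f} Wm-2, MODTRAN/formula: {ratio:.0%}")
```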

Since we are most likely concerned with the "real" surface which should agree with the average global mean surface temperature, there will be less increase in temperature because there will be an increase in latent and sensible heat transfer to above the PBL.

Other than the "believers" who like to quote ancient texts (papers older than 5 years), there is a shift in the "consensus" to a lower sensitivity range, but no one can put their finger on why. The simplest explanation is that someone screwed up in their hunt for the absolute worst possible case.

Whatever the case, an increase to 700 ppmv will likely produce less than a degree of warming at the real surface but could produce about 1.5 C degrees of warming, for a range of ~0.75 C to 1.50 C degrees.

Sunday, May 29, 2016

More "Real Climate" science

"When you release a slug of new CO2 into the atmosphere, dissolution in the ocean gets rid of about three quarters of it, more or less, depending on how much is released. The rest has to await neutralization by reaction with CaCO3 or igneous rocks on land and in the ocean [2-6]. These rock reactions also restore the pH of the ocean from the CO2 acid spike. My model indicates that about 7% of carbon released today will still be in the atmosphere in 100,000 years [7]. I calculate a mean lifetime, from the sum of all the processes, of about 30,000 years. That’s a deceptive number, because it is so strongly influenced by the immense longevity of that long tail. If one is forced to simplify reality into a single number for popular discussion, several hundred years is a sensible number to choose, because it tells three-quarters of the story, and the part of the story which applies to our own lifetimes."

"My model told me," this is going to take 100,000 years. 2005 was part of what I consider the peak of climate disaster sales. While there is some debate over how long it will take to get back to "normal" and still some debate on how normal "normal" might be, the catchy "forever and ever" and numbers like 100,000 years stick with people like the Jello jingle.

To get to the 100,000 year number you pick the slowest process you can find and set everything else to "remaining equal". This way you can "scientifically" come up with some outrageous claim for your product that is "plausible" at least as long as you make "all else remain equal".

This method was also useful for coal-fired power plants. You pick emissions for some era, then assume "all else remains equal", and you can get a motivational value to inspire political action. Back in 2005, 10% of the US coal-fired power plants produced 50% of the "harmful" emissions. Business as usual in the coal biz was building more efficient coal plants to meet Clean Air Act standards, and since the dirtiest plants were the oldest plants, they would be replaced by the cleanest new plants. If you replace an old 30% efficient 1950s-60s era plant with a 45% efficient "state of the art" plant, you get 50% more electricity from the same fuel, so CO2 emissions per unit of electricity would be reduced by about a third, along with all of the other real pollutants that need to be scrubbed in accordance with the CAA.
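
The efficiency comparison is simple enough to sketch. This assumes CO2 emitted is proportional to fuel burned and that both plants burn fuel with the same carbon content; neither assumption comes from the post, and the function names are made up for illustration.

```python
def co2_reduction_per_kwh(old_eff, new_eff):
    """Fractional drop in fuel (and CO2) per unit of electricity.

    Assumes CO2 scales with fuel burned and the fuel is the same in both plants.
    """
    return 1.0 - old_eff / new_eff


def extra_output_per_fuel(old_eff, new_eff):
    """Fractional gain in electricity from the same amount of fuel."""
    return new_eff / old_eff - 1.0


reduction = co2_reduction_per_kwh(0.30, 0.45)
gain = extra_output_per_fuel(0.30, 0.45)

print(f"CO2 per kWh falls by {reduction:.0%}")
print(f"electricity per unit of fuel rises by {gain:.0%}")
```

The two numbers answer different questions: emissions per unit of electricity fall by about a third, while output from the same fuel rises by half.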

By 2015, many had caught on to the sales game, so the tactic changed to "fast mitigation". Fast mitigation is just business as usual with a new name and logo. Because of regulations and threats of regulation, the CAA was replaced with the clean house threats, so upgrades were placed on hold while the lawyers made some cash. Between roughly 2005 and 2015, just about every technical innovation that could improve air quality while maintaining reasonable energy supply and cost was placed on hold by the study, litigate, study, regulate, litigate, study process. Peak fossil fuel energy costs hit in the 2011 time frame, just like the "necessarily more expensive" game plan predicted. The progressive hard line, bolstered by fantasy "science" assuming "all else remains equal", had to give way to returning to normal with new packaging, "fast mitigation."

Business as usual has never been about maintaining the status quo, it is about keeping up with the competition and surpassing them when possible. Cleaner, more efficient, better value, more bells and whistles is business as usual. "Fast mitigation" is just a return to business as usual. Now if the "scientific pitchmen" will get out of the way, the future might look bright again.

Friday, May 27, 2016

Once in a while it is fun to go back through some of the realclimate.org posts just for a laugh

Once in a while it is fun to go back through some of the realclimate.org posts just for a laugh. My favorites are the Antarctic warming, not warming, warming posts, when it is pretty obvious looking at the MODTRAN model of forcings that the Antarctic should be doing either nothing or cooling due to radiant physics. It just took them a few years to figure out that any changes in the Antarctic are due to "waves", i.e. changes in ocean and atmospheric circulation. Changes in atmospheric and ocean circulation on various time scales can be just a touch difficult to cipher; you could say they are somewhat chaotic.

Other than the extremely basic physics, there isn't much you can say one way or another and even the basic physics involve fairly large simplifications/assumptions. "These calculations can be condensed into simplified fits to the data, such as the oft-used formula for CO2: RF = 5.35 ln(CO2/CO2_orig) (see Table 6.2 in IPCC TAR for the others). The logarithmic form comes from the fact that some particular lines are already saturated and that the increase in forcing depends on the ‘wings’ (see this post for more details). Forcings for lower concentration gases (such as CFCs) are linear in concentration. The calculations in Myhre et al use representative profiles for different latitudes, but different assumptions about clouds, their properties and the spatial heterogeneity mean that the global mean forcing is uncertain by about 10%. Thus the RF for a doubling of CO2 is likely 3.7±0.4 W/m2 – the same order of magnitude as an increase of solar forcing by 2%."
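
The quoted fit has one property worth seeing in numbers: because it is logarithmic, every doubling adds the same forcing no matter where you start. A minimal sketch follows; the 400, 800 and 1600 ppmv values are arbitrary illustrations, not figures from the RC post.

```python
import math


def co2_forcing(c_ppmv, c_orig_ppmv, coeff=5.35):
    """RF = coeff * ln(CO2 / CO2_orig), the simplified fit quoted above, in W/m2."""
    return coeff * math.log(c_ppmv / c_orig_ppmv)


# Arbitrary example concentrations, chosen only to show two successive doublings.
first_doubling = co2_forcing(800.0, 400.0)
second_doubling = co2_forcing(1600.0, 800.0)

print(f"first doubling:  {first_doubling:.2f} W/m2")
print(f"second doubling: {second_doubling:.2f} W/m2")

# The quoted 3.7 +/- 0.4 W/m2 range brackets the fitted value for a doubling.
in_quoted_range = 3.3 <= first_doubling <= 4.1
print("within 3.7 +/- 0.4:", in_quoted_range)
```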

That whole paragraph is a clickable link to a 2007 post at RC. The constant 5.35 is a curve fit to available data which tries to "fit" surface temperature forcing to that elusive Effective Radiant Layer (ERL) forcing and assumes a perfect black body response by both so that the temperature of one is directly related to the other. For absolutely childlike simplification, there is nothing wrong with this but I believe we have moved beyond that stage finally, hopefully.

Note in the quoted paragraph the "uncertain by about 10%" fairy tale. 30% skewed high is a better guess, and that assumes that the "surface" is actually some relevant surface on this particular planet. Thanks to the hiatus that sometimes does and sometimes doesn't exist, someone finally noticed that the "surface" used in climate models doesn't match the "surface" being modeled for global mean surface temperature anomaly, which doesn't have any agreed upon temperature for there to be an ideal black body heat emission from to interact with the elusive ERL. Since another crew actually spent nearly ten years measuring CO2 forcing, only to find it to be less than the iconic 5.35 etc. by about 27 percent, I would expect just a bit more "back to basics" work so that the typical made-for-kindergarten simplifications provided to the masses reflect the huge expansion of collective climate knowledge. Instead you have Bill Nye, the not so up to date science guy, peddling the same old same old, though I have to admit even he is getting some heat from the "causers".

Try to remember that the highest range of impact assumed a 3 times amplification of the basic CO2 enhancement and when you find that the CO2 enhancement is about 30% less than expected, the amplification would also be considerably less, "all else remaining equal."

That RC post also uses the disc in space input power simplification which ignores the physical properties of the water portion (about 65% of the globe if you allow for critical angles) of the "surface" which requires a bit more specific estimate of "average" power. Once again, a fine "simplification" for the K-9 set, but not up to snuff for serious discussion. That issue has one paper so far but will have a few more.

Wednesday, May 18, 2016

A Little More on Teleconnection

The Bates 2016/2014 issue with simplifying estimates of climate sensitivity by reduction to tropical regions has some profound impacts. If you have followed my rants, the tropical oceans and oceans in general are my main focus. The tropical oceans are like the fire box of the heat engine and the poles are a large part of the heat sink. Space is of course the ultimate heat sink, but since there is a large amount of energy advection toward the poles, average global surface temperature depends on how well or efficiently energy moves horizontally as well as vertically. The atmosphere will adjust to about the exact same outward energy flow over time, as required to meet the energy in equals energy out requirement, with some small "imbalance" that might persist for centuries. The oceans provide that energy and are capable of storing energy related to the "imbalance", so if you "get" the oceans you will have "gotten" most of the problem. The correlation of the oceans with sensitivity and "imbalance" just provides an estimate of how much can be "got".

Since Bates 2016 uses a smaller "tropics" than most, 20S-20N, instead of the standard ~24S-24N, I have re-plotted the correlation of the Bates tropics with global oceans for both the new ERSSTv4 and the old standard HADSST. Both have a correlation of ~85% so if you use the Bates tropics as a proxy for global oceans you should "get" 85% of the information with a 3% to 6% variation that depends on which time frame you choose. If you happen to be a fan of paleo-ocean studies, you could expect up to that same correlation provided you do an excellent job building your tropical ocean reconstruction. If you are a paleo-land fan, a perfect land temperature reconstruction would give you a correlation of about 23% with the remaining 70% of the globe. That is because land is "noisy" thanks to somewhat random circulation patterns.
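
For readers who want to try this kind of comparison themselves, here is a minimal sketch of a Pearson correlation between a regional series and a "global" series. The two short anomaly lists are made-up numbers for illustration only, not ERSSTv4 or HADSST data, and the function name is hypothetical. Whether "85% of the information" should be read as r or as r squared (variance explained) is not spelled out above, so the sketch prints both.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant series")
    return cov / math.sqrt(var_x * var_y)


# Hypothetical anomaly series (made-up values, NOT real SST data).
tropics = [-0.20, -0.10, 0.00, 0.15, 0.10, 0.30, 0.25, 0.40]
global_ocean = [-0.25, -0.05, -0.02, 0.10, 0.18, 0.22, 0.30, 0.35]

r = pearson_r(tropics, global_ocean)
print(f"r = {r:.2f}, r^2 = {r * r:.2f}")
```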

Outgoing Long Wave Radiation (OLR) is also noisy, but thanks to interpolation methods and lots of statistical modeling, OLR is the best indication of the "imbalance". The Bates tropical OLR using NOAA data has a 68% correlation with "global" OLR which means it is about twice as useful as the noisy land surface temperature if you are looking for a "global" proxy.

Interpolation methods used for SST and OLR will tend to enhance the "global" correlation so there is some additional uncertainty, but for government work, SST and OLR are pretty much the best of your choices.

"Believers" are adamant about using "all" the land surface temperature data, including infilling with synthetic data, if you are going to get a "reasonable" estimate of climate sensitivity with "reasonable" meaning high and highly uncertain. Basic thermodynamics though allows the use of several references, each with some flaws, but none "useless". If you can only get the answer you are looking for with one particular reference, you are not doing a very good job of checking your work. Perpetual motion discovery is generally a product of not checking your work very well. Believers demanding that certain data be included and only certain frames of reference be used is a bit like what you would expect from magicians and con artists.

In the first chart I used 1880 to 1899 as the "preindustrial" baseline thanks to Gavin Schmidt. There is about 4.5 billion years worth of "preindustrial", and since the data accuracy suffers with time, the uncertainty in Gavin's choice of "preindustrial" is on the order of half a degree, which is about 50% of the warming.

The true master of teleconnection abuse would be Michael E. Mann. The 1000 years of global warming plot he has produced is based primarily on tree ring and land based temperature proxies. So if he gets a perfect replication of past land temperatures, based on the correlation of land versus ocean instrumental data he would at most "get" about 23% correlation with 70% of the global "surface" temperature. The Oppo et al. 2009 overlay, on the other hand, could get 85% correlation with that 70% of the global surface if they did a perfect job, so their work should be given more "weight" than Mann's. If you can eyeball the 1880 to 1899 baseline on the chart, you can see there is a full 1C of uncertainty in what "preindustrial" should be. In case you are wondering, the Indo-Pacific Warm Pool region has about 75% correlation with "global" oceans, so IPWP isn't "perfect" but it is much better than alpine trees.

The whole object of using "teleconnections" is to find the best correlation with "global" and to use relative correlations to estimate uncertainty. This is what Bates 2016 has done. He limited his analysis to the region with the "best" data that represents the most energy and based his uncertainty range on the estimated correlation of his region of choice with "global". Lots of caveats, but Bates has fewer than Mann and the greater than 3C sensitivity proponents.

So the debate will continue, but when "believers" resort to antagonistic tactics to discredit quite reasonable analysis they should expect "hoax" claims since they are really using con artist tactics whether they know it or not.

Update:

Even the weak 23% correlation between tropical SST and the global surface temperature is "significant" when the number of points used is large. With a bit of smoothing to reduce noise though you can get an eyeball correlation and thanks to Gavin's baseline you can see that the northern hemisphere extra tropical region is the odd region out. If you recall, Mann's "global" reconstruction was really a northern hemisphere ~20N to 90N reconstruction with a few southern hemisphere locations kind of tossed in after the fact. That 20N-90N area is about 33% of the globe and happens to be about the noisiest 33% thanks to lower specific heat capacity. Understandably people are concerned with climate change in the northern extra tropical region to the point they are biased to that region, but an energy balance model just happens to focus on energy not real estate bias.
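
The area figure is easy to verify: on a sphere, the fraction of surface area between two latitudes depends only on the difference of the sines of those latitudes. A small sketch, assuming a perfectly spherical Earth (the function name is made up for illustration):

```python
import math


def band_area_fraction(lat_south_deg, lat_north_deg):
    """Fraction of a sphere's surface between two latitudes (degrees, south negative)."""
    south = math.sin(math.radians(lat_south_deg))
    north = math.sin(math.radians(lat_north_deg))
    return (north - south) / 2.0


north_extratropics = band_area_fraction(20.0, 90.0)  # the 20N-90N region
bates_tropics = band_area_fraction(-20.0, 20.0)      # the Bates 20S-20N tropics

print(f"20N-90N: {north_extratropics:.1%} of the globe")
print(f"20S-20N: {bates_tropics:.1%} of the globe")
```

Both bands come out at roughly a third of the globe, with the 20N-90N share matching the 33% quoted above.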

If you are a fan of pseudo-cycles you probably notice that the 20N-90N regional temperature looks a lot like the Atlantic Multi-decadal Oscillation (AMO). There is likely some CO2 related amplification of the pseudo-cycle along with land mass amplification due to lower specific heat capacity, but that pesky 0.5 C degree wandering would make it hard to determine what is caused by what. For the other 67% of the globe, land and ocean included, the Bates 20S-20N tropics serves as a reasonable "proxy", "index" or "teleconnection" depending on your choice of terms.

Since Bates 2016 uses a smaller "tropics" than most, 20S-20N, instead of the standard ~24S-24N, I have re-plotted the correlation of the Bates tropics with global oceans for both the new ERSSTv4 and the old standard HADSST. Both have a correlation of ~85% so if you use the Bates tropics as a proxy for global oceans you should "get" 85% of the information with a 3% to 6% variation that depends on which time frame you choose. If you happen to be a fan of paleo-ocean studies, you could expect up to that same correlation provided you do an excellent job building your tropical ocean reconstruction. If you are a paleo-land fan, a perfect land temperature reconstruction would give you a correlation of about 23% with the remaining 70% of the globe. That is because land is "noisy" thanks to somewhat random circulation patterns.

Outgoing Long Wave Radiation (OLR) is also noisy, but thanks to interpolation methods and lots of statistical modeling, OLR is the best indication of the "imbalance". The Bates tropical OLR using NOAA data has a 68% correlation with "global" OLR which means it is about twice as useful as the noisy land surface temperature if you are looking for a "global" proxy.

Interpolation methods used for SST and OLR will tend to enhance the "global" correlation so there is some additional uncertainty, but for government work, SST and OLR are pretty much the best of your choices.

"Believers" are adamant about using "all" the land surface temperature data, including infilling with synthetic data, if you are going to get a "reasonable" estimate of climate sensitivity with "reasonable" meaning high and highly uncertain. Basic thermodynamics though allows the use of several references, each with some flaws, but none "useless". If you can only get the answer you are looking for with one particular reference, you are not doing a very good job of checking your work. Perpetual motion discovery is generally a product of not checking your work very well. Believers demanding that certain data be included and only certain frames of reference be used is a bit like what you would expect from magicians and con artists.

In the first chart I used 1880 to 1899 as the "preindustrial" baseline thanks to Gavin Schmidt. There is about 4.5 billions years worth of "preindustrial" and since the data accuracy suffers with time, the uncertainty in Gavin's choice of "preindustrial" is on the order of half a degree which is about 50% of the warming.

The true master of teleconnection abuse would be Michael E. Mann. The 1000 years of global warming plot he has produced is based on primarily tree ring and land based temperature proxies. So if he gets a perfect replication of past land temperatures based on the correlation of land versus ocean instrumental data, he would at most "get" about 23% correlation with 70% of the global "surface" temperature. The Oppo et al. 2009 overlay on the other hand could get 85% correlation with that 70% of the global surface if they did a perfect job, so their work should be given more "weight" than Mann's. If you can eyeball to 1880 to 1899 baseline on the chart you can see there is a full 1C of uncertainty in what "preindustrial" should be. In case you are wondering, the Indian Ocean Warm Pool region has about 75% correlation with "global" oceans, so IPWP isn't "perfect" but it is much better than alpine trees.

The whole object of using "teleconnections" is to find the best correlation with "global" and to use relative correlations to estimate uncertainty. This is what Bates 2016 has done. He limited his analysis to the region with the "best" data that represents the most energy and based his uncertainty range on the estimated correlation of his region of choice with "global". Lots of caveats, but Bates has fewer than Mann and the greater than 3C sensitivity proponents.

So the debate will continue, but when "believers" resort to antagonistic tactics to discredit quite reasonable analysis they should expect "hoax" claims since they are really using con artist tactics whether they know it or not.

Update:

Even the weak 23% correlation between tropical SST and the global surface temperature is "significant" when the number of points used is large. With a bit of smoothing to reduce noise though you can get an eyeball correlation and thanks to Gavin's baseline you can see that the northern hemisphere extra tropical region is the odd region out. If you recall, Mann's "global" reconstruction was really a northern hemisphere ~20N to 90N reconstruction with a few southern hemisphere locations kind of tossed in after the fact. That 20N-90N area is about 33% of the globe and happens to be about the noisiest 33% thanks to lower specific heat capacity. Understandably people are concerned with climate change in the northern extra tropical region to the point they are biased to that region, but an energy balance model just happens to focus on energy not real estate bias.
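To put a rough number on why a weak correlation still counts as "significant" when there are enough points, here is a minimal sketch using the standard t-statistic for a Pearson correlation. It assumes the "23%" is read as a correlation coefficient r = 0.23 and that the points are independent. The sample sizes are hypothetical, and real temperature series are autocorrelated, which cuts the effective number of points.

```python
import math

def corr_t_stat(r, n):
    """t-statistic for testing a Pearson correlation r against zero,
    given n independent points (n - 2 degrees of freedom)."""
    return r * math.sqrt((n - 2) / (1.0 - r * r))

r = 0.23  # assumption: the "23%" is read as r = 0.23
for n in (20, 100, 1600):  # hypothetical sample sizes, not taken from any data set
    t = corr_t_stat(r, n)
    # |t| above roughly 2 is the usual two-sided 5% threshold once n is large
    print(f"n={n:5d}  t={t:5.2f}")
```

The same r goes from nowhere near the threshold with a couple dozen points to far past it with over a thousand, which is all "significant" is saying here. It says nothing about how much of the variance is actually explained.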

If you are a fan of pseudo-cycles you probably notice that the 20N-90N regional temperature looks a lot like the Atlantic Multi-decadal Oscillation (AMO). There is likely some CO2 related amplification of the pseudo-cycle along with land mass amplification due to lower specific heat capacity, but that pesky 0.5 C degree wandering would make it hard to determine what is caused by what. For the other 67% of the globe, land and ocean included, the Bates 20S-20N tropics serve as a reasonable "proxy", "index" or "teleconnection" depending on your choice of terms.

Sunday, May 15, 2016

Does it Teleconnect or not?

While the progress of climate science blazes along at its usual snail's pace it is pretty hard to find any real science worth discussing. The political and "Nobel (noble) Cause" side of things has also been done to death. Once in a while though something interesting pops up :)

Professor J. Ray Bates has published a new paper on lower estimates of climate sensitivity, Bates 2016, which appears to be an update of his 2014 paper that was supposedly "debunked" by Andrew "Balloons" Dessler. My Balloons/Balloonacy/Balloonatic nicknames for Prof. Dessler are based on his valiant attempt to prove that there was tropical tropospheric warming by using the rise and drift rates of radiosonde balloons instead of the onboard temperature sensing equipment. Andy did one remarkable job of finding what he wanted in a sea of noisy nonsense. Andy seems to like noisy and is a bit noisy himself.

The issue raised by Balloonacy was that Bates used data produced by Lindzen and Choi 2011 (LC11), which was used to support the Lindzen "Iris" theory. That theory is basically that increased water vapor should tend to cause more surface radiant heat loss, because water vapor tends to behave a bit contrary to the expectation of climate modelers. Since LC11 was concerned only with the tropics they used data restricted to the tropics. Bates proposed that the tropics are a pretty good proxy for global, that using the tropics produced a lower estimate of climate sensitivity, and that thanks to better satellite data the uncertainty in the estimates was much smaller than in "global" estimates.

This chart of Outgoing Longwave Radiation (OLR) tends to support Bates. The "global" OLR interpolation by NOAA has a 68% correlation with 20S-20N and an 80% correlation with 30S-30N. If you consider that the 30S-30N band represents about 50% of the surface and about 75% of the surface energy, this "high" correlation, for climate science at least, makes perfectly good sense if you happen to be using energy balance models. Balloonacy models not so much, but for energy related modeling using the majority of the available energy is pretty standard.
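The surface fractions quoted for these latitude bands are easy to check. On a sphere, the share of the surface between two latitudes is half the difference of the sines of those latitudes. A minimal sketch, assuming a perfectly spherical Earth (the function name is just for illustration):

```python
import math

def band_area_fraction(lat_south, lat_north):
    """Fraction of a sphere's surface between two latitudes.
    Latitudes are in degrees, with southern latitudes negative."""
    return (math.sin(math.radians(lat_north)) - math.sin(math.radians(lat_south))) / 2.0

bands = [("30S-30N", -30, 30), ("20S-20N", -20, 20), ("20N-90N", 20, 90)]
for name, south, north in bands:
    share = 100 * band_area_fraction(south, north)
    print(f"{name}: {share:.1f}% of the surface")
```

This gives 50% for 30S-30N, about 34% for 20S-20N and about 33% for 20N-90N. It only covers area. The roughly 75% share of surface energy is a separate estimate that this sketch does not try to reproduce.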

What Bates did with his latest was use two models, Model A with the 20S-20N "tropics" and Model B which used the "extra-tropical" region, or everything other than 20S-20N, to try and illustrate to Balloonacy that majority energy regions in energy balance models tend to rule the roost.

The Balloonatics btw have no problem "discovering" teleconnections should the teleconnections "prove" their point, but seem to be a bit baffled by reasons that certain teleconnections might be more "robust" than others.

Unfortunately, data massaging (spell check recommended massacring which might be a better choice) methods can tend to impact the validity of teleconnection correlations. The "interpolation" required to create "global" data sets tends to smear regions, which artificially increases calculated correlations. So no matter how much you try to determine error ranges, there is likely some amount of unknown or unknowable uncertainty that is a product of natural and man made "smoothing". Nit picking someone else's difficulties with uncertainty after you have pushed every limit to "find" your result is a bit comical.
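The smearing effect is simple to demonstrate with made-up numbers. The sketch below is a toy, not a reconstruction of any real data set: two synthetic "regions" are independent random noise, and a hypothetical infilling step replaces region B with a 50/50 blend of itself and its neighbor A. The blend weight and series length are arbitrary assumptions.

```python
import random

def pearson(x, y):
    """Plain Pearson correlation coefficient of two equal-length lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5

random.seed(0)  # fixed seed so the toy example is repeatable
n = 500         # arbitrary series length
region_a = [random.gauss(0, 1) for _ in range(n)]
region_b = [random.gauss(0, 1) for _ in range(n)]  # independent of region_a by construction

# Hypothetical "infilling": half of region B's value is borrowed from neighbor A
weight = 0.5
region_b_infilled = [weight * a + (1 - weight) * b for a, b in zip(region_a, region_b)]

r_raw = pearson(region_a, region_b)
r_infilled = pearson(region_a, region_b_infilled)
print(f"raw r = {r_raw:.2f}, after infilling r = {r_infilled:.2f}")
```

Two regions that have nothing to do with each other end up strongly correlated once one is partly built from the other, and nothing in the infilled series tells you how much of that correlation was manufactured.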

The general "follow the tropics" approach, in particular following the tropical warm pools, is growing in popularity with the younger set of climate scientists, just like follow the money and follow the energy are popular if you want to simplify rather complex problems. Looking for answers in the noisiest and most uncertain data is a bit like P-Hacking, which is popular with the published more than 200 papers set. Real science should take a bit of time I imagine.

In any case, Professor Bates has reaffirmed his low estimate of climate sensitivity which will either prove somewhat right or not over the next decade or so. Stay tuned :)

Here is the link to J. Ray Bates' paper. http://onlinelibrary.wiley.com/doi/10.1002/2015EA000154/epdf
