Final? Update: I was asked whether the Carnot efficiency could be used to determine a range of surface temperatures for Earth. While Carnot has limits, it can provide a lot of information, so I set up this simple Carnot Divider model.

The Carnot Divider is just a simple use of the Carnot efficiency to establish or define a frame of reference common to both the oceans and atmosphere. Since thermodynamics allows a great deal of flexibility when selecting a frame of reference, this is defining my choice, not a standard frame of reference. You can determine if it has merit or not, but since many did not understand my use of a moist air boundary envelope, this may help explain why it may be useful.

The rules are pretty simple. Given that energy in has to equal energy out of a stable open system, the sum of the work done on the ocean "system" and the atmospheric "system" must equal 50% of the energy available. Work in this case is heating. "System" is in quotes because I am defining two separate but coupled systems.

For the oceans system, Tc(oceans) is the approximate minimum temperature, the freezing point of salt water with 35 g/kg salinity, which is about -1.9 C degrees. Tc(turbo) is the approximate temperature of the Turbopause, about -89.2 C degrees. Th is the maximum SST at which the work, W(oceans) plus W(turbo), equals 50% of the total work potential. Since Carnot efficiency is based solely on the temperatures of source and sink, no area adjustments are required, no shape is required, no TSI is required, no rotational rate is required, nada. Nothing but Ein=Eout, the -1.9 C freezing point of salt water and the Turbopause temperature of about -89.2 C degrees.
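The rule above can be written out as a few lines of Python. This is only a sketch of my reading of the rule: the function names are made up for illustration, and the closed form for Th simply rearranges (1 - Tc(oceans)/Th) + (1 - Tc(turbo)/Th) = 0.5.

```python
def carnot_efficiency(t_hot_k, t_cold_k):
    """Ideal Carnot efficiency between a source and a sink, both in kelvin."""
    return 1.0 - t_cold_k / t_hot_k


def divider_source_temperature(tc_ocean_k, tc_turbo_k, target=0.5):
    """Source temperature Th at which the two efficiencies sum to `target`.

    (1 - Tc_o/Th) + (1 - Tc_t/Th) = target  =>  Th = (Tc_o + Tc_t) / (2 - target)
    """
    return (tc_ocean_k + tc_turbo_k) / (2.0 - target)


# Sink temperatures used in the post: 271.25 K (oceans) and 184 K (Turbopause).
th = divider_source_temperature(271.25, 184.0)
print(th, th - 273.15)  # source temperature in K and in C
print(carnot_efficiency(th, 271.25), carnot_efficiency(th, 184.0))
```

With the two sinks fixed, the 50% requirement leaves exactly one value of Th, which is why no area, shape or TSI enters the calculation.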

| | Ocean Sink | Turbo Sink | Ocean Area | Land Area | Turbo Area |
|---|---|---|---|---|---|
| C degrees | -1.9000 | -89.1500 | 362.0000 | 148.0000 | 525.3000 |
| K degrees | 271.2500 | 184.0000 | 287.3750 | 285.6000 | |
| Wm-2 (eff) | 306.9460 | 64.9912 | 386.7043 | 377.2384 | |

| Aqua World | K degree | C degree | | Real World | K degree | C degree |
|---|---|---|---|---|---|---|
| Ocean Surf | 287.3750 | 14.2250 | | Land Surf. | 285.6000 | 12.4500 |
| Atmosphere | 243.7500 | -29.4000 | | | | |
| SST Max | 303.5000 | 30.3500 | | | | |

| SST Max | Ocean eff | Turbo eff | Combined eff | LST | Land eff | Turbo eff (land) | Ocean in |
|---|---|---|---|---|---|---|---|
| 300.0000 | 0.0958 | 0.3867 | 0.4825 | 282.1000 | 0.5202 | 0.3477 | 0.1725 |
| 300.5000 | 0.0973 | 0.3877 | 0.4850 | 282.6000 | 0.5173 | 0.3489 | 0.1684 |
| 301.0000 | 0.0988 | 0.3887 | 0.4875 | 283.1000 | 0.5145 | 0.3501 | 0.1644 |
| 301.5000 | 0.1003 | 0.3897 | 0.4900 | 283.6000 | 0.5116 | 0.3512 | 0.1604 |
| 302.0000 | 0.1018 | 0.3907 | 0.4925 | 284.1000 | 0.5087 | 0.3523 | 0.1563 |
| 302.5000 | 0.1033 | 0.3917 | 0.4950 | 284.6000 | 0.5058 | 0.3535 | 0.1523 |
| 303.0000 | 0.1048 | 0.3927 | 0.4975 | 285.1000 | 0.5029 | 0.3546 | 0.1483 |
| 303.5000 | 0.1063 | 0.3937 | 0.5000 | 285.6000 | 0.5000 | 0.3557 | 0.1443 |
| 304.0000 | 0.1077 | 0.3947 | 0.5025 | 286.1000 | 0.4971 | 0.3569 | 0.1402 |
| 304.5000 | 0.1092 | 0.3957 | 0.5049 | 286.6000 | 0.4942 | 0.3580 | 0.1362 |
| 305.0000 | 0.1107 | 0.3967 | 0.5074 | 287.1000 | 0.4913 | 0.3591 | 0.1322 |
| 305.5000 | 0.1121 | 0.3977 | 0.5098 | 287.6000 | 0.4884 | 0.3602 | 0.1281 |
| 306.0000 | 0.1136 | 0.3987 | 0.5123 | 288.1000 | 0.4854 | 0.3613 | 0.1241 |
| 306.5000 | 0.1150 | 0.3997 | 0.5147 | 288.6000 | 0.4825 | 0.3624 | 0.1201 |
| 307.0000 | 0.1164 | 0.4007 | 0.5171 | 289.1000 | 0.4796 | 0.3635 | 0.1161 |
| 307.5000 | 0.1179 | 0.4016 | 0.5195 | 289.6000 | 0.4767 | 0.3646 | 0.1120 |

I used a spreadsheet to show the basic relationships. For the left hand Aqua World, both the Ocean efficiency and the Turbopause efficiency increase as the source temperature increases. The only limit is the 50% work done. At 303.5 K degrees, [1-Tc(oceans)/Th] + [1-Tc(turbo)/Th] = 0.5, or 50% of the available energy. Remember that this is based solely on the two sink temperatures selected and the 50% requirement. The effective energy of a surface at 303.5 K degrees is 481 Wm-2, which would require half of the energy available, meaning the energy available is 962 Wm-2 or 70.7% of the solar constant of 1361 Wm-2. Based on the simple Carnot Divider, the albedo, or amount of energy reflected, should be 29.9% of the total energy available and, just for fun, 29.9% of the available energy is 407 Wm-2. The Tc(turbo) is based on a best guess, not a boundary based on sound physics.
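The "effective energy" figures come from the Stefan-Boltzmann relation. Here is a minimal sketch, assuming a rounded constant of 5.67e-8 W m-2 K-4 (my assumption, chosen because it appears to reproduce the spreadsheet values) and a hypothetical helper name.

```python
SIGMA = 5.67e-8  # rounded Stefan-Boltzmann constant, W m^-2 K^-4 (assumed value)


def effective_energy(t_k):
    """Black body flux in W m^-2 for a surface at t_k kelvin."""
    return SIGMA * t_k ** 4


surface = effective_energy(303.5)        # energy of the 303.5 K surface
available = 2.0 * surface                # the 50% rule: surface is half of what is available
fraction_of_solar = available / 1361.0   # share of the 1361 W m^-2 solar constant

print(round(surface), round(available), round(100 * fraction_of_solar, 1))
print(round(effective_energy(271.25), 3), round(effective_energy(184.0), 4))
```

The second print line is there to check the sink values against the "Wm-2 (eff)" row of the spreadsheet.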

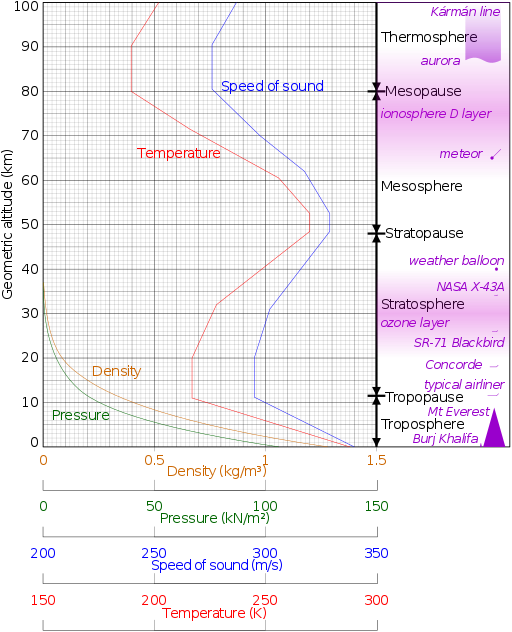

Tc(turbo), if you follow my ramblings, is equal to the lowest temperature ever measured at the surface of the Earth. A radiant reference "shell" should be perfectly isothermal. Any temperature below -89.2 C would not encompass the total surface area of Earth. Tc(turbo) should be the first near ideal reference "shell", which also happens to be the approximate temperature of the Turbopause, where all turbulent mixing stops in the atmosphere approximately 100 kilometers above sea level. This reference shell is obscured by the stratosphere temperature inversion, but so far the Carnot Divider appears to agree with my choice of atmospheric sink temperature.

The right hand "Real World" uses the Carnot Divider to estimate land surface temperature, assuming the oceans provide a portion of the energy to the land mass. In this case the Carnot Divider is a true divider. The efficiency from the land surface to Tc(turbo) increases with land surface temperature, while the efficiency of heat transfer from the oceans decreases with land surface temperature. At 50% combined efficiency, the land surface energy loss should be proportional to the total system loss. This indicates a land surface temperature of 285.6 K (12.45 C) would be ideal. The "Ocean in" value is calculated using the Carnot efficiency from the SST max to the land sink temperature, scaled by the ratio of ocean to land area using the values at the top of the spreadsheet. The 12.45 C is slightly higher than the best estimate of actual land surface temperature and the 14.225 C is lower than the best estimate of the actual ocean surface temperature, but considering the small number of assumptions made, the estimates are pretty impressive. Most importantly, the 33 C and albedo assumptions were not used and the albedo was actually discovered, indicating that the guess of Tc(turbo) was a good one.
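The land side of the divider can be sketched the same way. The formula for "Ocean in" below is my reading of the description, (1 - LST/SSTmax) times the ocean/land area ratio; it is an assumption, though it does seem to reproduce the spreadsheet columns. The function names and the bisection bracket are hypothetical choices.

```python
def land_balance(t_land_k, th_k=303.5, tc_turbo_k=184.0,
                 ocean_area=362.0, land_area=148.0):
    """Combined land efficiency: land-to-Turbopause term plus the 'Ocean in' term."""
    turbo_eff = 1.0 - tc_turbo_k / t_land_k
    ocean_in = (1.0 - t_land_k / th_k) * (ocean_area / land_area)
    return turbo_eff + ocean_in


def solve_land_temperature(target=0.5, lo=250.0, hi=303.5, tol=1e-6):
    """Bisection for the land temperature where land_balance equals target.

    Within this bracket land_balance falls as the land temperature rises,
    so a value above target means the root lies at a higher temperature.
    """
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if land_balance(mid) > target:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


lst = solve_land_temperature()
print(round(lst, 1), round(lst - 273.15, 2))  # land surface temperature in K and C
```

A bisection is used only because it is easy to follow; the balance is a quadratic in the land temperature and could also be solved directly.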

There are a few things that need to be considered with this simple model. The oceans system is enclosed in the atmospheric or Turbopause system. The efficiency of the oceans system is 0.1063, or 10.63% of the 481 Wm-2 input energy, which would be 51.1 Wm-2 converted into thermal energy in the oceans. That combined with the atmospheric work, 0.3937*481=189.4 Wm-2, equals about 240.4 Wm-2, the commonly quoted black body energy of Earth, which does not include the Turbopause shell effective energy of 65 Wm-2.

Since the Carnot Divider is based solely on temperatures, the 240.4 Wm-2 apparent black body energy plus the 65 Wm-2 Turbopause shell energy equals 305.4 Wm-2, which would be a surface at a temperature of -2.24 C, slightly lower than the -1.9 C Tc(oceans) value. That would indicate that some minor tweaking of the sink temperatures is in order to close the energy balance, but for a simple dumb model, I would think it is worth a little more investment in time.
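The work split and the closure check can be sketched as follows. The constant 5.67e-8 is an assumed rounded value and the helper names are hypothetical. Note that the two work terms sum to half the surface energy by construction, which lands within rounding of the figure quoted above.

```python
SIGMA = 5.67e-8  # rounded Stefan-Boltzmann constant, W m^-2 K^-4 (assumed value)


def work_split(th_k=303.5, tc_ocean_k=271.25, tc_turbo_k=184.0):
    """Return (ocean work, atmospheric work, surface energy) in W m^-2."""
    surface = SIGMA * th_k ** 4
    ocean_work = (1.0 - tc_ocean_k / th_k) * surface
    turbo_work = (1.0 - tc_turbo_k / th_k) * surface
    return ocean_work, turbo_work, surface


def temperature_from_energy(flux):
    """Invert the Stefan-Boltzmann relation: flux in W m^-2 to kelvin."""
    return (flux / SIGMA) ** 0.25


ocean_work, turbo_work, surface = work_split()
print(round(ocean_work, 1), round(turbo_work, 1), round(ocean_work + turbo_work, 1))

# Temperature of a surface emitting the 305.4 W m^-2 quoted in the text.
print(round(temperature_from_energy(305.4) - 273.15, 2))
```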

Another thing for some of the Sky Dragon Slayers to consider is that the Turbopause "shell" temperature is dependent on the radiant properties of atmospheric gases, primarily CO2. This higher, colder "shell" or effective radiant layer is higher and colder than mentioned by the Climate Science Gurus, but is a purely radiant boundary.

Finally for this post, the albedo energy of 407 Wm-2 plus the Turbopause shell radiant energy of 65 Wm-2 is equal to 472 Wm-2. With 481 Wm-2 of work done in the systems via thermal energy, some portion of the 481-472=9 Wm-2 difference is likely due to non radiant energy input into the systems. That would be gravitational, rotational and geothermal energy, which combined may be on the same order of magnitude as the estimated CO2 forcing.

Mo Carnot Stuff:

The Carnot Divider, which uses one common source temperature with sink temperatures for two nested systems, produces what would be an ideal ratio of coupled system performance. For the Aqua World example, ocean heat transfer efficiency plus atmospheric heat transfer efficiency, or energy in (Ein), has to equal the combined system inefficiency, or energy out (Eout). If the sink temperatures are fixed, there is only one internal source temperature that will produce a "balanced" combined system. For a -1.9 C ocean sink, Tc(oceans), and a -89.2 C atmosphere sink, Tc(turbo), the maximum common source temperature (Th) would be 30.35 C degrees.

If Th increases, the atmosphere would warm more quickly than the oceans because of the different internal system efficiencies, producing an internal imbalance. Since the ocean sink temperature is greater than the atmospheric sink temperature, where that imbalance exists and what would happen with an imbalance is not obvious. So let's consider the third wiper on the Carnot Divider diagram. With Tc(oceans) = 271.25 K and Tc(turbo) = 184 K, the ocean sink to atmosphere sink efficiency is 1-184/271.25 = 0.322, or 32.2% maximum efficiency. The Carnot efficiency calculation is for a maximum efficiency, not an actual efficiency. In a balanced system, the maximum heat transfer efficiency is 32.2 percent, and with the source in this case being 271.25 K degrees (307 Wm-2) and the sink being 184 K (65 Wm-2), the total energy transfer would be 242 Wm-2, of which 32.2 percent would be about 78 Wm-2. The actual sink temperature is 184 K (65 Wm-2), which if the transfer were ideal would be about 78 Wm-2 or 192.5 K degrees. There is possibly 13 Wm-2 less energy transferred than could be transferred.
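The sink-to-sink arithmetic in that paragraph can be reproduced with a short sketch. Again, 5.67e-8 is an assumed rounded constant and the function name is hypothetical.

```python
SIGMA = 5.67e-8  # rounded Stefan-Boltzmann constant, W m^-2 K^-4 (assumed value)


def sink_to_sink(tc_ocean_k=271.25, tc_turbo_k=184.0):
    """Ocean sink treated as the source, Turbopause as the sink.

    Returns (max efficiency, flux difference, ideal transfer, ideal sink temperature).
    """
    eff = 1.0 - tc_turbo_k / tc_ocean_k
    flux_diff = SIGMA * (tc_ocean_k ** 4 - tc_turbo_k ** 4)
    ideal_transfer = eff * flux_diff
    ideal_temp = (ideal_transfer / SIGMA) ** 0.25
    return eff, flux_diff, ideal_transfer, ideal_temp


eff, flux_diff, ideal_transfer, ideal_temp = sink_to_sink()
print(round(eff, 3), round(flux_diff), round(ideal_transfer, 1), round(ideal_temp, 1))
```

The product of the efficiency and the flux difference comes out a little under 78 Wm-2, which is the value that corresponds to the 192.5 K quoted.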

There is more information in the ocean/atmosphere efficiency ratio. At the 50% Ein=Eout requirement, the ocean efficiency from 303.5 K to the 271.25 K sink is 10.63 percent and the efficiency from the 303.5 K source to the 184 K sink is 39.37 percent. That ratio, 10.63/39.37=0.27, we can call the heat uptake ratio, which should be related to the specific heat capacities of the liquid (oceans) and gas (atmosphere) systems. The specific heat of dry air is approximately 1.06 Joules per gram K at sea level and 273.15 K degrees, and the specific heat capacity of salt water is 3.98 Joules per gram K at 273.15 K degrees, which would be a ratio of 26.6 percent.
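The two ratios can be put side by side in a few lines. The specific heat values are the ones quoted in the text, not independently checked here, and the function name is hypothetical.

```python
def heat_uptake_ratio(th_k, tc_ocean_k, tc_turbo_k):
    """Ocean efficiency divided by Turbopause efficiency at a common source Th."""
    ocean_eff = 1.0 - tc_ocean_k / th_k
    turbo_eff = 1.0 - tc_turbo_k / th_k
    return ocean_eff / turbo_eff


uptake = heat_uptake_ratio(303.5, 271.25, 184.0)

# Specific heats as quoted in the text, in J per gram K.
specific_heat_ratio = 1.06 / 3.98

print(round(uptake, 3), round(specific_heat_ratio, 3))
```

Algebraically the uptake ratio reduces to (Th - Tc(oceans)) / (Th - Tc(turbo)), so it depends only on the temperature gaps between the source and each sink.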

The Carnot Divider does not provide much information on the various internal states the system may take or the settling time between states, but it does indicate that there is a fairly narrow stable range dependent on maximum temperatures, not averages, and that specific heat capacity is a major consideration in the range of thermal efficiencies.

Mo Carnot Update:

| Aqua World Salt | Ocean Sink | Turbo Sink | Ocean Max | Ocean Ave. |
|---|---|---|---|---|
| C degrees | -2.0000 | -89.1500 | 30.2500 | 14.1250 |
| K degrees | 271.1500 | 184.0000 | 303.4000 | 287.275 |
| Wm-2 (eff) | 306.4937 | 64.9912 | 480.4469 | 386.1663 |
| Work | 51.0692532146 | 189.0750025992 | | |
| Percentage | | %Ocean | | %Turbo |
| 0.5 | | 0.1062953197 | | 0.3935398813 |
| Combined | | Tc(ocean) | | Tc(Turbo) |
| 303.4 | | 271.15 | | 184 |

| Aqua World Fresh | Ocean Sink | Turbo Sink | Ocean Max | Ocean Ave. |
|---|---|---|---|---|
| C degrees | 0.0000 | -89.1500 | 31.5500 | 15.7750 |
| K degrees | 273.1500 | 184.0000 | 304.7000 | 288.925 |
| Wm-2 (eff) | 315.6370 | 64.9912 | 488.7344 | 395.1150 |
| Work | 50.6057406151 | 193.6010425433 | | |
| Percentage | | %Ocean | | %Turbo |
| 0.5 | | 0.10354447 | | 0.3961273384 |
| Combined | | Tc(ocean) | | Tc(Turbo) |
| 304.7 | | 273.15 | | 184 |

It looks like I got the size wrong, but this compares the Aqua World in salt and fresh versions. If the Turbopause sink is fixed, then there would be a different control range for a salt and a fresh ocean world. The ideal maximum SST for Salt Aqua World would be 303.4 K degrees (note I added one significant digit) and the Fresh Aqua World would have an ideal maximum SST of 304.7 K degrees. A 1.9 K degree change in the ocean sink (the table rounds the salt water sink to -2.0 C) would produce a 1.3 K change in the maximum SST. Fresh water flooding is often mentioned as a cause of THC stalling, but according to the simple Carnot Divider, the change in the freezing point would have a similar impact without all the complex modeling requirements.
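The salt versus fresh comparison comes down to how strongly the maximum SST responds to the ocean sink. Here is a sketch under the same 50% rule, with a hypothetical function name and the sink temperatures taken from the table.

```python
def max_sst(tc_ocean_k, tc_turbo_k=184.0, target=0.5):
    """Source temperature where the ocean and Turbopause efficiencies sum to target."""
    return (tc_ocean_k + tc_turbo_k) / (2.0 - target)


salt = max_sst(271.15)   # salt water sink, -2.0 C as in the table
fresh = max_sst(273.15)  # fresh water sink, 0.0 C

# With the Turbopause sink fixed, each kelvin of ocean sink change
# moves the maximum SST by 1 / (2 - target) kelvin.
sensitivity = (fresh - salt) / (273.15 - 271.15)

print(round(salt, 1), round(fresh, 1), round(fresh - salt, 2), round(sensitivity, 3))
```

Because the sensitivity is a constant two thirds under the 50% rule, a 1.9 K or a 2.0 K shift in the freezing point both round to a change of about 1.3 K in the maximum SST.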

I hope some of you could follow how effective even the simplest of models can be. When a Carnot efficiency model can get this close it says something about the simplicity of the Earth Climate System once you eliminate the noise.

Note: I may come back to proof this later since this is a work in progress, so watch out for typos and mistakes.

I am finished with this one. Now that I have double checked the "Real World" side of the first spreadsheet, I think there can be some improvements, but those will require actual area and shape considerations. For a simple model though, it is pretty amazing.