New Computer Fund

Tuesday, February 28, 2012

What do You Choose to Believe?

The Medieval Warm Period was only a regional event. If that is your belief, then warming in the 20th century has to be abnormal and requires explanation. The chart above is mine, using the South American Temperature Reconstruction of R. Neukom, J. Luterbacher, R. Villalba, M. Küttel, D. Frank, P.D. Jones, M. Grosjean, H. Wanner, J.-C. Aravena, D.E. Black, D.A. Christie, R. D'Arrigo, A. Lara, M. Morales, C. Soliz-Gamboa, A. Srur, R. Urrutia, and L. von Gunten. I don't know any of those people, but they seem to believe that the Medieval Warm Period was not a regional event. Looking at their data, South America has been warming since the 1400s. South America may have had its MWP, just a little out of sequence with some parts of North America.

" Although we conclude, as found elsewhere, that recent warming has been substantial relative to natural fluctuations of the past millennium, we also note that owing to the spatially heterogeneous nature of the MWP, and its different timing within different regions, present palaeoclimatic methodologies will likely "flatten out" estimates for this period relative to twentieth century warming, which expresses a more homogenous global "fingerprint." Therefore we stress that presently available paleoclimatic reconstructions are inadequate for making specific inferences, at hemispheric scales, about MWP warmth relative to the present anthropogenic period and that such comparisons can only still be made at the local/regional scale." That quote is by D'Arrigo, R.; Wilson, R.J.S.; Jacoby, G.C. from their abstract in the D'Arrigo et al. 2006 Northern Hemisphere Tree-Ring-Based STD and RCS Temperature Reconstructions.

If you focus on regions, your belief would be that AGW is less of a factor than natural warming, a recovery from the colder times of the Little Ice Age. If you look at the massive land use changes in the Northern Hemisphere, with nearly 3% of the total surface area of the globe converted from wilderness to human habitat, you would assume there is some anthropogenic warming that is beneficial and needed.

If you focus on CO2, you can determine that CO2 is causing global warming and that it is bad, because it is unintentional.

If you look at everything objectively, you may believe that some warming is good, some is natural and some may be bad, but we really don't know how much of any warming is due to any specific cause.

To my mind, having any opinion other than "we don't really know" requires belief, not science.

Monday, February 27, 2012

Volcanoes, Ice Ages and Average Temperatures?

Michael Mann has a post on RealClimate about his new paper on the Little Ice Age and volcanoes. The image above is from that post, along with part of its text explaining the different plots.

This is a plot I made of GISS temperatures downloaded from the NASA GIStemp site. Since the Antarctic data starts around 1902, I averaged all the latitude bands for the period 1902 to 2011 and plotted the global temperature average with and without the poles, along with the average of the two poles alone. As this chart is scaled, the familiar shape of the global temperature average shows up much more clearly in the average of the poles than in the global averages. For simplicity, the data is plotted "as is" from the GIStemp site, in hundredths of a degree, so 200 would actually be two degrees.
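A rough Python sketch of that averaging, assuming each year's zonal anomalies are available as a dictionary keyed by band label, in the GIStemp units of hundredths of a degree C. The band labels and the `band_averages` helper are hypothetical, and the sketch takes a simple unweighted mean of the bands (one reading of "averaged all the latitude bands"); an area-weighted mean would give the polar bands much less weight.

```python
# Hypothetical labels for eight zonal bands, ordered north to south.
BANDS = ["64N-90N", "44N-64N", "24N-44N", "EQU-24N",
         "24S-EQU", "44S-24S", "64S-44S", "90S-64S"]
POLES = ("64N-90N", "90S-64S")


def band_averages(row):
    """Return (all bands, no poles, poles only) in degrees C.

    `row` maps band label -> anomaly in hundredths of a degree C,
    so a stored value of 200 means 2.00 degrees.
    """
    def mean(values):
        return sum(values) / len(values)

    all_bands = mean([row[b] for b in BANDS])
    no_poles = mean([row[b] for b in BANDS if b not in POLES])
    poles = mean([row[b] for b in POLES])
    # Convert from hundredths of a degree to degrees.
    return all_bands / 100.0, no_poles / 100.0, poles / 100.0


# Made-up values: 100 (1.00 C) everywhere except 200 (2.00 C) at the poles.
example = {b: 100 for b in BANDS}
example["64N-90N"] = 200
example["90S-64S"] = 200
print(band_averages(example))  # (1.25, 1.0, 2.0)
```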

While looking into the Siberian agricultural impact on northern hemisphere temperature, I noticed that regional volcanoes had a strong impact on temperatures. The main ones are in Kamchatka, the Kuril Islands, Iceland, the Aleutian Islands and Alaska, though volcanoes in Washington State and Japan also have some impact.

Here the Arctic and Antarctic are plotted separately, along with the global average without the poles. The Antarctic data is not all that great because of the harsh conditions, and it is pretty obvious that the fluctuations in the measurements start decreasing as we approach the 1960s. What is particularly interesting is that the 1960 to present period shows a large increase in temperature that is not evident in any of the satellite records.
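One way to put a number on "the fluctuations start decreasing" is to compare the spread of the anomalies before and after a split year. This is a minimal sketch; the 1960 split and the `spread_by_period` name are assumptions for illustration, and it only measures the change in variability, it does not say whether the cause is climate or instrumentation.

```python
import statistics


def spread_by_period(years, values, split_year=1960):
    """Sample standard deviation of anomalies before, and from, split_year."""
    before = [v for y, v in zip(years, values) if y < split_year]
    after = [v for y, v in zip(years, values) if y >= split_year]
    if len(before) < 2 or len(after) < 2:
        raise ValueError("need at least two values on each side of the split")
    return statistics.stdev(before), statistics.stdev(after)


# Made-up anomalies: wider swings before 1960, narrower after.
years = [1950, 1951, 1952, 1960, 1961, 1962]
values = [-2, 0, 2, -1, 0, 1]
print(spread_by_period(years, values))  # (2.0, 1.0)
```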

Here I have plotted the global average without the Antarctic, using a 1902 to 2011 base period. The Antarctic is still on the plot, so the pre-1960s noise really stands out. Polar amplification of the greenhouse effect is projected and is a big deal. Other than the surface temperature records, though, there has been no measurable warming in the Antarctic, and Antarctic surface stations are notorious for being covered by snow drifts, which can make them read higher than average temperatures compared with when they are not covered. That could give the impression of variability that does not exist. Such is life, but how much impact could such errors have on the global temperature average?
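A back-of-the-envelope answer to that last question: on a sphere, the share of surface area between two latitudes is half the difference of their sines, so a uniform bias over a region shifts an area-weighted global mean by the bias times that share. This sketch assumes a hypothetical 1 C error spread evenly over 64S to 90S (the 64S edge is an assumption matching common zonal bands), and compares it with a plain average of eight equally weighted bands.

```python
import math


def band_area_fraction(lat_south, lat_north):
    """Fraction of the Earth's surface between two latitudes, in degrees."""
    return (math.sin(math.radians(lat_north))
            - math.sin(math.radians(lat_south))) / 2.0


def bias_in_global_mean(bias, fraction):
    """Shift in the global mean caused by a uniform bias over one region."""
    return bias * fraction


antarctic = band_area_fraction(-90.0, -64.0)          # about 5% of the globe
print(round(antarctic, 3))                             # 0.051
print(round(bias_in_global_mean(1.0, antarctic), 3))  # 0.051 C, area weighted
print(bias_in_global_mean(1.0, 1 / 8))                # 0.125 C, 8 equal bands
```

The point of the comparison is that how the bands are combined matters about as much as the size of the station error.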

First Attribution:

Southern South America Multiproxy 1100 Year Temperature Reconstructions

-----------------------------------------------------------------------

World Data Center for Paleoclimatology, Boulder

and

NOAA Paleoclimatology Program

-----------------------------------------------------------------------

NOTE: PLEASE CITE ORIGINAL REFERENCE WHEN USING THIS DATA!!!!!

NAME OF DATA SET:

Southern South America Multiproxy 1100 Year Temperature Reconstructions

LAST UPDATE: 3/2010 (Original receipt by WDC Paleo)

CONTRIBUTORS:

Neukom, R., J. Luterbacher, R. Villalba, M. Küttel, D. Frank, P.D. Jones, M. Grosjean, H. Wanner, J.-C. Aravena, D.E. Black, D.A. Christie, R. D'Arrigo, A. Lara, M. Morales, C. Soliz-Gamboa, A. Srur, R. Urrutia, and L. von Gunten.

IGBP PAGES/WDCA CONTRIBUTION SERIES NUMBER: 2010-031

WDC PALEO CONTRIBUTION SERIES CITATION:

Neukom, R., et al. 2010. Southern South America Multiproxy 1100 Year Temperature Reconstructions. IGBP PAGES/World Data Center for Paleoclimatology Data Contribution Series # 2010-031. NOAA/NCDC Paleoclimatology Program, Boulder CO, USA.

ORIGINAL REFERENCE:

Neukom, R., J. Luterbacher, R. Villalba, M. Küttel, D. Frank, P.D. Jones, M. Grosjean, H. Wanner, J.-C. Aravena, D.E. Black, D.A. Christie, R. D'Arrigo, A. Lara, M. Morales, C. Soliz-Gamboa, A. Srur, R. Urrutia, and L. von Gunten. 2010. Multiproxy summer and winter surface air temperature field reconstructions for southern South America covering the past centuries. Climate Dynamics, Online First March 28, 2010, DOI: 10.1007/s00382-010-0793-3

I hope that covers everyone :) The comparison of the GISS Antarctic region versus the temperature reconstruction by all those guys does not look to me like all that great of a match. Polar amplification due to greenhouse gas forcing can have a large impact on the global temperature average. So can polar amplification due to poor instrumentation. Which is which in this case seems to point to the poor instrumentation part of the puzzle. Their reconstruction uses proxies such as tree rings, which are not thermometers, so one would be more inclined to trust the instruments, but that is not always the best choice. Trust nothing - verify everything.
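"Not all that great of a match" can be put on a scale with a correlation coefficient over the years both series cover. A minimal sketch, assuming each series is a dictionary of year to value (for example a GISS Antarctic band and one season of the reconstruction); the function name is hypothetical, and a correlation says nothing about which series is right.

```python
import math


def overlap_correlation(a, b):
    """Pearson correlation of two {year: value} series over their shared years."""
    years = sorted(set(a) & set(b))
    if len(years) < 3:
        raise ValueError("need at least three overlapping years")
    xs = [a[y] for y in years]
    ys = [b[y] for y in years]
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("a constant series has no defined correlation")
    return sxy / math.sqrt(sxx * syy)


print(overlap_correlation({2000: 1.0, 2001: 2.0, 2002: 3.0},
                          {2000: 2.0, 2001: 4.0, 2002: 6.0}))  # 1.0
```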

This post is just about some of the questions I have on which data should carry more weight in determining average global conditions. The long-term tree ring proxies do not provide a good range of temperatures, but they should provide a fair indication of what "average" conditions should be.

Saturday, February 25, 2012

The Tropopause and the 4C Ocean Boundary Layers

I have a nasty habit of comparing the Tropopause and the 4C ocean thermal boundary layer in a way that is not very clear. This is mainly because I look at the situation more as a puzzle than as a serious fluid dynamics problem. As I mentioned in a previous post, I am looking for a simple back-of-the-envelope method of proving the limits of CO2 radiant forcing to a reasonable level of accuracy.

The main similarity is that both are thermal boundaries with sufficiently large sink capacity to buffer changes in radiant forcing. Their mechanisms are different but the impacts are very similar.

The ocean 4C boundary is a combination of thermal and density mechanisms that give it interesting thermal properties. Warming the 4C boundary from above results in upward convection, which tends to reduce the impact of the warming. Heat loss from the 4C layer has to flow from the warmer 4C water to colder water, but it also has to allow for constant density. If not, there would be turbulent mixing and there would be no 4C boundary layer.

So cooling, or actually maintenance, of the 4C boundary occurs mainly in the Antarctic region, where the air temperature is cold enough to cause the formation of sea ice. This also occurs in the Arctic, but seasonal melting produces less dense fresh water that has to mix, with turbulence, with the denser salt water. If there were no turbulent mixing, there would be a constant lens of fresh water in the Arctic summer. In the Antarctic, much more of the sea ice survives the summer months, so there is continuous replenishment of the 4C maximum-density salt water slowly sinking around the South Pole that creates the deep ocean currents. Turbulent warming of the 4C layer in or near the tropics causes rising convection from the 4C boundary layer, which affects the rate of replenishment from both poles. It is a very elegant thermostat for the deep oceans: laminar replenishment versus turbulent withdrawal.

The Tropopause is similar but different. Radiant forcing from non-condensing greenhouse gases balances the conductive, convective and latent cooling response. Antarctic winter conditions are controlled primarily by the non-condensable radiant effect, which results in a lowest possible temperature equivalent to the amount of non-condensable radiant forcing for that temperature range.

The lowest temperature ever recorded in the Antarctic is about -90C, and that would be the lowest temperature in the Tropopause if it were not for non-radiant energy flux. The average temperature of the Tropopause is closer to -60C, which indicates that the average impact of non-radiant energy flux in the Tropopause is on the order of 30C. That is a fairly large buffer range. In addition, the Tropopause altitude can vary, so for short-term perturbations the temperature can drop to -100C, possibly a little lower. The Tropopause temperature cannot decrease much further because of stratospheric warming, caused by ultraviolet solar radiation interacting with oxygen in the dry region above the Tropopause.

Non-interactive outgoing longwave radiation, the atmospheric window portion of the spectrum, is smaller in the Antarctic because of the Stefan-Boltzmann relationship and should be on the order of 9 Wm-2 at -90C, implying that the actual CO2 portion of the Tropopause limit is on the order of 55 to 60 Wm-2. As more CO2 forcing is applied, the percentage of non-interactive OLR would increase, offsetting approximately 15% of the impact.
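The arithmetic behind the 55 to 60 Wm-2 figure can be checked with the Stefan-Boltzmann law. This sketch treats the emitting surface as a blackbody (emissivity 1, an assumption) and takes the 9 Wm-2 window value from the paragraph above as given; it shows that total emission at -90C is about 64 Wm-2, that removing the window leaves about 55 Wm-2, and that the window is roughly 14% of the total, close to the ~15% offset mentioned.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4


def blackbody_flux(temp_c):
    """Blackbody emission in W/m^2 for a temperature in degrees C."""
    temp_k = temp_c + 273.15
    return SIGMA * temp_k ** 4


total = blackbody_flux(-90.0)   # roughly 63.8 W/m^2
window = 9.0                    # the post's estimate for the atmospheric window
remainder = total - window      # roughly 54.8 W/m^2
print(round(total, 1), round(remainder, 1), round(window / total, 2))
# 63.8 54.8 0.14
```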

As surface temperature increases, the non-interactive response would continue to offset approximately 15% of the non-condensable GHG forcing, and the conductive/convective and latent fluxes would offset more as temperature, gas mix and pressure change. Convection, which is a function of temperature, density and conductive properties, is the non-linear part of the puzzle that causes the uncertainty from a pure energy perspective, while changes in albedo, both surface and atmospheric, just add a new layer of complexity.

In both the 4C and Tropopause boundary layers, virtually immeasurable changes can have a significant impact on the heat sink capacity of each, and the two have extremely different time constants. More complexity, making this an outstanding puzzle!

So this post hopefully will explain why I compare these two thermodynamic layers as I do, though the mechanisms are very different.

Friday, February 24, 2012

Peter Gleick and the Heartland Dust Up.

I am not even going to bother providing links, but Peter Gleick seems to have a hard on for the Heartland Institute big enough to cause him to risk his career. The Heartland Institute is a conservative think tank that takes on just about any cause that tends to push the envelope of common sense legislation.

As far as I am concerned, that is a worthy cause. Like it or not, people are allowed to have opinions and have a God (or evolutionary) given right to be stupid. One of the basic principles lost on most warm and fuzzy do-gooders is that people have just as much right to fail as they do to succeed. Face it, if it were not for people too lazy, stupid, or gullible to do everything for themselves, there would be no success stories. If everyone was a genius, who would need a genius?

Some people seem to believe that success is pointing out each and every flaw that another group of people may have. Vegetarians are excellent in pointing out that meat eaters are more likely to die at a younger age than vegetarians. If they had their way, they would criminalize meat consumption.

Health food fans would point out that butter, fat, ice cream, Danishes and the worst health offender of all, the Bacon-Cheese Biscuit are bad for you and would probably not get up in arms if any or all of these bad things were illegal. Then everyone could be healthy and pumping iron while drinking some nasty looking greenish red juice.

Each group, once a little radical thinking creeps in, views Utopia as being filled with like-minded people. Groups like the Heartland Institute help ensure that Bacon-Cheese Biscuit lovers can enjoy a Bacon-Cheese Biscuit not only in the privacy of their own home, but out in public for all to see how foolish they are, sacrificing their chance to be the next Sports Illustrated swimsuit model. Unless you are of the opinion that everyone must agree on everything, you should appreciate groups like the Heartland Institute that endeavor to allow people to be themselves.

Do you need to agree with the Heartland Institute's choice of causes? Of course not; you can have your own causes only because there are enough stupid people who don't believe everything that you believe.

Many of the people that despise the Heartland Institute probably believe that something that is not totally legal in our society should be legalized. There is probably an organization trying to legalize whatever that may be. Some will be successful, some won't, but they all have the right to try. As long as they do not infringe on the rights of others in the process.

Is that not a cool idea? As long as you don't infringe on the rights of others, do what you like and enjoy life?

This brings me back to the right to be stupid. The warm and fuzzies think that anything that may possibly cause them some manner of harm should be illegal. So if you do not take every precaution to not do them any manner of harm, you should suffer the consequences.

If you happen to drive a vehicle without wearing your seat belt, you DESERVE to be fined. Not because that directly harms them, but because it may cost them more taxes to provide for health care. Having sex with more than one life partner also can be proven statistically to do much more harm to the public in general than driving sans seat belt. The mortality rate of male homosexuals is greater than the national average and their sexual life style may pose a risk to the public if they are not registered as homosexuals or at least required to wear lavender and sing show tunes in public. If you smoke, you are of course a deviant and should be taxed and shunned by society, and fined if you happen to light up in public. If you drink more alcohol you are likely to do something that will cause harm to someone or yourself, so that should be illegal. If you have red hair and fair skin, you have a greater risk of cancer and of being a comedian. If you are Jewish, you have a greater risk of being a doctor, lawyer, banker or comedian. If you are black, you have a great risk of being an athlete, musician or comedian. If you are a warm and fuzzy that thinks everyone should be like you, you are a comedian, only you haven't realized it quite yet.

The whole object of a society is not to have one winner, but to have diversity. Good, bad or indifferent, as long as your real rights are not infringed upon, shut up, enjoy life and let others do the same.

Rant over.

Wednesday, February 15, 2012

Even More Calibrating Imperfection

What happens when you compare the Atlantic Multidecadal Oscillation (AMO) index, the Taymyr Peninsula tree ring reconstruction, the Central England Temperature record, Volcanic Explosivity Index values and a few Siberian instrumental records? You crash the heck out of open-source spreadsheets! You do get some interesting stuff, though.

One of the biggest problems was that the AMO and the Taymyr series had a pretty good correlation but a few things that looked goofy. The CET also matched in a lot of places but missed the boat in quite a few. So I averaged the AMO and the CET and compared them to the volcanic data available from the Global Volcanism Program. The older major eruptions have a lot of estimates and questions about their dates. I just used the estimated date and best guess for the magnitude of each eruption, and used a 17-year moving average. Pretty crude, but it gives a rough idea of the impact of volcanism on the AMO, mainly for the equatorial eruptions, and on the CET (March-April-May)/Taymyr for the smaller northern hemisphere eruptions.
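The volcanic smoothing step might look like the sketch below. Two choices here are assumptions, not necessarily what the spreadsheet did: each year takes the largest VEI recorded in it (0 if none), and the 17-year average is centered rather than trailing. The eruption records in the example are illustrative only.

```python
def annual_vei_series(eruptions, first_year, last_year):
    """Build one value per year from (year, vei) records; max VEI per year, else 0."""
    series = [0] * (last_year - first_year + 1)
    for year, vei in eruptions:
        if first_year <= year <= last_year:
            i = year - first_year
            series[i] = max(series[i], vei)
    return series


def centered_moving_average(values, window=17):
    """Centered moving average; the first and last window//2 points are None."""
    if window % 2 == 0:
        raise ValueError("use an odd window so the average can be centered")
    half = window // 2
    out = [None] * len(values)
    for i in range(half, len(values) - half):
        out[i] = sum(values[i - half:i + half + 1]) / window
    return out


# Illustrative records: two eruptions in 1815, one in 1883.
vei = annual_vei_series([(1815, 7), (1815, 4), (1883, 6)], 1800, 1900)
smoothed = centered_moving_average(vei, 17)
```

A centered window keeps the smoothed dip lined up with the eruption year; a trailing window would shift it later by about eight years.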

The Average (1) curve is the average of the anomalies for three Siberian surface stations: Ostrov Dikson near Taymyr, Turuhansk a little south of Taymyr, and Pinofilovo, which is in the major farming region of Siberia. The Pinofilovo station is plotted by itself to highlight how it tends to break from the trends starting around 1900. This is far from conclusive evidence that agricultural expansion is a major cause of Siberian warming, but it is getting closer to being somewhat convincing.
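Averaging station anomalies usually means converting each station to departures from its own base-period mean before averaging, so a colder site does not drag the average down. A minimal sketch, with the base period and function names as assumptions (the post does not give them); years where a station is missing are averaged over the stations that do report.

```python
def station_anomalies(series, base_start, base_end):
    """Convert one station's {year: temp} into anomalies from its base-period mean."""
    base = [t for y, t in series.items() if base_start <= y <= base_end]
    if not base:
        raise ValueError("station has no data in the base period")
    mean = sum(base) / len(base)
    return {y: t - mean for y, t in series.items()}


def average_anomalies(stations, base_start, base_end):
    """Year-by-year mean of station anomalies, over whichever stations report."""
    anomalies = [station_anomalies(s, base_start, base_end) for s in stations]
    years = sorted(set().union(*anomalies))
    result = {}
    for y in years:
        vals = [a[y] for a in anomalies if y in a]
        result[y] = sum(vals) / len(vals)
    return result


# Made-up data: a warm and a cold station with the same year-to-year change.
s1 = {1950: 10.0, 1951: 12.0}
s2 = {1950: -5.0, 1951: -3.0}
print(average_anomalies([s1, s2], 1950, 1951))  # {1950: -1.0, 1951: 1.0}
```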

Russian records are pitiful, but there was a general expansion into the Siberian region around 1900 and another push starting in the late 1950s. Russian production per hectare was about 30% to 50% lower than in the US, even though Russia exported more than the US in the early 1900s. One report for 2011 stated that Russia was planting 500,000 hectares of winter wheat. That is only 5,000 square kilometers, but it should be about half of the acreage set aside for winter wheat, allowing for crop rotation. There is also considerable barley, sugar beet and rye for pasture and rotation, with about 8% to 10% of the total land area of Russia devoted to arable land. This is a smaller area than in the US but near the area in Canada used for grain crops. Canada also has a higher temperature trend in its interior farm belt than the global average.

The major albedo impact of large-scale grain farming lasts two to three months per year, around planting and harvest, when the land can absorb around 20 Wm-2 more than it would in its virgin state. Since Russian records are so bad, I will have to use Canadian lands near the same latitude and attempt to interpolate the impact to Russia.

More: From Wikipedia, the total land under cultivation is 17,298,900 km^2, or 11.61% of the land surface area. Russia has 1,192,300 km^2, Canada 474,681 km^2, the United States 1,669,302 km^2 and China 1,504,350 km^2, totaling 4,840,633 km^2, which is 3.25% of the land surface area or 0.95% of the total surface of the globe. This doesn't include India, the second-largest agricultural country, as I am mainly interested in the impact above latitude 45 degrees North. It should include Kazakhstan and Ukraine, with nearly 600,000 km^2, but I intend for their area to offset the southern portion of the US.
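The percentages above can be checked directly. This sketch uses only the areas quoted in the paragraph, derives the land area implied by "17,298,900 km^2 = 11.61%", and assumes a total Earth surface area of about 510.1 million km^2.

```python
# Cultivated areas in km^2, as quoted above from Wikipedia.
cultivated = {
    "Russia": 1_192_300,
    "Canada": 474_681,
    "United States": 1_669_302,
    "China": 1_504_350,
}
WORLD_CULTIVATED = 17_298_900            # km^2, quoted as 11.61% of land
LAND_AREA = WORLD_CULTIVATED / 0.1161    # implied land area, about 149 million km^2
GLOBE_AREA = 510.1e6                     # approximate surface area of the Earth, km^2

subtotal = sum(cultivated.values())
print(subtotal)                               # 4840633
print(round(100 * subtotal / LAND_AREA, 2))   # 3.25
print(round(100 * subtotal / GLOBE_AREA, 2))  # 0.95
```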

On a yearly average, the northern-latitude albedo change due to agriculture would have to produce slightly over 1 Wm-2 to offset 50% of the estimated warming due to increased atmospheric CO2. Since the major impact of agriculture occurs only during the growing season, peaking for only about 2 of those months, the impact during that time would need to be approximately 6 Wm-2.
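Converting between an annual mean and a forcing that only acts for part of the year is a simple scaling by 12 over the number of active months. This sketch assumes the effect is zero outside the active months, and neither function accounts for what fraction of the region is actually farmed.

```python
def seasonal_equivalent(annual_mean_forcing, active_months):
    """Forcing needed during the active months to produce a given annual mean."""
    return annual_mean_forcing * 12 / active_months


def annual_mean(seasonal_forcing, active_months):
    """Annual mean of a forcing that is only present for part of the year."""
    return seasonal_forcing * active_months / 12


print(seasonal_equivalent(1.0, 2))  # 6.0 W/m^2, the estimate above
print(annual_mean(20.0, 3))         # 5.0 W/m^2 for 20 W/m^2 over three months
```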

Monday, February 13, 2012

Calibrating Imperfection?

The Taymyr Peninsula in Siberia has a reasonably long Russian larch tree ring proxy compiled by Jacoby and gang, dated 2006 and archived on the NOAA paleo site. The series ends in 1970 because Jacoby and gang determined that the proxy diverged from temperature starting around that date.

Ostrov Dikson is a small Russian town located on an island at the west side of the Taymyr Peninsula. It has an airport and a temperature record starting in 1918. Three data points are missing from the March through May average; I filled those in with the average of the preceding and following values, just for the spreadsheet.
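The gap filling described above, sketched in Python with missing values as `None`. Only isolated gaps with a real value on each side are filled; this sketch deliberately leaves longer runs and gaps at either end alone, which is an assumption about how such cases should be handled.

```python
def fill_single_gaps(values):
    """Fill isolated None values with the mean of their two neighbours."""
    filled = list(values)
    for i in range(1, len(values) - 1):
        prev_v, next_v = values[i - 1], values[i + 1]
        if values[i] is None and prev_v is not None and next_v is not None:
            filled[i] = (prev_v + next_v) / 2
    return filled


print(fill_single_gaps([-12.5, None, -11.5, -13.0]))  # [-12.5, -12.0, -11.5, -13.0]
```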

Using the period of overlap, 1918 to 1970, I shifted the Ostrov series so that the average of the overlap period aligned with the Taymyr data. The yellow mean for Taymyr and the green mean for Ostrov are shown on the chart.
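Aligning two series on their overlap amounts to adding one constant offset. A minimal Python sketch, assuming each series is stored as a year-to-value dictionary (my choice of data structure); the example numbers are hypothetical:

```python
from statistics import mean
from typing import Dict


def align_on_overlap(ref: Dict[int, float], other: Dict[int, float],
                     start: int, end: int) -> Dict[int, float]:
    """Shift `other` by a constant so its mean over start..end (inclusive,
    using only years present in both series) equals the mean of `ref`."""
    years = [y for y in range(start, end + 1) if y in ref and y in other]
    if not years:
        raise ValueError("no overlapping years in the requested period")
    offset = mean(ref[y] for y in years) - mean(other[y] for y in years)
    return {y: v + offset for y, v in other.items()}


# Hypothetical values: the shift is computed on 1918-1970 only,
# but applied to every year of the Ostrov series
taymyr = {1918: -0.2, 1919: 0.1, 1920: 0.4}
ostrov = {1918: -14.0, 1919: -13.5, 1920: -13.1, 1971: -12.6}
aligned = align_on_overlap(taymyr, ostrov, 1918, 1970)
```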

The mean for Ostrov Dikson from 1970 to present is shown in red and is approximately 0.6 degrees higher than the 1918 to 1970 mean. The full series mean of Taymyr, in blue, is about 0.4 degrees lower than the 1918 to 1970 mean.

The best average growth rate for the Taymyr series is near the 1918 to 1970 mean, with 1918 to 1950 being close to the optimum growth rate for the series. That should indicate that the period from 1918 through 1950, and possibly through 1970, was near the optimum conditions (temperature, precipitation, etc.) for this type of tree.

This chart shows the entire period of the Taymyr tree ring series.

Above, I have added the Central England Temperature (CET) series, centered on the 1918 to 1970 period so that all three series have a common baseline. Considering that trees are not thermometers, the match is pretty good. With the CET series added, there are periods where temperatures above the mean correspond with reduced tree growth and periods where temperatures below the mean also correspond with reduced tree growth. While the tree ring series do not provide a great deal of temperature information, they do have the potential to provide more information about what the average global temperature is, at least as I interpret the data.

Note: the CET data is also March through May.

Sunday, February 12, 2012

Comparing Imperfection

If anything is imperfect, it is the temperature series and the tree ring reconstructions of temperature. In the chart above I have the Taymyr Peninsula tree ring series that I got from the NOAA database, provided by Jacoby et al., the Central England Temperature series provided by the UK Met Office and the GISS global average temperature anomaly series provided by NASA GISS. There is also an average series, which is the average of the Taymyr and CET series for the period 1600 to 1970 where both series have data. The means of the series (horizontal lines) were lined up by shifting the Taymyr and GISS series up to the mean of the CET, with each mean based on the full length of its series. The GISS series has a much greater upward slope in this chart.
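The averaged series described above only uses years in which both Taymyr and CET have data. A minimal Python sketch of that step, assuming the two series have already been aligned and are stored as year-to-value dictionaries (an assumption about data layout, not how the spreadsheet was necessarily built):

```python
from typing import Dict


def average_common_years(a: Dict[int, float], b: Dict[int, float]) -> Dict[int, float]:
    """Year-by-year average of two already-aligned series, restricted to the
    years in which both series have a value."""
    common = sorted(set(a) & set(b))
    return {y: (a[y] + b[y]) / 2.0 for y in common}


# Hypothetical fragments: only 1601 is present in both series
taymyr = {1600: 1.0, 1601: 3.0}
cet = {1601: 5.0, 1602: 7.0}
print(average_common_years(taymyr, cet))  # {1601: 4.0}
```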

In this chart the start date is 1800, which is when I suspect agricultural expansion may have played some part in the warming of the northern hemisphere. In this case, GISS and the average are very similar in slope. The Taymyr slope is the greatest, which I suspect is due to better growing conditions, in part due to agricultural expansion in Europe. Note that the means of the series are different because of the changed periods.

This chart uses an 1880 start for all series. Note that the means are even more different because of the shorter period.

This chart has the 1880 start with the means adjusted for the period. The GISS mean had to be shifted upward by about 0.4 degrees to align the means, and the Taymyr mean required a downward adjustment of about 0.1 degrees.

While this proves absolutely nothing, it does indicate to me that there should be some uncertainty in what "average" is for the global and northern hemisphere temperatures.

Update: The following is a full series length chart, but with the 1880 to end-of-series mean used to align the series.

Note: chart was updated due to error.

Saturday, February 11, 2012

Comparing Perfection

Perfection is a tool commonly used in thermodynamics, and in science in general, to determine the limits of expectation. Perfection does not exist, but functions can approach perfection.

The concept of perfection is on occasion misinterpreted, not only by the lay public but also by the scientists using the concept. The Carnot engine is a classic example of perfection as a tool. A Carnot engine converts heat into work with the maximum efficiency possible between a given hot source and cold sink. The efficiency of a real engine can be expressed as a percentage of the Carnot efficiency. The shortfall, the work lost to entropy generation, is the difference between perfection and reality. In studying the climate of Earth, perfection has to be considered, but there is another puzzle piece that also needs to be considered: perfect imperfection, or maximum entropy.
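For reference, the Carnot limit between a source at absolute temperature Th and a sink at Tc is 1 - Tc/Th, and a real engine's efficiency can be quoted as a fraction of that limit. A minimal Python sketch; the 600 K, 300 K and 30% figures are hypothetical, chosen only to illustrate the ratio:

```python
def carnot_efficiency(t_hot_k: float, t_cold_k: float) -> float:
    """Maximum (Carnot) efficiency between two reservoirs, temperatures in K."""
    if t_cold_k <= 0 or t_hot_k <= t_cold_k:
        raise ValueError("need t_hot_k > t_cold_k > 0")
    return 1.0 - t_cold_k / t_hot_k


def fraction_of_carnot(actual_efficiency: float, t_hot_k: float, t_cold_k: float) -> float:
    """Real engine efficiency expressed as a fraction of the Carnot limit."""
    return actual_efficiency / carnot_efficiency(t_hot_k, t_cold_k)


# Hypothetical engine: 600 K source, 300 K sink, 30% actual efficiency
print(carnot_efficiency(600, 300))         # 0.5
print(fraction_of_carnot(0.30, 600, 300))  # 0.6
```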

Maximum entropy may be considered as the point of worst performance where the system does not destroy itself. For an object radiating electromagnetic energy to space, that may be the ideal gray body.

All objects in space will emit the same amount of energy they absorb over sufficient time. A perfect black body emits according to the Stefan-Boltzmann equation, and a gray body emits E=e*sigma*(T)^4, where sigma is the Stefan-Boltzmann constant, E is the emitted energy flux in joules/sec (watts) per square meter, T is the temperature in degrees Kelvin and e is the emissivity, the fraction of black body emission that the less than ideal gray body actually emits. For the purposes of comparison, a gray body with an emissivity of 50% could be considered an ideal gray body.
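The Stefan-Boltzmann relation used throughout these posts can be evaluated with a few lines of Python. This is a sketch using the CODATA value of sigma; `sb_flux` and `sb_temperature` are names I made up for illustration. At e = 1 it gives about 390Wm-2 at 288K, about 74Wm-2 at 190K, about 62Wm-2 at 182K and about 51Wm-2 at 173K.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4


def sb_flux(t_kelvin: float, emissivity: float = 1.0) -> float:
    """Radiant flux (W m^-2) of a gray body: E = e * sigma * T^4."""
    return emissivity * SIGMA * t_kelvin ** 4


def sb_temperature(flux_wm2: float, emissivity: float = 1.0) -> float:
    """Invert E = e * sigma * T^4 to get the temperature in K."""
    return (flux_wm2 / (emissivity * SIGMA)) ** 0.25


for t in (288.0, 190.0, 182.0, 173.0):
    print(f"{t:.0f} K -> {sb_flux(t):6.1f} W m^-2")
```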

Using the Stefan-Boltzmann equation, the chart above shows the relationship of emission and transmission. In this case the surface energy is represented by the E-value, the energy emitted at the surface, and the T-value is the energy transmitted to space from some point above the surface. If there were no atmosphere, the E-value would be constant. With an atmosphere, the energy emitted from the surface interacts with the atmosphere, transferring energy into heating the atmosphere. The point where the E-value and the T-value are equal is what may be considered a perfect gray body.

Also on the chart is the R-value, the energy one would expect to be transferred from a source at one temperature to a sink at another temperature. The R-value is equal to the difference in temperature between the source of the energy flux and the sink of the energy flow, divided by the energy flux through the barrier or layers of barriers.

Since the atmosphere of the Earth has a Tropopause, a layer in the lower atmosphere with a minimum temperature, the R-value is based on that approximate temperature, 182K at 62Wm-2 equal to approximately -91 degrees C. This value is slightly lower than the minimum temperature measured on the surface of the Earth and slightly higher than the minimum temperature measured in the Tropopause. If the R-value intersected the crossover of the E and T values, I would consider that to be a perfect gray body adjusted for thermal conductivity.

In this chart, the E and T values have been shifted upward so that all three curves cross at the same point, approximately 0.60, a value very close to the measured emissivity of the Earth viewed from space. Following the E-value to the right toward 288K, the surface emissivity reaches approximately 0.9996 at 335Wm-2 and 277K. So for this simple model, since the value for an ideal black body cannot exceed 1, the "effective" surface emission temperature is approximately 277K. The estimated actual surface temperature of the Earth is 288K, with emission of approximately 390Wm-2; the difference, 11K and 55Wm-2, could be considered energy flux from the surface that does not interact with the atmosphere.

This model provides some interesting potential. First, the crossover is at 0.6 instead of 0.609, the actual measured average emissivity of the Earth. The temperature of the crossover is approximately 208K with a flux of approximately 102Wm-2, indicating an imbalance of approximately 0.9Wm-2, the modeled value of the energy imbalance used by Kiehl and Trenberth in their Earth energy budget.

By adjusting the curves so that they intersect at 0.609, there is no energy imbalance. This required changing the tropopause temperature by 0.2K and changing the T and E offset value from 0.185 to 0.20, which is the Planck parameter or Planck response made somewhat famous in the Monckton/Lucia dust-up.

To show the effect that relatively small changes in the tropopause temperature can have on the Planck response and energy imbalance:

In the above chart the Tropopause temperature used is 190K.

In this chart the tropopause temperature is shifted to 173K, or -100C.

In the last two charts a %I curve was added to illustrate the relative changes in thermal conductivity of the atmosphere with tropopause temperature.

Friday, February 10, 2012

Non-Linear Fun with the Ideal Gray Body

I added a new curve to the gray body chart, %I, for the percentage of conductive flux. It is a rough approximation, but close enough for this bit of fun. As you can see, the blue curve is the R-value based on a Tropopause temperature of 182K (-91C), or 62Wm-2 at that point. I selected this because it is the rough minimum temperature, though some of the satellite data show 67Wm-2 as the minimum.

This plot is with the Tropopause temperature dropped to -100C (173K), 51Wm-2, which happens during deep convection. Notice that the blue R-value curve has shifted. If the T and E curves were adjusted to cross at the R-value point, the TOA emissivity would increase from the normal value to about 0.7.

This time the plot is based on a Tropopause temperature of 190K (-83C), 74Wm-2. Pretty neat huh? The TOA emissivity can vary from about 0.5 with rapid cooling to 0.7 with Tropopause punch-through during deep convection. I need to double check my model setup, but so far it seems fairly close for illustrating the non-linear complexities involved in measuring the net flux at the Top of the Atmosphere.

So if you ever wonder why it is difficult to measure 0.9Wm-2 of imbalanced forcing, there ya go. It is a seriously difficult critter to get a rope on :)

More Ideal Gray Body Fiddling

As I mentioned in another post, there is an ideal black body, so I think we need an ideal gray body.

The above chart is my idea of an ideal gray body. When the T-value is 0.5, or 50 percent of the surface energy is radiated to space, it is an ideal gray body. I am using T-value and E-value because there is some possible confusion. The E-value is the energy emitted to space from the layer or surface and the T-value is the energy transmitted to the next layer. The chart also includes the R-value, or the energy transmitted per unit temperature. The R-value is always greater than the E-value because of the conductive energy transfer between molecules in a fluid. The R-value does approach the E-value at extreme temperatures.

Since conductive transfer is unavoidable, or we would have a black body, the point where the T-value, E-value and R-value intersect should be the realistic ideal value for a gray body.

In the chart above, the T and E values have been shifted by 0.185 to create the intersection with the R-value. Interestingly, the point of intersection is approximately 0.6, very close to the TOA emissivity of the Earth. At the surface emission layer, we have the following values:

Flux: 335Wm-2, Temperature: 277K, R-value: 0.349, T-value: 0.185, E-value: 0.9996

This should indicate that the "effective" surface radiant layer is 277K at 335Wm-2 with an R-value of 0.349 W/K. The T-value of 0.185 should be the Planck response, which is not all that useful, but 1 minus the Planck response, 1-0.185=0.815, would be the "effective" emissivity of the surface radiant layer.

As the actual surface characteristics are approximately 288K @ 390Wm-2, 390-335=55Wm-2 would not interact with the atmosphere. That could be the energy emitted through the atmospheric window, latent energy shift or a combination of the two.

The R-value corresponds to about 117Wm-2, very close to the value determined using the modified Kimoto equation. That would be the combined conductive flux, which would include some or all of the latent flux from the surface. With latent flux estimated at 80Wm-2, the sensible conductive flux would be 117-80=37Wm-2.

While this is just a first attempt to use an ideal gray body model, it does compare well to observations of the Earth's atmosphere. Hopefully, this will somewhat support the modified Kimoto equation, which is a lot easier to use. :)

There are of course potential errors and adjustments required, but I will post this now so y'all can have a good laugh while I fine tune my model.

Oh, I nearly forgot: 335Wm-2 from the "effective" radiant layer looking up minus 117Wm-2 equals 218Wm-2, the approximate value of the true downwelling long wave radiation :)
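The bookkeeping in this post can be collected in one place. A minimal Python sketch using the chart values quoted above; the step that turns the 0.349 R-value into about 117Wm-2 by multiplying it by the 335Wm-2 layer flux is my assumption, since 0.349 x 335 is about 117, and is not stated explicitly in the post:

```python
# Values read from the gray body chart and quoted in the text above
surface_flux, surface_temp = 390.0, 288.0      # actual surface, W m^-2 and K
effective_flux, effective_temp = 335.0, 277.0  # "effective" radiant layer
r_value = 0.349
latent = 80.0                                  # estimated latent flux, W m^-2

non_interacting = surface_flux - effective_flux   # 55 W m^-2
temp_gap = surface_temp - effective_temp          # 11 K
conductive = r_value * effective_flux             # ~117 W m^-2 (assumed relation)
sensible = round(conductive) - latent             # ~37 W m^-2
downwelling = effective_flux - round(conductive)  # ~218 W m^-2

print(non_interacting, temp_gap, round(conductive), sensible, downwelling)
```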

Thursday, February 9, 2012

Models versus Observations

The latitudinal temperature change above, based on GISS data for 2011 with an 1896 to 1906 baseline, is not perfect, but should give a reasonable idea of the climate change since 1900.

The above is the temperature change simulated by GISS based on constant sea surface temperature. As you can see, it differs considerably in the tropics.

The chart for the RSS tropical data from 1979 indicates a warming of approximately 0.1 degrees per decade. The data from 1994 appears to indicate a shift to 0.04 degrees per decade. Both are considerably less than the simulated 0.26 degrees per decade.
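Per-decade trends like these are commonly computed as an ordinary least-squares slope. A minimal Python sketch of that calculation; it is not a re-run of the RSS numbers, and the usage below feeds it synthetic data built with a known 0.1 degree per decade slope:

```python
from typing import Sequence


def trend_per_decade(years: Sequence[float], values: Sequence[float]) -> float:
    """Ordinary least-squares slope of values against years, scaled to per decade."""
    n = len(years)
    if n != len(values) or n < 2:
        raise ValueError("need two equal-length sequences with at least 2 points")
    mx = sum(years) / n
    my = sum(values) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(years, values))
    sxx = sum((x - mx) ** 2 for x in years)
    return 10.0 * sxy / sxx


# Synthetic series rising 0.01 degree per year, i.e. 0.1 per decade
yrs = list(range(1979, 2012))
vals = [0.01 * (y - 1979) for y in yrs]
print(round(trend_per_decade(yrs, vals), 3))  # 0.1
```

Run separately on the 1979 and 1994 start dates of a real series, the same function would give the two trends being compared.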

With the apparent narrowing of the tropical belt associated with the PDO change to negative, and the implied relationship of the tropical belt varying with longer term solar TSI changes, it is likely that the post-1994 trend will remain the same or decrease over the next solar cycle.

Update: fixed some of the typos

Tuesday, February 7, 2012

The Ideal Gray Body?

Not being able to figure out the exact value of radiant forcing in an atmosphere is ridiculous. I mean really! We have pretty good approximations of black bodies, so why so much grief over a gray body, a body with a radiantly active atmosphere? Sounds kind of stupid for so many smart guys working on a simple problem.

Well, since we don't know, I am going to define an ideal gray body. An ideal gray body has a surface emissivity of 1, a perfect black body, and an atmospheric emissivity of 0.5, so exactly half of its radiant energy is converted to heat while leaving the surface. Cool huh?

So if the Earth were an ideal gray body, its emissivity would be 0.5. Looking from the surface, if the surface emission were 400Wm-2, then the average radiant layer would be 200Wm-2. Since the radiant energy has to cool above as much as it warms below, the TOA would receive 100Wm-2 plus half the energy from the average radiant layer, another 100Wm-2, for a measurable TOA emission of 200Wm-2. Remember, half of the 200 is required to create the 100Wm-2, with half passing through. I went through this with the multi-disc model, so I will add that link later.

So why this crazy brainstorm? Well, the TOA emissivity is about 0.61, so the Earth is neither an ideal black nor an ideal gray body. Since it misses perfection by about 0.11 out of 0.5, about 22% of the energy transfer is not playing the ideal game. If the surface is 400Wm-2, 200Wm-2 would be the ideal emission to space. If the TOA emission is 240Wm-2, the ideal surface emission would be 480Wm-2. 22% of the two emissions to space, 200 and 240Wm-2, would be 44 or 52.8Wm-2, depending on which is the better baseline value.

Those both make sense. The 44Wm-2 is approximately the surface to space energy through the atmospheric window and the 52.8 is approximately the cloud level to space energy through the atmospheric window. So the Earth is about 78% gray body and 22% black body.

78% of the surface flux would be 312Wm-2. Since perfect gray bodies emit 50% of their surface energy, 156Wm-2 would be the apparent emission of the ideal gray body Earth viewed from space. 156Wm-2 would also be the radiant portion of the greenhouse effect.

The surface is warmer than that, though, so more energy would have to be transferred to the atmosphere. To account for the 52.8Wm-2 lost through the atmospheric window, at least half, 26.4Wm-2, would need to be transferred to the atmosphere by...? I would think conduction.
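The 22% bookkeeping in this post can be reproduced step by step. A minimal Python sketch using only the numbers quoted in the text (0.61 TOA emissivity, 0.5 ideal, 400Wm-2 surface and 240Wm-2 TOA); the variable names are mine:

```python
ideal_eps = 0.5   # ideal gray body emissivity
toa_eps = 0.61    # approximate TOA emissivity of the Earth
miss_fraction = (toa_eps - ideal_eps) / ideal_eps  # ~0.22

surface = 400.0                  # W m^-2
ideal_toa = ideal_eps * surface  # 200 W m^-2 ideal emission to space
toa = 240.0                      # W m^-2 measured TOA emission
ideal_surface = toa / ideal_eps  # 480 W m^-2

window_from_surface = miss_fraction * ideal_toa  # ~44 W m^-2
window_from_cloud = miss_fraction * toa          # ~52.8 W m^-2
gray_part = (1 - miss_fraction) * surface        # ~312 W m^-2
gray_emission = ideal_eps * gray_part            # ~156 W m^-2
conducted = window_from_cloud / 2                # ~26.4 W m^-2

print(round(miss_fraction, 2), round(window_from_surface, 1),
      round(window_from_cloud, 1), round(gray_emission, 1), round(conducted, 1))
```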

Mid Latitudes and Radiant Impact

The above charts are thanks to the "Forecast" website at the University of Chicago and its MODTRAN program. This time we are looking at the mid latitudes. The first chart, at 100ppm CO2, also shows the basic stuff, particularly the 4km looking-up setting. The second chart is the same but with 400ppm CO2, and the third is with 800ppm. I selected 4 kilometers as an estimated average radiant layer and will assume the tropopause can provide a sink temperature of -89C. The initial surface temperature is zero C, so the atmospheric R-value would be 89 degrees/250.4Wm-2=0.355 and the emissivity of the tropopause would be 0.207, unitless.

For the 400ppm chart, Iout is 168.5 versus the initial 160.1 at 100ppm. That implies an 8.4Wm-2 increase at the radiant layer, so double that, 16.8Wm-2, at the surface. That should increase the surface temperature by 3.6 degrees and change the R-value to 0.333 and the emissivity to 0.1961. For the final 800ppm, Iout increases to 172.95Wm-2, an increase of 12.85 from 100ppm, producing a 25.7Wm-2 increase at the surface, for a temperature increase of 5.4C, an R-value of 0.322 and an E-value of 0.191, still unitless.

The initial R-value was 0.355 at 250.4Wm-2. When the temperature increases by 5.4C, the R-value would only allow the flux to increase by 5.4/0.355=15.2Wm-2 instead of the 25.7Wm-2 indicated by the radiant change. That would result in a surface temperature increase of 2.18C instead of 5.4C, or 40% of the estimate based solely on radiant forcing.
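Most of the arithmetic in the last three paragraphs can be checked with Stefan-Boltzmann. A minimal Python sketch, assuming a 273.15K (0C) surface, a 184.15K (-89C) sink and the MODTRAN Iout values quoted above; it reproduces the 0.355 R-value, the 0.207, 0.196 and 0.191 emissivities, the 3.6C and 5.4C warming and the 15.2Wm-2 figure, and stops there:

```python
SIGMA = 5.670374419e-8  # W m^-2 K^-4


def sb_flux(t_k: float) -> float:
    return SIGMA * t_k ** 4


def sb_temp(flux: float) -> float:
    return (flux / SIGMA) ** 0.25


T_SURFACE = 273.15           # 0 C initial surface
T_TROPOPAUSE = 273.15 - 89   # -89 C assumed sink
R0 = 89 / 250.4              # initial atmospheric R-value, ~0.355

iout = {100: 160.1, 400: 168.5, 800: 172.95}  # MODTRAN Iout at 4 km looking up

e_surface0 = sb_flux(T_SURFACE)
eps0 = sb_flux(T_TROPOPAUSE) / e_surface0  # ~0.207

results = {}
for ppm in (400, 800):
    delta_surface = 2 * (iout[ppm] - iout[100])  # doubled impact at the surface
    e_new = e_surface0 + delta_surface
    d_t = sb_temp(e_new) - T_SURFACE             # warming in K
    eps = sb_flux(T_TROPOPAUSE) / e_new          # tropopause/surface ratio
    results[ppm] = (delta_surface, d_t, eps)

limited_flux = 5.4 / R0  # ~15.2 W m^-2 allowed by the initial R-value

for ppm, (dflux, d_t, eps) in results.items():
    print(f"{ppm} ppm: +{dflux:.1f} W m^-2, +{d_t:.1f} C, emissivity {eps:.3f}")
```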

That happens to agree rather well with the observed change, but it does not mean that this is the solution of the CO2 forcing issue. Every variable in a dynamic system has a maximum impact that is not sustainable. This only indicates a range of combined relative radiant and conductive impact, from 2.18C to 5.4C for a double doubling. The approximate glacial concentration would be the first doubling (190 is almost 200), we are now at the second doubling (390 is almost 400) and 800ppm would be the next doubling.

I will attempt to fine tune this a little, but global impact is not all that meaningful, regional should be more informative.

Monday, February 6, 2012

Antarctic Uncertainty

The Antarctic has stubbornly remained at about the same average temperature for at least the last 50 years despite the increase in atmospheric CO2. Some people might entertain the idea that warnings of polar amplification of the Greenhouse Effect are nonsense. That may be the case, but since stability is not a common feature of nonlinear dynamic systems, it is an interesting place to study.

Thanks to MODTRAN and the University of Chicago, we can play with the radiant spectrum to ponder what might be going on down there. The three charts above are for no clouds, a subarctic winter atmosphere and a -49.5C surface temperature offset, with the sensor located at 2 kilometers looking up and CO2 concentrations, from top to bottom, of 280ppm, 375ppm and 560ppm. On the plots is the MODTRAN estimate of the energy out at each concentration, increasing as the concentration increases. Doubling the CO2 from 280ppm to 560ppm should increase the outgoing flux at 2 kilometers by 1.2Wm-2.

Since I selected -49.5C (223.7K @ about 142Wm-2) for the surface temperature, based on my interpretation the radiant impact on the surface would be about twice the MODTRAN estimate. That would increase the surface flux to 144.4Wm-2, for a new surface temperature of -48.5C, or one degree for a doubling. Today, at 375ppm (yes, that is about what it is in the Antarctic), the temperature increase would be about 0.5C. Because of the difficulty in determining an average Antarctic temperature, that may be the case, but it is somewhat unlikely.
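The doubled-forcing estimate above can be checked by inverting Stefan-Boltzmann. A minimal Python sketch, assuming the 142Wm-2 baseline and the 1.2Wm-2 MODTRAN change quoted in the text, with the surface impact taken as twice the MODTRAN value as the post proposes:

```python
SIGMA = 5.670374419e-8  # W m^-2 K^-4


def sb_temp(flux: float) -> float:
    """Black body temperature (K) for a given flux (W m^-2)."""
    return (flux / SIGMA) ** 0.25


base_flux = 142.0                          # -49.5 C surface, W m^-2
modtran_delta = 1.2                        # MODTRAN change at 2 km, 280 -> 560 ppm
new_flux = base_flux + 2 * modtran_delta   # doubled at the surface: 144.4

t_base_c = sb_temp(base_flux) - 273.15     # about -49.5 C
t_new_c = sb_temp(new_flux) - 273.15       # about -48.5 C
warming = t_new_c - t_base_c               # roughly one degree per doubling

print(f"{t_base_c:.2f} C -> {t_new_c:.2f} C ({warming:.2f} C)")
```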

Doubling the CO2 concentration from 560ppm to 1120ppm, MODTRAN estimates the energy out at 2km to be 67.7Wm-2. Using the same doubled impact at the surface, the temperature would increase to -47.7C, a 1.8 degree increase from 280ppm to 1120ppm, or a fourfold increase in CO2 concentration.

Carbon dioxide also changes the thermal properties of the atmosphere, the thermal conductivity and the thermal capacity. The change in conductivity alone for a doubling of CO2 is about 90 milliwatts, 24/7/365, which over an extended period would start adding up to real energy. That would not explain why the Antarctic is not warming, though. It would have implications during a prolonged solar minimum, but not just yet.

Another way of determining the impact of the change in CO2 is to consider the change in the atmospheric R-value of the Antarctic with respect to the change in possible CO2 forcing. The R-value, which I have covered before, is just a rule of thumb method of quantifying the thermal diffusion or heat loss. Using -49C @ 280ppm as a baseline and -89C as the estimated limit of the tropopause, the baseline R-value for the Antarctic is 0.278W/K. That compares to a baseline -49C to -89C emissivity of 0.458, which I will assume is unitless since it is the ratio Wm^-2 out(@184K)/Wm^-2 surface. At 560ppm, the R-value using the MODTRAN estimate is 0.280, versus an estimated emissivity of 0.450, again unitless. The change in R-value is about half the change in emissivity, resulting in a predicted surface temperature change for a doubling in the Antarctic of 0.42 degrees versus the emissivity-based estimate of 1.0 degrees. That is less than half the estimated impact, which seems to jibe pretty well with observations. For the current 375ppm, the estimated impact using the R-values is 0.07 degrees versus the estimated GHE impact of 0.50 degrees.
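The two emissivity values above are ratios of the tropopause-limit flux to the surface flux. A minimal Python sketch, assuming a 184K sink and the 142 and 144.4Wm-2 surface fluxes from the earlier paragraphs; the R-values are not recomputed here:

```python
SIGMA = 5.670374419e-8  # W m^-2 K^-4


def sb_flux(t_k: float) -> float:
    return SIGMA * t_k ** 4


tropopause_flux = sb_flux(184.0)  # ~65 W m^-2 at the -89 C limit
surface_280 = 142.0               # baseline surface flux, W m^-2
surface_560 = 144.4               # after doubling, from the MODTRAN-based estimate

eps_280 = tropopause_flux / surface_280  # ~0.458
eps_560 = tropopause_flux / surface_560  # ~0.450

print(f"{eps_280:.3f} -> {eps_560:.3f}")
```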

While this is in no way conclusive, the R-value method using the -89C tropopause limit appears to produce reasonable results. Neither method provides a definite value. The MODTRAN estimate appears to be an upper limit and the R-value a lower limit. Considering how difficult the problem is, I consider this progress.

UPDATE:

I forgot to mention why the R-value based estimate is roughly half of the Emissivity based estimate. It is the resilience of the tropopause as a heat sink.

Since the Tropopause appears to have a temperature limit, it and the Antarctic are very stable. The plot above, while hard to see, shows why the two estimates vary so much. Since the Tropopause tries to maintain a relatively stable temperature, it does not warm at the same rate as the surface. In fact, the additional CO2 tends to cool above the average radiant layer as it tends to warm the surface. So instead of an initial increase in the lapse rate, an elbow develops in the lapse rate as the atmosphere below the average radiant layer attempts to approach an isothermal characteristic. You will note on the plot above that at 2240ppm, the elbow is just beginning to appear.

In this blow-up, you can see the elbow that should begin in the range of 1120ppm. So more temperature inversions should be expected in the Antarctic as CO2 levels rise.

BTW, it seems that screen captures with Paint produce much better quality charts than the stupid software that is supposed to do the job better :)

Thanks to MODTRAN and the University of Chicago we can play with the radiant spectrum to ponder what might be going on down there. The three chart above are for no clouds, subarctic winter atmosphere, -49.5C surface temperature offset with the sensor located at 2 kilometers looking up with the CO2 concentration from top down, 280ppm, 375ppm and 560ppm. On the plots is the MODTRAN estimate of the energy out at each concentration, increasing as the concentration increases. Doubling the CO2 from 280ppm to 560ppm should increase the outgoing flux at 2 kilometers by 1.2Wm-2.

Since I selected -49.5C (223.7K, about 142Wm-2) for the surface temperature, based on my interpretation the radiant impact on the surface would be about twice the MODTRAN estimate. That would increase the surface flux to 144.4Wm-2, for a new surface temperature of -48.5C, or one degree for a doubling. Today, at 375ppm (yes, that is about what it is in the Antarctic), the temperature increase would be about 0.5 degrees. Because of the difficulty in determining an average Antarctic temperature, that may be the case, but it is somewhat unlikely.
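The surface arithmetic above can be reproduced with the Stefan-Boltzmann law. This is a minimal sketch, assuming a blackbody surface (emissivity 1), sigma = 5.67e-8, and the post's rule that the surface sees twice the change MODTRAN reports at 2 km; the function names are illustrative only.

```python
SIGMA = 5.67e-8   # Stefan-Boltzmann constant, W m^-2 K^-4
KELVIN = 273.15


def flux_from_temp(t_kelvin: float) -> float:
    """Blackbody emission in W m^-2 (surface emissivity assumed to be 1)."""
    return SIGMA * t_kelvin ** 4


def temp_from_flux(flux: float) -> float:
    """Invert Stefan-Boltzmann: temperature in K for a given flux."""
    return (flux / SIGMA) ** 0.25


def surface_warming(t_surface_c: float, modtran_delta: float, factor: float = 2.0):
    """Return (baseline flux, new flux, new temperature in C, warming in K).

    factor=2.0 encodes the post's assumption that the surface sees about
    twice the change MODTRAN reports at 2 km.
    """
    f0 = flux_from_temp(t_surface_c + KELVIN)
    f1 = f0 + factor * modtran_delta
    t1_c = temp_from_flux(f1) - KELVIN
    return f0, f1, t1_c, t1_c - t_surface_c


if __name__ == "__main__":
    f0, f1, t1, dt = surface_warming(-49.5, 1.2)
    print(f"baseline {f0:.1f} W/m2 -> {f1:.1f} W/m2, {t1:.2f} C, +{dt:.2f} K")
```

Run as a script, it gives a baseline near 142Wm-2 and about 0.94 degrees of warming for the 1.2Wm-2 doubling, which rounds to the one degree above.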

For a further doubling, from 560ppm to 1120ppm, MODTRAN estimates the energy out at 2km to be 67.7Wm-2. Using the same doubled impact at the surface, the temperature would increase to -47.7C, a 1.8 degree increase from 280ppm to 1120ppm, which is a fourfold increase in CO2 concentration.
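Working backwards from the -47.7C figure gives a consistency check: the surface flux change it implies and, under the same factor-of-two assumption, the total change at 2 km from 280ppm to 1120ppm. A minimal sketch; sigma = 5.67e-8 and blackbody emission are assumptions, and the implied 2 km change is a back-calculation, not a MODTRAN output.

```python
SIGMA = 5.67e-8   # Stefan-Boltzmann constant, W m^-2 K^-4
KELVIN = 273.15


def flux_c(t_c: float) -> float:
    """Blackbody flux (W m^-2) for a temperature given in degrees C."""
    return SIGMA * (t_c + KELVIN) ** 4


def implied_changes(t_base_c: float, t_new_c: float, factor: float = 2.0):
    """Surface-flux change implied by a temperature change, and the matching
    change at 2 km if the surface sees `factor` times the MODTRAN change."""
    surface_delta = flux_c(t_new_c) - flux_c(t_base_c)
    return surface_delta, surface_delta / factor


if __name__ == "__main__":
    surf, at_2km = implied_changes(-49.5, -47.7)
    print(f"surface +{surf:.2f} W/m2, implied 2 km change +{at_2km:.2f} W/m2")
```

The implied change at 2 km comes out a little over 2.3Wm-2 across two doublings, close to twice the 1.2Wm-2 quoted for the first doubling, which is what a roughly logarithmic response would give.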

Carbon dioxide also changes the thermal properties of the atmosphere: the thermal conductivity and the thermal capacity. The change in the conductivity alone for a doubling of CO2 is about 90 milliwatts, 24/7/365, which over an extended period would start adding up to real energy. That would not explain why the Antarctic is not warming, though. It would have implications during a prolonged solar minimum, but not just yet.
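To put 90 milliwatts, 24/7/365, in perspective, here is a quick tally of the energy it accumulates. The post does not give an area, so this sketch assumes the figure is per square meter (an assumption) and uses a 365-day year.

```python
SECONDS_PER_DAY = 24 * 60 * 60
DAYS_PER_YEAR = 365  # ignores leap days


def accumulated_energy(power_w: float, years: float) -> float:
    """Energy in joules delivered by a constant power over `years` years."""
    return power_w * SECONDS_PER_DAY * DAYS_PER_YEAR * years


if __name__ == "__main__":
    # 0.09 W: the ~90 mW conductivity change, ASSUMED here to be per m^2
    for years in (1, 10, 100):
        print(f"{years:>3} yr: {accumulated_energy(0.09, years) / 1e6:.1f} MJ per m^2")
```

Under that assumption it works out to roughly 2.8 MJ per square meter per year.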

Another way of determining the impact of the change in CO2 is to consider the change in the atmospheric R-value of the Antarctic with respect to the change in possible CO2 forcing. The R-value, which I have covered before, is just a rule-of-thumb method of quantifying thermal diffusion, or heat loss. Using -49C @ 280ppm as a baseline and the -89C estimated limit of the tropopause, the baseline R-value for the Antarctic is 0.278 K/(Wm^-2). That compares to a baseline -49C to -89C emissivity of 0.458, which I will assume is unitless, since it is the ratio Wm^-2 out (@184K)/Wm^-2 surface. At 560ppm, the R-value, using the MODTRAN estimate, is 0.280 versus an estimated emissivity of 0.450, again unitless. The change in R-value is about half the change in emissivity, resulting in a predicted surface temperature change for a doubling in the Antarctic of 0.42 degrees versus 1.0 degrees for the emissivity-based estimate. That is less than half the estimated impact, which seems to jibe pretty well with observations. For the current 375ppm, the estimated impact using the R-values is 0.07 degrees versus the estimated GHE impact of 0.50 degrees.
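The two ratios can be recomputed from the numbers above. The sketch below is a reconstruction under stated assumptions, not the author's spreadsheet: it takes the R-value to be the surface-to-tropopause temperature drop divided by the surface flux (that reading reproduces 0.278 and 0.280), uses the -49.5C surface temperature from the MODTRAN runs, and puts the tropopause at -89C (184.15K). With 184.15K the emissivities come out near 0.460 and 0.452; the 0.458 and 0.450 quoted above match a tropopause rounded to 184K. The final ratio of relative changes is one guess at how the "about half" comparison was made; it comes out near 0.41.

```python
SIGMA = 5.67e-8             # Stefan-Boltzmann constant, W m^-2 K^-4
KELVIN = 273.15
T_TROPO_K = -89.0 + KELVIN  # assumed tropopause limit, 184.15 K


def flux(t_k: float) -> float:
    """Blackbody flux in W m^-2 for a temperature in K."""
    return SIGMA * t_k ** 4


def emissivity(t_surf_k: float) -> float:
    """Ratio of tropopause emission to surface emission (unitless)."""
    return flux(T_TROPO_K) / flux(t_surf_k)


def r_value(t_surf_k: float) -> float:
    """ASSUMED R-value: temperature drop per unit surface flux, K/(W m^-2)."""
    return (t_surf_k - T_TROPO_K) / flux(t_surf_k)


def doubled_surface_temp(t_surf_k: float, modtran_delta: float = 1.2) -> float:
    """Surface temperature (K) after adding twice the MODTRAN change to its flux."""
    return ((flux(t_surf_k) + 2.0 * modtran_delta) / SIGMA) ** 0.25


if __name__ == "__main__":
    t280 = -49.5 + KELVIN
    t560 = doubled_surface_temp(t280)
    e280, e560 = emissivity(t280), emissivity(t560)
    r280, r560 = r_value(t280), r_value(t560)
    print(f"emissivity {e280:.3f} -> {e560:.3f}, R {r280:.3f} -> {r560:.3f}")
    ratio = ((r560 - r280) / r280) / abs((e560 - e280) / e280)
    print(f"relative change, R vs emissivity: {ratio:.2f}")
```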

Sunday, February 5, 2012

Arrhenius is Still Dead but his Mistake Lives on

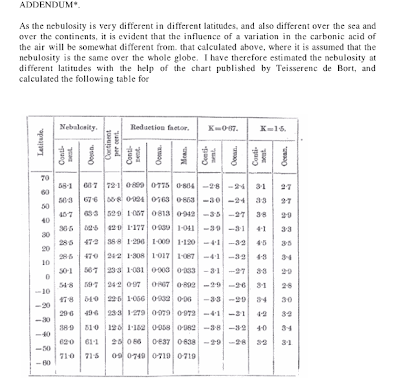

The image above is the final chart from the 1896 paper by Svante Arrhenius titled On the Influence of Carbonic Acid in the Air upon the Temperature of the Ground. I have challenged a number of people to review the paper before making claims based on what it contains. No one bothers to actually check the content of the papers they quote; that is too much like work, I suppose.

Svante had a few successes as a scientist and a few failures. That is part of the science business: sometimes you get it right, and sometimes you inspire others to get it right by being wrong. In the table, Svante predicted the future. We happen to live in what is his future, so we can check how he did. In the last two columns there are K=0.67 and K=1.5. They are, respectively, 67% of the CO2 concentration of his day and 150% of the CO2 concentration of his day. In his day, CO2 in the air was at a concentration of around 280 parts per million. One hundred and fifty percent of 280ppm is 420ppm. Today, the concentration is about 390ppm, so we are very close to his predicted future concentration. One would think that his predicted temperatures would be pretty close to what we have today.
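Arrhenius' own rule was that temperature rises roughly in arithmetic progression as carbonic acid rises in geometric progression, so concentrations are best compared on a log scale. A small sketch of how close 390ppm is to his K=1.5 case in those terms, assuming the 280ppm baseline used above.

```python
import math

BASELINE_PPM = 280.0  # CO2 in Arrhenius' day, as used in the post
K_ARRHENIUS = 1.5     # his "carbonic acid = 1.5" column


def log_fraction(current_ppm: float, k: float, baseline: float = BASELINE_PPM) -> float:
    """Fraction of the log-scale step from baseline to k * baseline reached."""
    return math.log(current_ppm / baseline) / math.log(k)


if __name__ == "__main__":
    target = K_ARRHENIUS * BASELINE_PPM
    print(f"K=1.5 target: {target:.0f} ppm")
    print(f"390 ppm covers {log_fraction(390.0, K_ARRHENIUS):.0%} of that step")
    print(f"... and {log_fraction(390.0, 2.0):.0%} of a doubling")
```

By Arrhenius' own scaling, then, today's concentration should show roughly 80% of the warming in his K=1.5 column.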

The chart above, from NASA GISS, shows the temperature change by latitude as of last year, 2011, compared to the baseline period of 1896 to 1906, Arrhenius' time. How does that compare to Arrhenius' prediction? Not that well, in my opinion.

Nebulosity, which the chart above takes into account, means cloudiness. I seem to remember that clouds were a source of uncertainty in climate science. What is minimum nebulosity? A lack of clouds, which Arrhenius assumed would produce the greatest water vapor feedback for increasing CO2 in the air.

So where are we? We have an estimate that is more than 100 years old and more than 100 years of data, limited of course, but the best we have. So, based on the 100-plus-year-old formula, what is the climate's sensitivity to CO2?
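One way to do that check, sketched here under loud assumptions: treat Arrhenius' logarithmic rule as exact, attribute an observed warming entirely to CO2, and scale it to a doubling. The warming value is left to the reader; the 0.8 in the example is a hypothetical placeholder, not a GISS figure.

```python
import math


def per_doubling(delta_t: float, c0_ppm: float, c1_ppm: float) -> float:
    """Scale a temperature change between two CO2 levels to a doubling.

    Assumes dT = S * log2(c1 / c0) (an Arrhenius-style logarithmic response)
    and that all of delta_t is due to CO2.
    """
    if c1_ppm <= c0_ppm:
        raise ValueError("c1_ppm must exceed c0_ppm")
    return delta_t * math.log(2.0) / math.log(c1_ppm / c0_ppm)


if __name__ == "__main__":
    observed = 0.8  # HYPOTHETICAL placeholder; substitute your own reading of the data
    print(f"implied sensitivity: {per_doubling(observed, 280.0, 390.0):.2f} C per doubling")
```

The same function applied to a value read from Arrhenius' K=1.5 column converts his prediction into a per-doubling figure for comparison.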

Hey, I coulda screwed up, why not check it yourself?

We know a lot more now than Arrhenius did, or do we? Climate scientists still maintain that water vapor feedback can be 2 to 3 times as potent as CO2 in the air. That does not appear to be the case, at least based on the relationship between carbonic acid in the air and the temperature of the ground developed by Svante Arrhenius.

Wednesday, February 1, 2012

Trying to Piece Together Russian Agriculture in Siberia

Thanks to revolts, revolutions and world wars, the history of Russian agriculture in the Siberian region is a puzzle. So this post is just a collection of what I happen upon.

Wikipedia, not a great but easy resource, mentions that over 10 million Russians migrated to the Siberian region following the completion of the Trans-Siberian railroad. On average, each was given 16.5 hectares to move into the region. 16.5 million hectares is 0.165 million square kilometers. This migration took place between 1890 and 1910. Its impact does not show up in the Taymyr reconstruction as an increase in optimum growing conditions. There is a small increase within a generally downward trend, but nothing that stands out as significant.
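A quick unit check on the land figures (1 km² = 100 ha). Note that 10 million settlers at 16.5 ha each would come to 165 million ha, ten times the 16.5 million ha quoted; the smaller total corresponds to about one million allotments, which might fit if the grants were per household. That is an assumption, not something the post states, so both totals are shown.

```python
HECTARES_PER_KM2 = 100  # 1 km^2 = 100 ha


def hectares_to_km2(ha: float) -> float:
    """Convert hectares to square kilometers."""
    return ha / HECTARES_PER_KM2


if __name__ == "__main__":
    settlers = 10_000_000
    ha_each = 16.5
    print(f"16.5 million ha = {hectares_to_km2(16.5e6):,.0f} km^2")
    total = settlers * ha_each
    print(f"10 million x 16.5 ha = {total:,.0f} ha = {hectares_to_km2(total):,.0f} km^2")
```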

http://data.giss.nasa.gov/cgi-bin/gistemp/do_nmap.py?year_last=2011&month_last=12&sat=4&sst=1&type=anoms&mean_gen=12&year1=1880&year2=2011&base1=1900&base2=1930&radius=1200&pol=reg

There are some interesting points, though. While the whole series does not match, the blip does have some support in local temperatures around Omsk. Also, the Russians were producing a great deal of wheat, inefficiently by world standards, to pay off debt. With the new Siberian railroad, there was probably a good deal of timber harvested for export as well. I will need to check other regional commodity reports for export information.

Unfortunately, the Taymyr tree ring reconstruction stops in 1970 because, according to Jacoby, the trees stopped responding to temperature. Well, duh! So I need to find the source of the raw data to extend the reconstruction to at least 2000, to see whether the 1994 climate shift shows in the tree rings.

That is hard to read, so I will have to break it down or find a better program than Paint to convert the chart. Anyway, the stratospheric shift is easy to see, and both the mid troposphere and lower troposphere also shifted in a similar ratio. Not too exciting, but I think it is a much longer period shift that I may be able to match with the Taymyr and CET records. Pretty iffy, but interesting.

Fruit Cocktail

Now that I have a new computer and no cat in the house to help program spreadsheets, I have started playing with something that has bothered me for a while: how much information is there in tree rings?

Trees have favorite temperature, precipitation and nutrient ranges, and they don't care for pests, fires and competition. So temperature plays an important role, but how important?

I have heard that thirty percent of growth is based on temperature. I don't know; I suspect that is just a ballpark figure. Anyway, I plotted the Taymyr tree ring reconstruction along with the Central England Temperature (CET) record. That would be apples and oranges, I imagine, hence the Fruit Cocktail title.

I don't like to over-smooth; in this case, I used 17-year moving averages of both the Taymyr and the CET. Why 17 years? Because that is the new 15 years, of course! Then I averaged the moving averages, probably a mistake, but I wanted to see where the apples and the oranges might be comparable. Then I added a linear regression to the average of averages. The R^2 value for that regression is 0.27, meaning the trend line explains only about 27% of the variance in the average of averages and leaves about 73% unexplained, so this may well have been a total waste of time.
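The procedure described above (17-year moving averages of each series, their average, then a straight-line fit against year with its R^2) can be sketched in plain Python. Assumptions: both series are annual and already aligned on the same years, the moving average is trailing (as a spreadsheet trendline would compute it), and no rescaling is applied even though the Taymyr index and the CET are in different units. The function names are illustrative.

```python
from statistics import mean


def moving_average(values, window=17):
    """Trailing moving average; returns len(values) - window + 1 points."""
    if not 1 <= window <= len(values):
        raise ValueError("window must be between 1 and len(values)")
    running = sum(values[:window])
    out = [running / window]
    for i in range(window, len(values)):
        running += values[i] - values[i - window]  # slide the window one year
        out.append(running / window)
    return out


def linear_fit(xs, ys):
    """Ordinary least squares y = a + b*x; returns (a, b, r_squared)."""
    mx, my = mean(xs), mean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    b = sxy / sxx
    a = my - b * mx
    ss_res = sum((y - (a + b * x)) ** 2 for x, y in zip(xs, ys))
    ss_tot = sum((y - my) ** 2 for y in ys)
    return a, b, 1.0 - ss_res / ss_tot


def fruit_cocktail(years, taymyr, cet, window=17):
    """Smooth both series, average them, and fit a trend line to the average."""
    if not len(years) == len(taymyr) == len(cet):
        raise ValueError("series must be aligned on the same years")
    avg = [(t + c) / 2 for t, c in zip(moving_average(taymyr, window),
                                       moving_average(cet, window))]
    # Each trailing average is labelled with the last year in its window.
    return linear_fit(years[window - 1:], avg)
```

Calling `fruit_cocktail(years, taymyr, cet)` with the two annual series returns the intercept, slope and R^2 of the trend through the average of averages.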

There are sections where the R^2 value would be much higher, mainly up slopes with a few down slopes, and the correlation gets better as time progresses. That could be caused by a number of factors that are not temperature related. Since the best correlation begins around 1814, I am leaning towards agriculture and population growth.
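To look at whether the agreement improves over time, one option (a swapped-in technique, not necessarily what the chart shows) is a rolling Pearson correlation between the two smoothed series, reported as r^2 for each window. The 50-year default window and the alignment of the series are assumptions.

```python
from math import nan, sqrt


def pearson_r(xs, ys):
    """Pearson correlation coefficient; nan if either series is constant."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        return nan
    return sxy / sqrt(sxx * syy)


def rolling_r2(years, a, b, window=50):
    """(first year, r^2) for each run of `window` consecutive aligned points."""
    if len(a) != len(b) or len(a) != len(years):
        raise ValueError("series must be aligned on the same years")
    out = []
    for start in range(len(a) - window + 1):
        r = pearson_r(a[start:start + window], b[start:start + window])
        out.append((years[start], r * r))
    return out
```

Feeding it the two 17-year moving averages, trimmed to the same years, gives a per-window r^2 that can be plotted against the starting year.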

So I have a puzzle that may be a waste of time, and what better to do than waste someone else's time with the puzzle? There ya go, you just may have wasted some time too :)
