Long term settling times that can appear to be oscillations or weakly damped decay curves just drive the Climate Science minions nuts. After being spoon fed the milquetoast basics with simplistic control theory, they just can't grasp how a system can be stable but swing around on different time scales. I ran into this situation way back in the 1980s with a first-for-us HVAC monitoring system, built around a brand new, just released A to D (analog-to-digital) converter on an S100 bus board, which we hooked up to a 30 pound Compaq luggable with the red plasma screen. Woo Hoo, we were cooking with high tech gas! The "Project Manager" skimped on the laser trimmed resistors and installed 1% Radio Shack "precision" resistors to set the range for the 4 to 20 milliamp pressure and temperature transducers which I was to install. One of the pressure transducers was for the discharge static pressure in a constant volume central AC system, which would never vary much. After I explained how the "precision" resistors truncated the range of the 4-20 milliamp sensors, requiring software calibration, we started our data logging project. The system worked fine and maintained temperature and humidity within tolerances, but the "project manager" got completely wrapped up in the discharge static pressure data. It was all over the place, he thought. Nope, it was just noise once I got him to adjust the range. The system pressure was 0.75 inwc (inches of water column) and varied by 0.025 inwc in time with the fan RPM. That 0.025 inwc variation bugged the hell out of him though.
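For anyone who never wired a current loop, here is a minimal sketch of why an off-value sense resistor truncates a 4-20 mA range and how a two-point software calibration fixes the reading. It assumes a hypothetical 250 ohm nominal resistor (4-20 mA to 1-5 V), a hypothetical 0-5 V converter input and a hypothetical 0-2 inwc transducer span; none of those values come from the original job.

```python
NOMINAL_OHMS = 250.0    # hypothetical nominal sense resistor: 4-20 mA -> 1-5 V
ADC_FULL_SCALE_V = 5.0  # hypothetical converter input range, 0-5 V
SPAN_INWC = 2.0         # hypothetical transducer span, 0-2 inches water column


def loop_voltage(current_ma, ohms):
    """Voltage the converter sees across the resistor, clipped at full scale."""
    return min(current_ma / 1000.0 * ohms, ADC_FULL_SCALE_V)


def naive_pressure(volts):
    """Convert to pressure assuming the resistor is exactly nominal."""
    current_ma = volts / NOMINAL_OHMS * 1000.0
    return (current_ma - 4.0) / 16.0 * SPAN_INWC


def calibrated_pressure(volts, v_at_4ma, v_at_20ma):
    """Two-point software calibration from voltages measured at 4 and 20 mA."""
    return (volts - v_at_4ma) / (v_at_20ma - v_at_4ma) * SPAN_INWC


# A resistor 1% low reads 0.75 inwc (10 mA) as about 0.7375 inwc uncorrected.
low_r = 247.5
v = loop_voltage(10.0, low_r)
print(naive_pressure(v))
print(calibrated_pressure(v, loop_voltage(4.0, low_r), loop_voltage(20.0, low_r)))

# A resistor 1% high pushes 20 mA past 5 V, so the top of the range is clipped.
print(loop_voltage(20.0, 252.5))
```

Measuring the actual voltages at the two ends of the loop, rather than trusting the resistor's label, is what makes the correction independent of which direction the 1% error went, as long as the top end isn't clipped.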

This brings me to a two compartment model by Stephen Schwartz of Brookhaven National Laboratory. Dr. Schwartz used a simple RC two compartment model with the atmosphere/ocean mixing layer as the smaller compartment and the bulk of the oceans as the second compartment. The way he divided the heat capacities, he ended up with a roughly 8 year time constant for the atmosphere/ocean mixing layer and a roughly 500 year settling time for the bulk of the oceans. The object was to determine climate "sensitivity", and based on his rough model he came up with a ballpark of around 2C per doubling. Nice simple approach, nice solid results, nice simple model. The ~8 year time constant would be the equivalent of the noisy discharge static pressure in terms of actual energy. The bulk of the oceans would have about 800 times the capacity of the atmosphere/ocean mixing layer compartment.
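A minimal sketch of a two compartment RC model, assuming the common linear "two-box" energy balance form (C1 dT1/dt = F - lambda*T1 - gamma*(T1 - T2), C2 dT2/dt = gamma*(T1 - T2)). That may not be exactly the formulation Dr. Schwartz used, and every parameter value and name below is hypothetical, picked only so the two modes land near the ~8 year and ~500 year figures above. The point it shows is the one from the HVAC story: a perfectly stable linear system can carry two very different time constants at once.

```python
import math

# Hypothetical parameters, chosen only to reproduce ~8 yr and ~500 yr modes.
C_UPPER = 16.0  # atmosphere + mixed layer heat capacity, W*yr/m^2/K
C_DEEP = 240.0  # bulk ocean heat capacity, W*yr/m^2/K
LAMBDA = 1.2    # loss to space per degree of warming, W/m^2/K
GAMMA = 0.8     # upper-to-deep exchange coefficient, W/m^2/K


def time_constants(c1, c2, lam, gamma):
    """Return (tau_fast, tau_slow) in years from the eigenvalues of the 2x2 system."""
    a, b = -(lam + gamma) / c1, gamma / c1
    c, d = gamma / c2, -gamma / c2
    half_trace = (a + d) / 2.0
    det = a * d - b * c
    root = math.sqrt(half_trace ** 2 - det)  # real because b*c > 0
    slow_eig = half_trace + root  # least negative eigenvalue
    fast_eig = half_trace - root  # most negative eigenvalue
    return -1.0 / fast_eig, -1.0 / slow_eig


def step_response(c1, c2, lam, gamma, forcing, years, steps_per_year=20):
    """Forward-Euler upper-compartment temperature, one value per year, after a step in forcing."""
    dt = 1.0 / steps_per_year
    t_up = t_deep = 0.0
    history = []
    for _ in range(years):
        for _ in range(steps_per_year):
            flow = gamma * (t_up - t_deep)  # heat moving from upper to deep compartment
            t_up += (forcing - lam * t_up - flow) / c1 * dt
            t_deep += flow / c2 * dt
        history.append(t_up)
    return history


tau_fast, tau_slow = time_constants(C_UPPER, C_DEEP, LAMBDA, GAMMA)
print(f"fast mode ~{tau_fast:.1f} yr, slow mode ~{tau_slow:.0f} yr")
```

With these made-up numbers the fast mode comes out near 8 years and the slow mode near 500 years. The upper compartment gets most of the way to its first plateau within a few decades, then creeps the rest of the way over centuries, which is exactly the kind of "settling" that gets mistaken for something else.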

In the sketch above, I have a three compartment model for the bulk of the oceans. I am not concerned with the noise, just the energy. Since the southern oceans can more easily exchange energy, their time constant would be close to the 500 years that Dr. Schwartz figured. The northern hemisphere oceans cannot exchange energy as well with each other and, thanks to the Coriolis effect, can't exchange energy that well with the southern oceans. As a rough estimate based on just area and common equatorial forcing, the time constant for the northern Atlantic would be about 125 years and the time constant for the northern Pacific about 250 years. With the northern Atlantic "charging" first, it would be the first to start a discharge by whatever convenient path it can find, with a "likely" harmonic of tau(Atlantic)/2, or 62.5 years. The northern Pacific would "likely" have a 125 year harmonic and the southern hemisphere a 250 year harmonic. The noisiest signal would likely be the north Atlantic 62.5 year "oscillation". It doesn't matter what external forcing is applied; with unequal areas and limited heat transfer paths there will be some "oscillations" around these frequencies, and the magnitude of the oscillations would depend on the imbalance between compartments. Most of the world's land mass drains into the Atlantic, and since that energy varies with season and precipitation/ice melt, there will be constant thermal "noise".
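The tau/2 arithmetic from the paragraph above, written out. This only reproduces the rule of thumb as stated (harmonic period = time constant / 2); it does not derive that rule, and the dictionary and function names are hypothetical.

```python
# Rough compartment time constants from the three compartment sketch, in years.
TAU_YEARS = {
    "North Atlantic": 125.0,
    "North Pacific": 250.0,
    "Southern Hemisphere oceans": 500.0,
}


def half_tau_periods(taus):
    """Apply the tau/2 rule of thumb to get a 'likely' harmonic period per compartment."""
    return {name: tau / 2.0 for name, tau in taus.items()}


for name, period in half_tau_periods(TAU_YEARS).items():
    print(f"{name}: ~{period:g} yr")
```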

Not that complex. Look at the big picture, not the noise.

Saturday, August 31, 2013

Friday, August 30, 2013

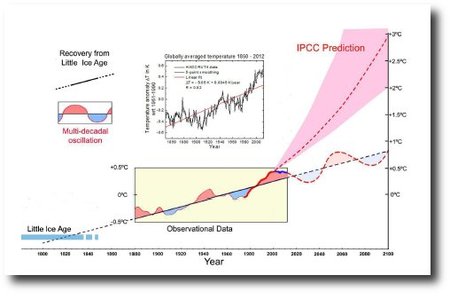

The Global Gore Effect

While everyone waits impatiently, like kids on Christmas Eve, for the Fifth Assessment Report on Climate Change and/or Carbon Pollution, the lack of Global Warming for the past few years has created a bit of a situation for the members of the Intergovernmental Panel on Climate Change (IPCC). The problem is that ever since Al Gore got involved, climate hasn't done that much changing. Temperatures peaked for the most part in 1998, when Al Gore symbolically signed the Kyoto Protocol. Since that symbolic signing, the United States has been one of the few nations to symbolically meet the Kyoto emissions limits. You could say that the former Vice President of the United States symbolically tamed climate all by himself, proving that the pen is more powerful than the United Nations.

It saves a lot of work downloading all sorts of data; all you need is GISTEMP from 1998. You don't have to worry about all those "adjustments" and paleo stuff, because the Gore Effect is the strongest climate forcing known to man. Al Gore has saved the world just by picking up a pen, symbolically of course.

Monday, August 26, 2013

Convection Cells - Sensible Impact

One of the smaller discrepancies in the Earth Energy Budgets is the sensible or "thermal" heat loss/gain, where the K&T budgets use a lower 17 Wm-2 and the Stephens et al. budget uses 24 Wm-2. That is a large difference considering that anthropogenic forcing is estimated to be around 4 Wm-2 if you include all radiant impacts. Thermals are treated as an adiabatic process: air expands as it rises and compresses as it falls, and by assuming that no energy is exchanged with the environment, the net impact is zero provided the starting and ending pressures are the same. Using sea level as a reference, convection starting at sea level and returning to sea level would have zero net impact, but convection starting at sea level and ending at some higher elevation would have a cooling impact, because compression would not be able to complete the adiabatic cycle. With the "average" environmental lapse rate at ~6.5C per kilometer, the air ending at a higher elevation would be about 0.65C cooler per 100 meters of elevation.

The average elevation of global land masses is about 680 meters above sea level. Air rising from the oceans and ending on land would be ~4.4C cooler than air returning to sea level, on average. Land covers ~30% of the surface of the Earth, so if all evaporation took place over the oceans, the rain falling on land would be about the same temperature as the air carrying that moisture, and the ~30% that fell on land would be cooler by ~4.4 C degrees. Just as a rough estimate, 30% of 4.4 is 1.3 degrees of gross cooling if averaged globally.
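The lapse rate arithmetic from the two paragraphs above, as a quick sketch. The constants are the rough values quoted in the text (6.5 C/km, 680 m, 30% land); the function name is hypothetical.

```python
LAPSE_RATE_C_PER_KM = 6.5      # "average" environmental lapse rate used in the text
MEAN_LAND_ELEVATION_M = 680.0  # average elevation of global land masses
LAND_FRACTION = 0.30           # share of Earth's surface covered by land


def elevation_cooling_c(elevation_m, lapse_rate_c_per_km=LAPSE_RATE_C_PER_KM):
    """Temperature drop for air that ends its trip at elevation_m instead of sea level."""
    return elevation_m / 1000.0 * lapse_rate_c_per_km


land_cooling = elevation_cooling_c(MEAN_LAND_ELEVATION_M)  # ~4.4 C
global_cooling = LAND_FRACTION * land_cooling              # ~1.3 C averaged globally
print(round(land_cooling, 2), round(global_cooling, 2))
```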

If the average temperature of the global ocean surface is 290 K (about 17 C), the impact of precipitation on global "surface" temperature would be a ~1.3 C reduction to 288.7 K. In terms of energy, the net global impact would be on the order of 7 Wm-2 for this crude illustration. There is more to it than that, of course.
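Where the "on the order of 7 Wm-2" comes from: a sketch that treats the surface as a blackbody (the same simplification the text uses) and compares the Stefan-Boltzmann flux at 290 K and at 288.7 K.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4


def blackbody_flux(t_kelvin):
    """Blackbody emission in W m^-2 for a temperature in kelvin."""
    return SIGMA * t_kelvin ** 4


delta = blackbody_flux(290.0) - blackbody_flux(288.7)
print(f"{blackbody_flux(290.0):.1f} - {blackbody_flux(288.7):.1f} = {delta:.1f} W m^-2")
```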

Since water vapor makes things complicated, HVAC uses a number of rules of thumb to simplify estimates. One is the sensible heat ratio (SHR), the sensible heat divided by the total heat loss/gain in a moist air process. With an estimated SHR of 0.59 and a latent heat loss of 88 Wm-2, the total heat loss would be ~214 Wm-2, with the sensible loss being 126 Wm-2. In HVAC you are not generally concerned with what portion of that sensible heat loss is due to conduction, convection or radiant transfer, just that the heat loss is not latent. Using the same rough approximation of land area, 30% of the 126 Wm-2 of sensible heat would not return to the source, the oceans, resulting in approximately 38 Wm-2 of net loss on a "Global" average from sea level. The HVAC SHR rule of thumb is at constant pressure, so it does not include the compression loss. Including both results in 45 Wm-2 of total sensible heat loss from the oceans to the land. If the average temperature at sea level is 290 K, which has an effective energy of 400 Wm-2, the effective energy of the land area would be 355 Wm-2, or 45 Wm-2 less. The 70% of the Earth's surface at sea level would be ~17C and the 30% not at sea level would be ~8C. Global average surface temperature would then be about 14.3 C degrees. Using the rough estimates of sea surface temperature (SST) from the pre-satellite era, the HVAC rule of thumb with just a bit of correction for compression produces a reasonable rough estimate. You can call it a gut or sanity check if you like.
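The SHR chain of estimates in the paragraph above, step by step. This is only a sketch of the post's own arithmetic; the 7 Wm-2 compression figure is the rough value from the earlier paragraph, and the variable and function names are hypothetical.

```python
SIGMA = 5.670374419e-8   # Stefan-Boltzmann constant, W m^-2 K^-4

LATENT = 88.0            # latent heat loss, W m^-2
SHR = 0.59               # estimated sensible heat ratio
LAND_FRACTION = 0.30     # share of sensible heat that ends up on elevated land
COMPRESSION_LOSS = 7.0   # rough compression estimate from earlier in the post, W m^-2
SEA_FLUX = 400.0         # effective energy at ~290 K sea level, W m^-2
SEA_C = 17.0             # sea-level temperature, C


def total_from_latent(latent, shr):
    """SHR = sensible / total, so latent = (1 - SHR) * total."""
    return latent / (1.0 - shr)


def flux_to_celsius(flux):
    """Invert the Stefan-Boltzmann law and convert kelvin to Celsius."""
    return (flux / SIGMA) ** 0.25 - 273.15


total = total_from_latent(LATENT, SHR)                    # ~214.6 W m^-2
sensible = total - LATENT                                 # ~126.6 W m^-2
net_loss = LAND_FRACTION * sensible + COMPRESSION_LOSS    # ~45 W m^-2
land_c = flux_to_celsius(SEA_FLUX - net_loss)             # ~8 C
global_c = (1.0 - LAND_FRACTION) * SEA_C + LAND_FRACTION * land_c  # ~14.3 C
print(round(total, 1), round(sensible, 1), round(net_loss, 1), round(land_c, 1), round(global_c, 1))
```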

The actual average SST is more complicated. In the northern hemisphere, SST is closer to 20 C, and in the southern hemisphere it is closer to 17 C degrees. More of the evaporation in the northern hemisphere is likely to fall on land because most of the land is in the northern hemisphere. It would take a fairly detailed analysis to get more than a rough estimate, but the HVAC rule of thumb indicates that there is a fairly large amount of sensible heat loss to be considered.

Not all of that sensible heat is permanently lost. It is transferred to a higher elevation where it can eventually work its way back to sea level. The longer the moisture associated with the sensible heat remains on the land masses, the more significant the sensible loss will be. Snow, ice, ground water and lake reservoirs hold that energy in a lower state for a longer period of time, making precipitation records and reconstructions a valuable tool for determining past climate conditions. Some of it, though, can be lost radiantly, which the HVAC rule of thumb ignores.

There is a radiant rule of thumb, though, that is useful. That sensible heat is transferred to a higher average elevation where the energy can be emitted in all directions. Assuming that up/down is the most significant radiant path, approximately half of the sensible heat loss would be "true" loss and not "reflected" back to the surface. Half of the 45 Wm-2 would be 22.5 Wm-2, which is very close to the sensible impact estimated by Stephens et al. in their version of the Earth Energy Budget, and when you include their margin of error, +/- 7 Wm-2, the HVAC plus radiant rule of thumb performs rather well as a ballpark estimate.
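The radiant rule of thumb as a two-line check: halve the 45 Wm-2 (the up/down split assumed in the text) and see whether it falls inside the Stephens et al. 24 +/- 7 Wm-2 range quoted above. Function names are hypothetical.

```python
def radiant_half(sensible_loss):
    """Assume half of the elevated sensible heat radiates up (lost) and half back down."""
    return sensible_loss / 2.0


def within_range(value, central, margin):
    """True if value lies inside central +/- margin."""
    return central - margin <= value <= central + margin


estimate = radiant_half(45.0)             # 22.5 W m^-2
print(estimate, within_range(estimate, 24.0, 7.0))
```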

The trillion dollar question is how accurately the sensible impact can actually be determined and the time scale required for returning to the mean. Say hello to sea level.

This graph of sea level recorded at Key West is from the NOAA Sea Levels Online site. I picked Key West because it is close to me, it is a coral island reasonably well removed from areas of seismic activity, and it has a fairly long record for the US. Since sea level change would be a proxy for precipitation retained on the land masses, it would be a reasonable proxy for both the thermosteric impact (warming of the oceans) and land mass sensible heat retention. I doubt the two can be completely separated. The graph indicates that sea level has risen by ~0.2 meters in the past century, which is almost 8 inches in typical Redneck units. There appears to have been more variation from 1925 to 1950 than from 1970 to present, which would indicate that there is not much, if any, acceleration in sea level rise. One would imagine that if CO2 is causing everything, there would be some signature, an acceleration of sea level rise beginning ~1950, so we could all agree that CO2 done it and move on. But there isn't.
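If you want to check a tide gauge record for acceleration yourself rather than eyeballing a graph, one simple approach (my choice here, not something NOAA's page does for you) is a least-squares quadratic fit: a clearly positive quadratic term is the acceleration signature the paragraph above is looking for. This is a minimal stdlib sketch; the function names are hypothetical and no Key West numbers are included, so you would feed it the annual means downloaded from the NOAA site.

```python
def _det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def _solve3(m, r):
    """Solve the 3x3 linear system m * x = r with Cramer's rule."""
    d = _det3(m)
    out = []
    for col in range(3):
        mc = [row[:] for row in m]
        for i in range(3):
            mc[i][col] = r[i]
        out.append(_det3(mc) / d)
    return out


def rate_and_acceleration(years, levels_mm):
    """Fit level = a + b*x + c*x**2 with x = year - mean(year).

    Returns (b, 2*c): the rate in mm/yr at the mean year and the
    acceleration in mm/yr^2.
    """
    n = len(years)
    t0 = sum(years) / n
    x = [t - t0 for t in years]
    s = [sum(xi ** k for xi in x) for k in range(5)]                      # sums of x^0..x^4
    r = [sum(yi * xi ** k for xi, yi in zip(x, levels_mm)) for k in range(3)]
    normal = [[s[0], s[1], s[2]], [s[1], s[2], s[3]], [s[2], s[3], s[4]]]
    _, b, c = _solve3(normal, r)
    return b, 2.0 * c
```

Centering the years on their mean keeps the normal equations well conditioned; fitting raw calendar years (x around 2000, x^4 around 10^13) is a classic way to get garbage out of a quadratic fit.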

Instead we have what appears to be an underestimation of sensible heat loss of ~8 Wm-2 by the old guard climate alarmists, roughly the average adiabatic compression difference between sea level and land elevation, which is a natural variability consideration. Where the rain falls depends on atmospheric and ocean cycles which, judging by the sea level at Key West, can have century long trends or longer.

Now you should be asking how I estimated the SHR. I did it by comparing the energy budget estimates with absolute temperature and humidity estimates. It is not carved in stone; it is just an initial estimate, and it most likely will vary with time and with the natural oscillations that vary the percentage of rain that falls on the elevated plains. If you have a better one, whip it out.

Sunday, August 25, 2013

What Sign was that Again?

The great thing about thermodynamics is that you can change your frame of reference. From the correct reference you get useful answers, which can be transferred to another frame to learn more about the system and also check your work. You can get some really screwy answers if you pick the wrong reference. Climate science started with less than stellar references: a top of the atmosphere which is actually the middle of the atmosphere, a surface that is actually a kilometer or two above the real surface, and averages of air temperatures a couple of meters above the surface for land and about 10 meters below the surface for the oceans. So there are all sorts of opportunities for climate scientists to really end up looking like dumbasses.

Just for fun I thought I would compare the BEST "Global" land "surface" temperature average with the Atlantic Multi-decadal Oscillation and the Houghton NH land use "carbon" estimates, INVERTED. Why inverted? Well, a little background first.

The Houghton land use carbon study is an estimate, nothing wrong with that, but the depth of the estimated soil carbon loss is rather shallow. Along with losing soil carbon, land use tends to increase soil moisture loss, erosion and average soil temperature by a degree or two. Even though carbon is the focus of everything climate related, the land use carbon estimate is nearly useless as a land use carbon estimate; it can be off by nearly 100% in areas where deep rooted plants were the norm, like the Steppe. So I thought I would look for a relationship of any kind.

Land use is considered a negative forcing. The climate scientists are certain that cutting down trees to create pasture for goats to overgraze is good for the "climate". Never mind the occasional screw-up that causes a dust bowl here and there or turns a fresh water sea into a salt flat; land use cools the environment. Wetlands cause warming with all that water vapor feedback, so they are not good for climate either. Minions of the Great and Powerful Carbon look to the land that is warming, but should be cooling because of all that land use change, to show just how bad things are becoming because mankind is addicted to Carbon.

Since the Atmospheric Boundary Layer (ABL) is the real greenhouse, what cools the true surface warms the ABL, which is fixed at roughly 0 C provided there is water vapor. Without water vapor the physics change: you get less deep convection and the lapse rate increases.

I have mentioned for a while that the impact of land use looked completely screwy, but I have been constantly reassured that the sign is correct. Never mind that intense farming on the high plains tends to stress the soil, as evidenced by the US Dust Bowl and the USSR version in the 1960s; land use cools the "Global" land.

Inverting the Houghton land use carbon data seems to produce an unusual correlation with the most recent three decades of "Carbon Pollution". Of course, the data is not suited for the purpose I used it for, and obviously further study is required, which is climate science code for SEND MO MONEY!
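For anyone checking this kind of comparison, it helps to remember what "inverting" does to a correlation: multiplying a series by -1 flips the sign of the Pearson r and leaves its magnitude alone. So a strong correlation with the inverted Houghton series is the same thing as a strong negative correlation with the original, which is the sign question raised above. A minimal sketch with hypothetical function names and no actual Houghton or BEST data:

```python
import math


def pearson(a, b):
    """Pearson correlation coefficient of two equal-length series."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / math.sqrt(va * vb)


def inverted(series):
    """Flip the sign of every value, as when plotting a series upside down."""
    return [-v for v in series]
```

Any relationship you find this way still needs a lag check and a check for shared trends, since two series that both drift over three decades will correlate whether or not one drives the other.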

But I thought some might get a chuckle out of the possibility that climate scientists have screwed up yet again because of their fanatical Carbon done it bias.

It is Greenhouses all the way Down

Dr. Roy Spencer is doing a redo of the redos of the Wood greenhouse experiment. It would seem that after more than 100 years of greenhouse gas theory and more than 100 years of actual greenhouse use, people would tire of reinventing the wheel, so to speak. One of the problems Dr. Spencer will have is that his greenhouse experiment will be conducted in a greenhouse. Wood had the same problem and installed a layer of glass above his experiment, so Wood had a greenhouse in a greenhouse in a greenhouse. The drawing above attempts to illustrate one of the atmosphere's greenhouses, the Atmospheric Boundary Layer (ABL).

Solar in yellow on the left is based on our current best estimate of the actual average annual energy absorbed during the day portion of our 24 hour day. The orange on the right is based on our current best estimate of the purely thermal or infrared portion of the energy transfer during the night portion of our 24 hour day. The blue lines sloping down represent the ABL.

While the total energy absorbed in the ABL by all sources is large, the net energy, what really matters, averages about 88 Wm-2, or about the 24 hour average of the latent energy transferred from the actual surface (the floor of the ABL greenhouse) to the roof of the greenhouse (the ABL capping layer). You should notice that I have divided the net energy of the ABL into 39 up and 39 down with a question mark in the middle. That question mark is about 20 Wm-2, or about the range of estimates for the total latent energy transferred to the atmosphere, 78 Wm-2 to 98 Wm-2. That is how well we understand our atmosphere.

This graphic is borrowed from an Ohio State University server which unfortunately doesn't have a good attribution. The author remains nameless so far, though he does thank Nolan Atkins, Chris Bretherton and Robin Hogan in the at least three versions that pop up while Googling "atmospheric boundary layer inversion capping". It is a neat graphic that I plan to critique in an educational way. "The Stable boundary layer has stable stability" is something that might be better worded, but it makes a fair tongue twister. There, critique over. I need to be careful because the course is on lightning, and I don't want sparks flying because of my questionable use of intellectual property.

The PowerPoint presentation that this graphic was lifted from looks like it has good potential to simplify the explanation of the complex processes that take place in the ABL convective mixing layer, so I hope the author fine-tunes and publishes it, since most of the other discussions I have seen are dry as all hell.

The gist of the graphic for my use right now is the transition from an entrainment capping layer to an inversion capping layer. During the day mode, moist air rises with convection, mixing the layer between the surface and the capping layer. As the moisture begins to condense, buoyancy decreases at a stable capping surface, where further cooling, mainly radiant, increases the air density. That leads to subsidence, sinking air that replaces the mass that convected from the surface. As night falls, the thermal energy driving the convective mixing decreases, leaving an inversion layer that produces the true surface greenhouse effect. For those less than radiantly inclined, the temperature of the roof is greater than the air above and below it, producing a higher than "normal" sink temperature for the greenhouse floor source. The ABL "roof" gets most of its energy from solar, the 150, and from the floor's latent and sensible energy, the 224, in the first drawing.

The reason I have "Day" values for the solar powered portion is that the ABL can only contain so much energy. The ABL expands upward and outward, increasing the effective surface area and allowing greater heat loss to the "space"/atmosphere above the ABL capping layer. That expansion can be towering deep convection in summer or just clear skies with a little haze, but the ABL "envelope" expands in the day and contracts at night, producing what is known as a residual layer between the entrainment layer and the stable nocturnal layer. If there are advective, or horizontal, winds below the capping layer, the impact changes. A sea breeze is a good example that tends to cool sometimes and warm at others, lake effect is another, and then you can have massive fronts move through which can destroy the ABL stability layers altogether. Considering all those possibilities is a challenge, so let's stick with a static version so heads don't explode.

If you look at the orange arrow with the 59 and the thin 20 arrow, that is the current best estimate of annual average radiant surface cooling, where that surface is the floor of our greenhouse. That 20 Wm-2 represents the imperfection of our greenhouse. If the average final, or lowest, floor energy is 335 Wm-2, which would be a temperature of about 4 C degrees, then the greenhouse floor would start the night at about 355 Wm-2, about 8C, and ramp down to about 4C, producing a 4C range of temperature. If the 20 Wm-2 were less, the range would be less, and either the start temperature would be lower or the end temperature higher. Since the ABL has a limited capacity, the initial temperature would tend to be about the same and the final temperature would increase, reducing the diurnal temperature range of our ABL greenhouse. If the 20 Wm-2 window were completely shut, the capacity of the ABL would still limit the total amount of initial warmth; only the diurnal temperature range would approach zero.

Now let's imagine we reduce the 20 Wm-2 by 4 Wm-2. Our final energy would be about 339 Wm-2, which has an effective temperature of 4.9C, or about 0.8 to 0.9 C warmer than the less efficient ABL greenhouse at the lowest point of operation. That increased efficiency would likely increase the latent heat loss a little during the day mode, increasing the initial temperature of the ABL greenhouse by about 0.8C. The overall impact would be about 0.8C warmer conditions or less. Nothing is perfectly efficient, so the 0.8-0.9 C per 4 Wm-2 would be an upper limit for the ABL greenhouse.
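The flux-to-temperature conversions behind the last two paragraphs, treating the greenhouse floor as a blackbody (the same simplification the text makes). The function and variable names are hypothetical.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4


def flux_to_celsius(flux_w_m2):
    """Effective blackbody temperature in C for a flux in W m^-2."""
    return (flux_w_m2 / SIGMA) ** 0.25 - 273.15


floor_end = flux_to_celsius(335.0)       # lowest floor energy, ~4 C
floor_start = flux_to_celsius(355.0)     # start of the night, ~8 C
reduced_window = flux_to_celsius(339.0)  # 20 W m^-2 window narrowed by 4, ~4.9 C

print(f"night range: {floor_start - floor_end:.1f} C")
print(f"warming at the low point: {reduced_window - floor_end:.2f} C per 4 W m^-2")
```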

The ABL greenhouse, though, is inside a free atmosphere greenhouse. Adding the 4 Wm-2 at the ABL roof may impact the performance of the free atmosphere greenhouse. What would keep the free atmosphere greenhouse from impacting the ABL greenhouse and vice versa? When the roof of the ABL greenhouse is produced by water vapor, which has a limited temperature of condensation and a set freezing point, adding energy doesn't change the temperature, only the rate of energy transfer. The ABL volume has a limited heat capacity, so convective mixing would increase with added energy, expanding the ABL envelope more or producing more deep convection loss if the ABL capping layer becomes less stable.

So why is the globally averaged annual floor of the ABL greenhouse about 335 Wm-2, or about 4 C degrees? If the roof is limited by the condensation or freezing point of fresh water, 0C or about 315 Wm-2, and the efficiency of the ABL greenhouse is limited by the 20 Wm-2 of window radiant energy, what else would it be?

Solar in yellow on the left is based on our current best estimate of the actual average annual energy absorbed during the day portion of our 24 hour day. The orange on the right is based on our current best estimate of the purely thermal or infrared portion of the energy transfer during the night portion of our 24 hour day. The blue lines sloping down represent the ABL.

While the total energy absorbed in the ABL by all sources is large, the net energy, what really matters, averages about 88Wm-2 or about the amount of the 24 hour average of the latent energy transferred from the actual surface, the floor of the ABL greenhouse to the roof of the greenhouse the ABL capping layer. You should noticed that I have divided the net energy of the ABL into 39 up and 39 down with a question mark in the middle. That question mark is about 20 Wm-2 or about the range of estimates for the total latent energy transferred to the atmosphere, 78Wm-2 to 98 Wm-2. That is how well we understand our atmosphere.

This graphic is borrowed from an Ohio State University server which unfortunately doesn't have a good attribution. The author remains nameless so far, though he does thank Nolan Atkins, Chris Bretherton and Robin Hogan in the at least three versions that pop up while Googling atmospheric boundary layer inversion capping. It is a neat graphic that I plan to critique in an educational way. "The Stable boundary layer has stable stability" is something that might be better worded, but it makes a fair tongue twister. There, critique over. I need to be careful because the course is on lightning, and I don't want sparks flying because of my questionable use of intellectual property.

The PowerPoint presentation that this graphic was lifted from looks like it has good potential to simplify the explanation of the complex processes that take place in the ABL convective mixing layer, so I hope the author fine-tunes and publishes it, since most of the other discussions I have seen are dry as all hell.

The gist of the graphic for my use right now is the transition from an entrainment capping layer to an inversion capping layer. During the day mode, moist air rises with convection, mixing the layer between the surface and capping layers. As the moisture begins to condense, buoyancy decreases at a stable capping surface where further cooling, mainly radiant, increases the air density, which leads to subsidence, a sinking air mass that replaces the mass that convected up from the surface. As night falls, the thermal energy driving the convective mixing decreases, leaving an inversion layer that produces the true surface greenhouse effect. For those less than radiantly inclined, the temperature of the roof is greater than that of the air above and below it, producing a higher than "normal" sink temperature for the greenhouse floor source. The ABL "roof" gets most of its energy from solar, the 150 in the first drawing, and from floor latent and sensible energy, the 224.

The reason I have "Day" values for the solar powered portion is that the ABL can only contain so much energy. The ABL expands upward and outward, increasing the effective surface area and allowing greater heat loss to the "space"/atmosphere above the ABL capping layer. That expansion can be towering deep convection in summer or just clear skies with a little haze, but the ABL "envelope" expands in the day and contracts at night, producing what is known as a residual layer between the entrainment layer and the stable nocturnal layer. If there are advective, or horizontal, winds below the capping layer, the impact changes. A sea breeze is a good example that tends to cool sometimes and warm at others, lake effect is another, and then you can have massive fronts move through which can destroy the ABL stability layers altogether. Considering all those possibilities is a challenge, so let's stick with a static version so heads don't explode.

If you look at the orange arrow with the 59 and the thin 20 arrow, that is the current best estimate of annual average radiant surface cooling, where that surface is the floor of our greenhouse. That 20 Wm-2 represents the imperfection of our greenhouse. If the final, or lowest, average floor energy is 335 Wm-2, which would be a temperature of about 4 C degrees, then the greenhouse floor would start the night at about 355 Wm-2, about 8C, and ramp down to about 4C, producing a 4C range of temperature. If the 20 Wm-2 were less, the range would be less, and either the start temperature would be lower or the end temperature higher. Since the ABL has a limited capacity, the initial temperature would tend to be about the same and the final temperature would increase, reducing the diurnal temperature range of our ABL greenhouse. If the 20 Wm-2 window were completely shut, the capacity of the ABL would still limit the total amount of initial warmth; only the diurnal temperature range would approach zero.
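The flux-to-temperature conversions in this paragraph follow from the Stefan-Boltzmann law. Here is a minimal sketch, assuming the greenhouse floor and roof radiate as ideal blackbodies (emissivity 1), which the text implies but does not state; the function names are illustrative, not from the original.

```python
# Convert between radiant flux (W m-2) and effective blackbody temperature,
# assuming emissivity = 1 so that F = sigma * T**4 (Stefan-Boltzmann law).
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m-2 K-4
KELVIN_OFFSET = 273.15


def flux_to_celsius(flux_wm2: float) -> float:
    """Effective blackbody temperature (deg C) for a flux in W m-2."""
    return (flux_wm2 / SIGMA) ** 0.25 - KELVIN_OFFSET


def celsius_to_flux(temp_c: float) -> float:
    """Blackbody flux (W m-2) for a temperature in deg C."""
    return SIGMA * (temp_c + KELVIN_OFFSET) ** 4


if __name__ == "__main__":
    # Values discussed in the text: the roof near the freezing point and
    # the floor at the end and the start of the night.
    cases = [("roof (freezing point)", 315.0),
             ("floor, end of night", 335.0),
             ("floor, start of night", 355.0)]
    for label, flux in cases:
        print(f"{label:>22}: {flux:5.1f} W m-2 -> {flux_to_celsius(flux):5.2f} C")
    night_range = flux_to_celsius(355.0) - flux_to_celsius(335.0)
    print(f"night-time temperature range: {night_range:.2f} C")
```

Run as written, it gives roughly 0C, 4C and 8C for 315, 335 and 355 Wm-2, in line with the figures above.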

Now let's imagine we reduce the 20 Wm-2 by 4 Wm-2. Our final energy would be about 339 Wm-2, which has an effective temperature of 4.9C, or about 0.8 to 0.9 C warmer than the less efficient ABL greenhouse at the lowest point of operation. That increased efficiency would likely increase the latent heat loss a little during the day mode, increasing the initial temperature of the ABL greenhouse by about 0.8C. The overall impact would be about 0.8C warmer conditions or less. Nothing is perfectly efficient, so the 0.8 to 0.9 C per 4 Wm-2 would be an upper limit for the ABL greenhouse.
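The 0.8 to 0.9 C per 4 Wm-2 figure can be checked two ways: exactly, by converting 335 and 339 Wm-2 to temperatures, and with the usual first-order (linearized) Stefan-Boltzmann sensitivity dT = dF / (4 sigma T^3). This is a sketch that assumes blackbody emission; the function names are illustrative.

```python
# Compare the exact and linearized blackbody temperature response to a small
# change in flux, assuming emissivity = 1.
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m-2 K-4


def temp_kelvin(flux_wm2: float) -> float:
    """Blackbody temperature (K) for a flux in W m-2."""
    return (flux_wm2 / SIGMA) ** 0.25


def exact_warming(flux0: float, dflux: float) -> float:
    """Temperature change (K) when the flux goes from flux0 to flux0 + dflux."""
    return temp_kelvin(flux0 + dflux) - temp_kelvin(flux0)


def linear_warming(flux0: float, dflux: float) -> float:
    """First-order estimate dT = dF / (4 sigma T^3), equivalently T * dF / (4 F)."""
    t0 = temp_kelvin(flux0)
    return dflux / (4.0 * SIGMA * t0 ** 3)


if __name__ == "__main__":
    f0, df = 335.0, 4.0  # floor flux and the 4 Wm-2 reduction in window loss
    print(f"exact:      {exact_warming(f0, df):.3f} K")
    print(f"linearized: {linear_warming(f0, df):.3f} K")
```

Both come out a little above 0.8 K. For a change this small, the linear estimate is within a few thousandths of a degree of the exact one.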

Tuesday, August 20, 2013

It Depends on How Hard You Look - Golden Ratio

The Golden Ratio is at the boundary of Science and Pseudo-science. A. M. Selvam has published quite a body of work on self-organized criticality and non-linear dynamics using the Golden "mean" and the Penrose tiling pattern to "predict" patterns in just about everything. Her work tends to make sense even though it shouldn't. In my opinion, it does because there are simply more natural patterns that can be reduced to irrational numbers than to rational whole numbers. We tend to think in whole numbers; nature doesn't. Not everything in nature falls into a Golden ratio pattern, and there is no reason everything should, but there is some logic to why the Golden ratio at least appears to dominate, along with Pi, e and various roots. Nature doesn't do base 10.

Pi, e and various roots are commonly used to describe things in nature that are more common or ordered like circles, spheres, decay curves because we live in a "not a box" universe. Phi, the Golden ratio, is a bit like the red haired stepchild of the irrational number gang though.

The probable reason is Phi doesn't exactly fit anything. It is a close mean to a lot of things but it is inexact. Natural arches on Earth vary around the Golden mean and if every material had the same strength the Golden mean would be irrelevant. The Golden mean nearly fits more things on Earth and in the Solar system than it would if we lived around some other star. Fate makes the Golden mean somewhat relevant to us Earthlings.

Fate is not a scientific term, but chance is. The probability or chance of some object forming that has some approximation of a Golden dimension is greater than "normal". If you look hard enough you can find more examples. If you want to dispute the significance of Phi you can find plenty of examples to call Phi Phans nuts.

If I were to say that Earth is not a perfect sphere, folks would agree, but Pi is here to stay even though it is not a perfect fit in nature, because it is a closer fit to nature. When we need a more "exact" measure, Pi is the guy. But when there is more irregularity we get all flustered and forget that Phi is a better general fit for all the stuff that isn't as ordered as a sphere or a curve influenced by gravity or the inverse square law. We need that order and better "fits" between our perception of nature and our construction of math. Had we kept base 60 as our math concept, Phi would be Phine. With base 10 and metrics, our perception of the universe just gets more biased toward a nonexistent "normal". US units of measure, inches, feet, furlongs, bushels and pecks, actually fit what we perceive instead of forcing things to fit, plus they require some thought, which is a good thing.

So let's think about Phi, 1.6180339887.... That is close to Pi/2, 1.5707963268..., within about 3 percent. Earth's orbit isn't perfect either; its distance from the Sun varies by about 3 percent.

What about e, 2.718281828...? Since Phi = (1+5^0.5)/2, Phi plus 1 (which is also Phi squared) = 2.6180339887..., about 3.7% less than e; put the other way, e is about 3.8% more than Phi plus 1.
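The percentages in the last two paragraphs are easy to check. Here is a short sketch that uses only Python's standard math module. The helper name is illustrative.

```python
import math

PHI = (1 + math.sqrt(5)) / 2  # golden ratio, ~1.6180339887


def percent_diff(a: float, b: float) -> float:
    """Percent by which a exceeds b (negative if a is smaller than b)."""
    return (a / b - 1.0) * 100.0


if __name__ == "__main__":
    print(f"Phi          = {PHI:.10f}")
    print(f"Phi vs Pi/2  : {percent_diff(PHI, math.pi / 2):+.2f} %")
    # Phi + 1 equals Phi squared, a defining property of the golden ratio.
    print(f"Phi + 1      = {PHI + 1:.10f} (Phi**2 = {PHI ** 2:.10f})")
    print(f"e vs Phi + 1 : {percent_diff(math.e, PHI + 1):+.2f} %")
    print(f"Phi + 1 vs e : {percent_diff(PHI + 1, math.e):+.2f} %")
```

The sign matters: Phi plus 1 falls short of e, so the roughly 3.8% figure is how much larger e is, not how much larger Phi plus 1 is.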

Phi is a pretty decent approximation for most everything but not exactly equal to anything. How can you not "see" Phi relationships in nature and how can you deny Phi is relevant to nature? It is right on the boundary of science and pseudoscience.

Math purists, who tend to be extremely anal, will devise hundreds of statistical methods to allow for the uncertainty in nature that is built into fractals approximated with Phi. Over billions of years of random erosion, adaptation and evolution, things are not perfect and never will be; Phi is just a good estimate of the mean degree of imperfection.

Now which is the pseudoscience?

Monday, August 19, 2013

Sea Dog Wagging the BEST Tail

The Berkeley Earth Surface Temperature (BEST) project has updated and is still updating its site. A discussion of the pluses and minuses of the revised products is going on at Climate Etc., among other places, and has finally brought the issue of Land Amplification to the forefront. The minions of the Great and Powerful Carbon tend to miss the subtle issues of a "Global" temperature series made up mainly of land located in the northern hemisphere, and of what drives the temperatures of most of the land in that land-only data set: the northern oceans. So in yet another soon-to-fail attempt, I have plotted the Reynolds OIv2 SST for the Northern Extra Tropical oceans from 24N to 70N latitude. This time, though, I thought showing the BEST Tmax and Tmin instead of Tave might be better.

Since the OIv2 data only starts in November 1981 and has seasonal variation that needs to be removed to see the ~0.4 C shift starting in 1985, thanks to volcanic forcing plus a Pacific Decadal Oscillation (PDO) mode swing, I used the monthly averages for the 31 full years common to the data sets for the baseline and seasonal cycle removal. That should be pretty clear to anyone who halfway follows the surface temperature debate. BEST, being predominately northern hemisphere data, has a strong northern hemisphere seasonal cycle. While my approach may be less than ideal, at least there are 31 years and I explained what I did.
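The baseline and seasonal cycle removal described in this paragraph amounts to subtracting each calendar month's mean over a reference period. This is a minimal sketch that assumes consecutive monthly values with no gaps. The function name and the toy series are hypothetical. For this post the reference years would presumably be the 31 full years from 1982 through 2012.

```python
from statistics import mean


def monthly_anomalies(values, start_year, start_month, base_start, base_end):
    """Remove the mean seasonal cycle from a monthly series.

    values: consecutive monthly values starting at (start_year, start_month).
    base_start, base_end: inclusive range of years used for the climatology.
    Returns a list of (year, month, anomaly) tuples.
    """
    # Attach a (year, month) date to every value.
    dated = []
    year, month = start_year, start_month
    for value in values:
        dated.append((year, month, value))
        month += 1
        if month > 12:
            month, year = 1, year + 1

    # Mean of each calendar month over the baseline years.
    climatology = {}
    for cal_month in range(1, 13):
        base = [v for (y, m, v) in dated
                if m == cal_month and base_start <= y <= base_end]
        if not base:
            raise ValueError(f"no baseline data for month {cal_month}")
        climatology[cal_month] = mean(base)

    # Anomaly = value minus that calendar month's baseline mean.
    return [(y, m, v - climatology[m]) for (y, m, v) in dated]


if __name__ == "__main__":
    # Toy series: a repeating seasonal cycle plus a 0.4 step in the third year.
    seasonal = [float(k) for k in range(1, 13)]
    series = seasonal + seasonal + [x + 0.4 for x in seasonal]
    for y, m, a in monthly_anomalies(series, 1982, 1, 1982, 1983)[::6]:
        print(y, m, round(a, 2))
```

In the toy run, the seasonal cycle vanishes and only the 0.4 step in the third year survives. That is the kind of shift the seasonal removal is meant to expose.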

Pretty much anyone, other than the minions of the Great and Powerful Carbon, should notice that there is a strong correlation between the satellite measured northern extra tropical oceans and the BEST "Global" land surface temperature data. Most would notice that the correlation is better with the Tmin than the Tmax. Most would notice that the northern extra tropical oceans are curving back toward the mean prior to both the BEST Tmin and Tmax.
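The "eyeball" correlations can be turned into numbers with a Pearson correlation coefficient. This is a minimal sketch that assumes the SST, Tmin and Tmax anomaly series have already been aligned month by month. The function names are hypothetical, and the numbers in the example are made-up placeholders, not BEST or OIv2 data.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length series with at least two points")
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def compare_with_sst(sst, tmin, tmax):
    """Correlation of an SST anomaly series with land Tmin and Tmax anomalies."""
    return {"Tmin": pearson(sst, tmin), "Tmax": pearson(sst, tmax)}


if __name__ == "__main__":
    # Made-up placeholder anomalies, for illustration only.
    sst = [0.0, 0.1, 0.05, 0.2, 0.3, 0.25]
    tmin = [0.0, 0.2, 0.1, 0.4, 0.6, 0.5]
    tmax = [0.1, 0.0, 0.2, 0.1, 0.4, 0.3]
    print(compare_with_sst(sst, tmin, tmax))
```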

The minions of the Great and Powerful Carbon manage to have the land data tail wagging the sea dog when the opposite would appear to be the case. As I have pointed out before, Land Amplification should be considered, and because of land amplification the northern Atlantic region (AMO) makes a damn good proxy for "Global" land and ocean "surface" temperatures. That means the Central England Temperature record makes a pretty good proxy for "Global" "surface" temperature, and since the Indo-Pacific Warm Pool correlates well with the longer term average north Atlantic SST, the Indo-Pacific Warm Pool makes another pretty damn good proxy for "Global" "Surface" temperature anomaly. All because of land amplification.

Figure 53. This figure shows the amplification ratio between 30N and 60N (roughly from the middle of Texas to the bottom tip of Greenland) compared to the amplification ratio in the Arctic. The observed value from Berkeley Earth is shown in red and the GCMs are in blue. The GCMs tend to overestimate the amplification at the pole and underestimate the amplification in the mid-latitudes.

Remarkable isn't it?

UPDATE:

While we are at it let's consider some baseline dependence issues.

Since roughly 1990, the instrumentation for the surface stations has been various digital sensors. The sensors required a good deal of adjustment to produce reliable data. Unlike analog liquid-in-glass thermometers, the digital instruments can tend to have a bias, typically on the warm side. There is no reason to believe that the adjustments didn't correct things, but the adjustments came at an inopportune time. Since the surface and satellite instrumental records generally agree, a modern era baseline should be as good as, if not better than, an arbitrary baseline. The plot above uses the same baseline as the first graphic, with the NOAA ERSSTv3 20N-90N data that is readily available at this site. This time seasonality was not removed, which may be a minor issue.

With this baseline, BEST Tmin starts at the lowest initial condition, with Tmax more closely tracking the northern extra tropical SST. The "Global" diurnal temperature range trend would have decreased until circa 1980, then started increasing roughly around 1985. From the start of the data until about 1960 the majority of the warming was due to Tmin; after that, more uniform warming took place. Just a different view of the same old data.
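The diurnal temperature range (DTR) is simply Tmax minus Tmin, and its trend over a window can be estimated with ordinary least squares. This sketch rests on a few assumptions: annual values, hypothetical function names, and toy inputs. If Tmax and Tmin are both anomalies, their difference is the change in DTR relative to the baseline rather than the absolute DTR. That is still enough to estimate a trend.

```python
def diurnal_range(tmax, tmin):
    """Element-wise Tmax - Tmin."""
    return [hi - lo for hi, lo in zip(tmax, tmin)]


def ols_slope(x, y):
    """Ordinary least-squares slope of y on x."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length series with at least two points")
    mx, my = sum(x) / len(x), sum(y) / len(y)
    num = sum((a - mx) * (b - my) for a, b in zip(x, y))
    den = sum((a - mx) ** 2 for a in x)
    return num / den


def dtr_trend(years, tmax, tmin, start, end):
    """DTR trend (degrees per year) over the inclusive window start..end."""
    pairs = [(yr, d) for yr, d in zip(years, diurnal_range(tmax, tmin))
             if start <= yr <= end]
    return ols_slope([p[0] for p in pairs], [p[1] for p in pairs])


if __name__ == "__main__":
    # Toy example: flat Tmax, Tmin warming 0.1 per year, so DTR shrinks.
    years = list(range(1950, 1960))
    tmax = [10.0] * len(years)
    tmin = [0.1 * i for i in range(len(years))]
    print(f"DTR trend: {dtr_trend(years, tmax, tmin, 1950, 1959):+.3f} per year")
```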

Sunday, August 18, 2013

The Madness of Reductionist Methods

This is a comparison of the Steinhilber et al. 2009 Holocene TSI reconstruction with the Oppo et al. 2009 Indo-Pacific Warm Pool temperature reconstruction. While there is a correlation just eyeballing, there are lots of exceptions that would need to be explained by "other" stuff or lags, so it is not the pretty correlation reductionists require.

Comparing the TSI reconstruction to the Holocene portion of the Monnin et al. 2001 Antarctic Dome C CO2 reconstruction, there is also a less than ideal eyeball correlation. The same explanations for "other" and lags would be required, but CO2 started rising around 7000 years ago, and so did the reconstructed Total Solar Irradiance, near the same period.
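One way to make "lags" less hand-wavy is to compute the correlation at a range of lags and see where it peaks. This is a minimal sketch that assumes both reconstructions have already been resampled onto the same evenly spaced time steps, which the proxies themselves are not. The function names and the toy series are hypothetical.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def lagged_correlation(driver, response, max_lag):
    """Correlation of response[t] with driver[t - lag] for lag = 0..max_lag.

    A positive lag means the response trails the driver by that many steps.
    """
    if len(driver) != len(response):
        raise ValueError("series must be the same length")
    n = len(driver)
    return {lag: pearson(driver[: n - lag], response[lag:])
            for lag in range(max_lag + 1)}


def best_lag(driver, response, max_lag):
    """Lag (in time steps) with the highest correlation."""
    corr = lagged_correlation(driver, response, max_lag)
    return max(corr, key=corr.get)


if __name__ == "__main__":
    # Toy series: the response is the driver delayed by two steps.
    driver = [0.0, 1.0, 0.0, 2.0, 0.0, 3.0, 1.0, 0.0, 4.0, 1.0, 0.0, 2.0]
    response = [0.0, 0.0] + driver[:-2]
    for lag, r in lagged_correlation(driver, response, 4).items():
        print(f"lag {lag}: r = {r:+.3f}")
    print("best lag:", best_lag(driver, response, 4))
```

With real proxies, there are many candidate lags and the series are noisy. A peak found this way shows where the correlation is strongest, not what causes it.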

Because the reductionist models do not allow for longer term impacts due to cumulative heat transfer between various complex ocean layers with variable inertia, solar, of course, cannot have a significant impact, while diffusion into and out of simple slab models can be used to "explain" hitches in the reductionist model correlations.

You could call this blinded by the Enlightenment. The 10Be isotope used to construct the Holocene TSI is collected at the true surface of the Earth, in the Steinhilber et al. case the Greenland Glacier, and the CO2 reconstruction comes from the Antarctic ice cap. The general correlation would be indicative of "Global" forcing and the exceptions of internal variability between the poles. There is no simple reductionist "fit" because the systems, Earth and the Solar system plus Earth's atmosphere and oceans, have their own response times and "Sensitivities". So this solar precessional cycle impact, which could include actual Top of the Atmosphere TSI, TSI incidence angle, atmospheric and ocean tidal forces, volcanic forcing and ocean heat transport delays as well as atmospheric forcings, provides enough degrees of freedom to paint any picture of past climate one wishes.

The reductionists are convinced their way is best even though their model of Earth climate requires constant "adjustment" to appear to be useful. Like a bulldog they cling to an "elegant" theory and ignore the growing number of paradoxes created by that theory.

To explain away the Solar CO2 relationship for the past 7000 years they only have to select enough noisy proxies to average away any solar signal. Instead of looking for the more difficult to answer correlations, they just avoid them.

Thursday, August 15, 2013

That "Benchmark" Thing

I have discussed the "Benchmark" used by Science of Doom a number of times. The "Benchmark" is the estimated Planck response, or zero feedback sensitivity, for the "surface" temperature of Earth. From the Science of Doom post, Measuring Sensitivity:

If somehow the average temperature of the surface of the planet increased by 1°C – say due to increased solar radiation – then as a result we would expect a higher flux into space. A hotter planet should radiate more. If the increase in flux = 3.3 W/m² it would indicate that there was no negative or positive feedback from this solar forcing (note 1).

Suppose the flux increased by 0. That is, the planet heated up but there was no increase in energy radiated to space. That would be positive feedback within the climate system – because there would be nothing to “rein in” the increase in temperature.

Suppose the flux increased by 5 W/m². In this case it would indicate negative feedback within the climate system.

The key value is the “benchmark” no feedback value of 3.3 W/m². If the value is above this, it’s negative feedback. If the value is below this, it’s positive feedback.
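The classification rule quoted above can be written as a small function. In this sketch the 3.3 W/m² per K benchmark is the quoted value, while the function name and the tolerance parameter are illustrative additions.

```python
BENCHMARK_WM2_PER_K = 3.3  # quoted "no feedback" benchmark, W m-2 per K


def classify_feedback(delta_flux_wm2: float, delta_temp_k: float,
                      benchmark: float = BENCHMARK_WM2_PER_K,
                      tol: float = 1e-9) -> str:
    """Compare the outgoing-flux response per degree of warming to the benchmark."""
    if delta_temp_k <= 0:
        raise ValueError("expects a warming, delta_temp_k > 0")
    response = delta_flux_wm2 / delta_temp_k  # W m-2 per K
    if abs(response - benchmark) <= tol:
        return "no feedback"
    # More flux to space than the benchmark reins the warming in.
    return "negative feedback" if response > benchmark else "positive feedback"


if __name__ == "__main__":
    for flux in (3.3, 0.0, 5.0):  # the three cases in the quote, for a 1 K warming
        print(f"{flux} W/m2 per 1 K -> {classify_feedback(flux, 1.0)}")
```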

Without adding mass to the atmosphere, there is no reason to expect that "Benchmark" to change, but how exactly was that "benchmark" value derived?

For a planet to exist, energy in has to equal energy out; in simple terms, work has to equal entropy if you think of a Carnot Engine that can be perpetually stable. If there is more than one stage, the entropy of the first process could be used by a second process, leading to a variety of efficiency combinations. The "surface" then could not be perfectly 50% efficient (Ein = Eout) if any of the surface input energy is used by a second stage.

That leads, interestingly, to three states: less than 50% efficiency, most likely; greater than 50% efficiency, not very likely; and 50% ideal efficiency, impossible. So what does this have to do with the "benchmark"?

This is a plot of the required specific gas constant of an atmosphere for a stable planet. If a "surface" transfers waste energy to the atmosphere, the atmosphere will need to find a happy specific heat content or some portion of the atmosphere will erode to space. I used 1 for density and 1000 millibars for barometric pressure. This is simply the ideal gas law, solved for R(specific) with respect to temperature at constant pressure. I stopped the plot at 300K, which results in an R(specific) of 3.3 with units that should be Joules/K, which if I use time t=1 sec is equivalent to W-Sec/K. The standard value for R is 8.3 J/mol-K, where mol is moles of gas. So for 300K, there would be approximately 3.3/8.3=0.39 moles per cubic meter if the desired units are Wm-2/K for the "benchmark" sensitivity for an ideal gas at 1000 millibars with a density equal to one unit. Earth happens to have a surface pressure close to 1000 millibars, and the density of dry air at 300K (about 27C) and 1000 millibars is about 1.16 kg/cubic meter.

Earth's surface doesn't have much "dry" air, and adding moisture to air actually decreases its density since H2O is lighter than O2 and N2. The actual surface air density at 300K would be a little less than 1.16 kg/cubic meter.
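The normalized calculation can be reproduced with the ideal gas law. This sketch uses the post's inputs: 1000 millibars and a density of 1 unit. The dry-air gas constant of 287.05 J/(kg K) is a standard value added here for the density check, and the function names are illustrative. With unrounded constants, the 300 K ratio to the universal gas constant comes out at about 0.40. The last two printed lines contrast dry air density at 300 K with that at 300 C, a common trap when mixing the two temperature scales.

```python
# Ideal gas law P = rho * R_specific * T, solved for R_specific, using the
# post's normalized inputs (P = 1000 millibars, density = 1 unit).
R_UNIVERSAL = 8.314  # J mol-1 K-1
R_DRY_AIR = 287.05   # J kg-1 K-1, specific gas constant of dry air


def r_specific(pressure: float, density: float, temp_k: float) -> float:
    """R_specific = P / (rho * T); the units follow whatever units are passed in."""
    return pressure / (density * temp_k)


def dry_air_density(pressure_pa: float, temp_k: float) -> float:
    """Density of dry air (kg m-3) from the ideal gas law."""
    return pressure_pa / (R_DRY_AIR * temp_k)


if __name__ == "__main__":
    for t in (250.0, 275.0, 288.0, 300.0):
        print(f"T = {t:5.1f} K -> R_specific = {r_specific(1000.0, 1.0, t):.2f}")
    r300 = r_specific(1000.0, 1.0, 300.0)
    print(f"R_specific / R at 300 K: {r300 / R_UNIVERSAL:.3f}")
    print(f"dry air, 1000 mb, 300 K:    {dry_air_density(100000.0, 300.0):.3f} kg m-3")
    print(f"dry air, 1013.25 mb, 300 C: {dry_air_density(101325.0, 573.15):.3f} kg m-3")
```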

Using the "Benchmark" value, increasing "surface" forcing by 3.7 Wm-2 would produce a 1.12 C increase in temperature, but the "benchmark" assumes zero feedback. With the "benchmark" limited by the specific heat capacity of air, any increase in surface temperature has to have a negative feedback if the surface efficiency is less than 50 percent, meaning more energy is lost to the upper atmosphere and space than is gained by the surface.
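The 1.12 C figure is just the forcing divided by the benchmark. Here is a trivial sketch, with a hypothetical function name.

```python
# Zero-feedback warming implied by a "benchmark" Planck response:
# delta_T = delta_F / lambda, with lambda in W m-2 per K.
def zero_feedback_warming(forcing_wm2: float, benchmark_wm2_per_k: float = 3.3) -> float:
    if benchmark_wm2_per_k <= 0:
        raise ValueError("benchmark must be positive")
    return forcing_wm2 / benchmark_wm2_per_k


if __name__ == "__main__":
    forcing = 3.7  # W m-2, the forcing used in the text
    print(f"{zero_feedback_warming(forcing):.2f} C")  # prints "1.12 C"
```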

The other case that can exist is where more work is performed at the surface than entropy: Venus. The surface density of Venus' atmosphere is about 67 times greater than 1, and the surface pressure is about 92 times greater than 1000 millibars. With a surface temperature of 740K, the Venusian "benchmark" would be about 1.8 Wm-2/K, roughly twice as efficient at retaining heat as Earth. So if Earth is 39% efficient, 22% worse than 50%, then Venus should be 61% efficient, 22% greater than 50%. If you compare the geometric albedo of both planets, Earth is ~37% and Venus ~67%, with both having other energy inputs, geothermal, rotational, tidal etc. Earth's is lower, likely because at some time in its past it had an average surface temperature of 300K or greater, causing loss of atmospheric mass, while Venus' denser atmosphere retained and likely gained atmospheric mass.
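The Venus numbers follow the same normalized ideal gas calculation as the Earth value, and the 39%/61% pairing is a mirror image about 50%. This sketch uses the ratios quoted in the paragraph, and the function names are illustrative.

```python
# The post's normalized "benchmark": R_specific = P / (rho * T), with Earth at
# P = 1000 (millibars), rho = 1, T = 300 K and Venus scaled by the quoted ratios.
def r_specific(pressure: float, density: float, temp_k: float) -> float:
    return pressure / (density * temp_k)


def percent_from_half(efficiency: float) -> float:
    """How far an efficiency sits above (+) or below (-) 50%, in percent of 50%."""
    return (efficiency - 0.5) / 0.5 * 100.0


if __name__ == "__main__":
    earth = r_specific(1000.0, 1.0, 300.0)
    venus = r_specific(92 * 1000.0, 67 * 1.0, 740.0)  # ~92x pressure, ~67x density
    print(f"Earth benchmark: {earth:.2f}, Venus benchmark: {venus:.2f}")
    print(f"ratio Earth/Venus: {earth / venus:.2f}")
    for eff in (0.39, 0.61):
        print(f"{eff:.0%} efficient -> {percent_from_half(eff):+.0f}% relative to 50%")
```

The Earth/Venus ratio comes out near 1.8, which is the "roughly twice" in the text.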

It is interesting in any case that the Earth "benchmark" sensitivity appears to be estimated for a surface temperature of ~300K, about 27C, or roughly 10C greater than the current average. Since that requires greater atmospheric mass/density, we can't go back there anymore.

Tuesday, August 13, 2013

Is a Picture Worth a Trillion Dollars?

Updated with GISS Global Temperature and the Charney estimates at bottom:

Once the minions of the Great and Powerful Carbon spread their faith to the economic community, the finest Economists the world has to offer discuss the discount rate that should be justified for saving the world from the greatest peril currently rattling around in the minds of the minions. If "sensitivity" is greater than 3C, it will cost trillions of US dollars to save the world, and over $30 per ton of Carbon must be charged. If "Sensitivity" is approximately 1.6 C, the world can eke by on a measly trillion or so with Carbon taxed at about $3 per ton. If "Sensitivity" is less than 1, the whole Great and Powerful Carbon Crisis should never have existed.

When BEST first published their land only temperature reconstruction, they included a simple forcing fit: volcanic and CO2 forcing scaled to produce a simple fit to the land only data. You can download the data for their fit from their quickly improving website. Above are three "Sensitivities" with the volcanic forcing on a 1985 to 1995 baseline so that later, satellite era data can be used.
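The fits above scale temperature to forcing. This is a minimal sketch of the CO2 part only. It assumes the conventional logarithmic form, in which warming equals sensitivity per doubling times log2 of the concentration ratio, and an assumed 280 ppm reference. It also re-baselines to a 1985 to 1995 mean. The volcanic term is left out, the function names are hypothetical, and the 3.0 value is only a stand-in for the "multiple trillions" curve.

```python
import math


def co2_response(co2_ppm: float, sensitivity_c: float, co2_ref_ppm: float = 280.0) -> float:
    """Temperature response (C) = sensitivity per doubling * log2(C / C_ref).

    The 280 ppm reference is an assumed pre-industrial value.
    """
    return sensitivity_c * math.log2(co2_ppm / co2_ref_ppm)


def rebaseline(years, values, base_start=1985, base_end=1995):
    """Shift a series so its mean over base_start..base_end (inclusive) is zero."""
    base = [v for y, v in zip(years, values) if base_start <= y <= base_end]
    if not base:
        raise ValueError("no values inside the baseline period")
    offset = sum(base) / len(base)
    return [v - offset for v in values]


if __name__ == "__main__":
    # One doubling returns the sensitivity itself, by construction.
    for s in (0.8, 1.6, 3.0):
        print(f"S = {s}: response to 560 ppm = {co2_response(560.0, s):.2f} C")
```

Re-baselining every curve, along with the observations, to the same 1985 to 1995 window is what allows the different "Sensitivities" to be compared on one plot.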

Using the NOAA ERSST3 tropical ocean data (20S-20N) with the same baseline, this is the fit for three "Sensitivities": Green, nada cash; Red, a trillion dollars maybe; and Orange, multiple trillions. The 1.6C, trillion maybe, is the best fit.

Using the same "sensitivities" without the volcanic for simplicity, back to 1700 AD, 1.6 a trillion maybe is still the best fit but OMG trillions is coming on strong!

Starting at 900 AD, 1.6 C a trillion is still the best fit but nada, zilch, bagels 0.8C is making a showing, OMG TRILLIONS is falling back in the pack.

Starting at 0 AD, nada, zilch, bagels, 0.8C takes the lead for good. The minions of the Great and Powerful Carbon can smooth out the past from 1500 AD back, but not even "Dimples" Marcott can get rid of the dip near 1700 AD, the period formerly known as the little ice age. So the next time someone says they have to collect taxes for the Great and Powerful Carbon, stick this in their ear.

Since everyone seems to have favorite data to use, this one compares GISS temperature with the two original estimates offered to Jules Charney in 1979, Hansen 4C and Manabe 2C. The 0.8 C, my estimate for the more realistic lower end, is also included. The 3C mean IPCC estimate is the average of the Hansen and Manabe estimates, with an uncertainty of 0.5 C on both ends producing the original 1.5 to 4.5 C sensitivity range. The baseline is still 1985 to 1995, which is roughly the "initial" condition for the first IPCC report. Manabe's 2 C is right on time, with the 0.8 providing a reasonable lower bound and the 4C still in the hunt but showing signs of divergence based on the 1990 start date. Hindcasting, the 4C wanders out of contention, with both 0.8C and 2C maintaining contact with the instrumental record. Just in case you were curious.
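Here is the arithmetic behind the original range as this paragraph describes it, written out. The function name is illustrative.

```python
def charney_style_range(estimates, pad):
    """Central value = mean of the estimates; range = extremes widened by pad on each side."""
    center = sum(estimates) / len(estimates)
    return min(estimates) - pad, center, max(estimates) + pad


if __name__ == "__main__":
    low, mid, high = charney_style_range([4.0, 2.0], 0.5)  # Hansen 4C, Manabe 2C
    print(f"{low} to {high} C, centered on {mid} C")
```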

Once the minions of the Great and Powerful Carbon spread their faith to the economic community, the finest economists the world has to offer discussed the discount rate that could be justified for saving the world from the greatest peril currently rattling around in the minds of the minions. If "sensitivity" is greater than 3 C, it will cost trillions of US dollars to save the world, and over $30 per ton of Carbon must be charged. If "sensitivity" is approximately 1.6 C, the world can eke by on a measly trillion or so with Carbon taxed at about $3 per ton. If "sensitivity" is less than 1 C, the whole Great and Powerful Carbon Crisis should never have existed.

What are the Odds?

The Climate Change policy advocates never discuss odds. They use the less descriptive "likely", "very likely" and "most likely", which provide ballparks, and "fat tails", things that "could" happen. I "could" win the lottery. Earth "could" have an impact event in the next three months. A nuclear reactor core "could" burn through the Earth to China. Major alarmist Climate Scientists "could" be right.

Consider the upper range of climate change impact, 4.5 C per doubling of CO2. Starting from an interglacial, that is, a period when most of the glacial ice sheets have melted and sea level is high like now, there is no point in the past 1 million years where the Earth's temperature reached 4.5 C higher than it is today. The last time temperatures were 4.5 C higher was ~30 million years ago, and the last time there was a major impact event was ~2.7 million years ago. Alarmist Climate Scientists have used, and continue to use, 4.5 C as an upper boundary for a doubling while the current rate is on the order of 0.8 to 1.6 C per doubling. The odds of 4.5 C per doubling are likely on the order of an impact event: an asteroid or comet crashing into the Earth, disrupting the geomagnetic field and causing a magnetic field reversal, a major climate shift and new evolutionary paths.

"Sensitivity" is defined as the change in "surface" temperature per change in radiant "forcing". The estimated range of "sensitivity" is 1.5 to 4.5 C per ~3.7 Wm-2, with the "benchmark" response estimated at 3.3 Wm-2 per degree C. 3.7/3.3 yields 1.12 C per 3.7 Wm-2, so from the start even the minimum impact (1.5 C) is overestimated by ~0.38 C. The "benchmark" is based on observations from an arguably cooler period caused by greater than "normal" volcanic activity and/or other natural factors. A cooler object is easier to warm, so the "benchmark" value is likely inflated, meaning the already inflated minimum "sensitivity" is even more inflated. Now 3 C per doubling "likely" has the odds of an impact event or of me winning the lottery.
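The arithmetic in that paragraph is easy to check in a few lines; the numbers are the ones quoted above and nothing else is assumed.

```python
forcing_per_doubling = 3.7  # W m-2 per doubling of CO2, as quoted above
benchmark = 3.3             # W m-2 per degree C, the "benchmark" response
ipcc_minimum = 1.5          # C per doubling, low end of the 1.5 to 4.5 C range

no_feedback = forcing_per_doubling / benchmark  # C per 3.7 W m-2
overestimate = ipcc_minimum - no_feedback       # how far the minimum sits above it

print(f"benchmark response: {no_feedback:.2f} C per doubling")  # 1.12
print(f"minimum overestimated by: {overestimate:.2f} C")       # 0.38
```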

A simple estimate of a new "benchmark" can be made using the "average" estimated downwelling longwave radiation of 334 Wm-2 +/- 10 Wm-2. That produces a "benchmark" of 4.8 Wm-2/C +/- ~0.4, or about 0.76 C per 3.7 Wm-2 equivalent doubling. The curious would note that the "average" temperature of the oceans is 4 C, which has an approximate energy of 334.5 Wm-2 and also produces a "benchmark" of ~4.8 Wm-2/C. Someone even more curious could look at the "environmental" lapse rate formula and notice that, considering the specific heat of the atmosphere, it has a factor alpha of ~0.19, which inverted is 5.25 Wm-2/C, or should be if you work out all the units, though I have not done so.
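Here is a sketch of that "benchmark" estimate, assuming it is simply the slope of the Stefan-Boltzmann law, dF/dT = 4σT^3 = 4F/T, evaluated at the temperature of a blackbody (emissivity of 1 assumed) emitting the given flux. The function names are mine, and the lapse-rate alpha is only inverted here, not derived.

```python
SIGMA = 5.670374419e-8      # Stefan-Boltzmann constant, W m-2 K-4
FORCING_PER_DOUBLING = 3.7  # W m-2

def blackbody_temperature(flux):
    """Temperature (K) of a blackbody emitting `flux` W m-2."""
    return (flux / SIGMA) ** 0.25

def blackbody_flux(t_celsius):
    """Flux (W m-2) emitted by a blackbody at `t_celsius`."""
    return SIGMA * (t_celsius + 273.15) ** 4

def benchmark(flux):
    """Slope dF/dT = 4*sigma*T^3 = 4F/T, in W m-2 per degree C."""
    return 4.0 * flux / blackbody_temperature(flux)

dlr = 334.0                  # W m-2, average downwelling longwave
ocean = blackbody_flux(4.0)  # W m-2 for a 4 C ocean

print(f"DLR benchmark: {benchmark(dlr):.2f} W m-2/C, "
      f"{FORCING_PER_DOUBLING / benchmark(dlr):.2f} C per 3.7 W m-2")
print(f"4 C ocean: {ocean:.1f} W m-2, benchmark {benchmark(ocean):.2f} W m-2/C")
print(f"lapse-rate alpha inverted: {1 / 0.19:.2f}")
```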

You can even compare the ~0.8 C sensitivity to a 3.0 C sensitivity using reconstructed CO2 data to discover the potential impact of "guessing" that the 20th century was "normal" enough to use in determining the "benchmark".

The orange 0.8 C per 3.7 Wm-2 of atmospheric forcing versus the ridiculously "ideal" 3.0 C per 3.7 Wm-2, compared to a reconstruction of the Indo-Pacific Warm Pool. The 3.0 C sensitivity requires feedbacks to increase its efficiency to 375% of input (3.0/0.8 = 3.75). To an engineer, that is like saying you have not only met God, but slept with her and were on top.

Each of these "discoveries" reduces the odds that "sensitivity" to 3.7 Wm-2 of atmospheric forcing will be high. These "discoveries" also increase the possibility that alarmist Climate Scientists are whack jobs, cheese done slipped off their cracker, die-hard "China Syndrome" DVD owners.

Saturday, August 10, 2013

Sky Dragons versus the Brain Dead Zombies

I have written a couple of posts on the problem of the Sky Dragons using outdated and error-filled earlier "peer reviewed" Earth Energy Budgets to disprove any "Greenhouse Effect" at all. When I mention the same issues to the "Carbon Tax will save the world" geniuses, aka the Brain Dead Zombies, they make the same type of errors, only in reverse.

The Brain Dead Zombies demand detailed physical derivations or else they won't listen to any other view. Climate Science is not at that point; it is at the accounting and organization stage of its infancy. Older work like Lean et al. (pre-2000) and others that don't include the majority of the new "global" satellite information are antiquated. Models and paleo reconstructions that end prior to 2000 are useless or, worse, misleading. You can't produce any reasonable first-principles argument other than delta T = ?*ln(Cf/Ci) plus ?. That is pretty poor first principles to base saving the world on.
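That formula can be written as a tiny function if the first "?" is expressed as a per-doubling sensitivity S divided by ln 2. The trailing "?" (everything else, natural variability included) is left out because nobody can pin it down. This is a sketch under that assumption; the 280 and 400 ppm in the loop are illustrative inputs, not data from this post.

```python
import math

def delta_t(sensitivity_per_doubling, c_initial, c_final):
    """delta T = S * ln(Cf / Ci) / ln(2), with S in C per doubling of CO2.

    The unknown additive term from the text is deliberately omitted.
    """
    if c_initial <= 0 or c_final <= 0:
        raise ValueError("concentrations must be positive")
    return sensitivity_per_doubling * math.log(c_final / c_initial) / math.log(2)

# Illustrative only: the same CO2 change under three assumed sensitivities.
for s in (0.8, 1.6, 3.0):
    print(s, round(delta_t(s, 280.0, 400.0), 2))
```

Every number you get out depends on the S you plug in, which is exactly the problem.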

The largest problem is natural variability.

You can difference any of the "global" surface temperature data sets by hemisphere and show the range of short-term (~60 year) natural variability. Peak to peak is roughly 0.4 C and, despite arguments to the contrary, the net impact is not 0.1 C plus or minus a touch. Since most of the variability is in the land-dominated Northern Hemisphere, atmospheric and "Greenhouse" effects amplify the shorter-term natural variability impacts. I include the UAH NH and SH with regressions to show the range of "uncertainty" that should be considered. If you follow the NH regression, you might notice that it resembles the OHC slope that is currently used instead of "surface" temperature, since "surface" temperature is not playing nice with the global ambitions of the Brain Dead Zombies.
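The hemispheric differencing can be sketched in a few lines, assuming annual NH and SH anomaly lists of equal length and a simple centered running mean to knock down the noise before measuring peak to peak. The 11-year window is my choice, not a standard, and nothing here is tied to a particular data set's file format.

```python
def running_mean(values, window=11):
    """Centered running mean; the window shrinks near the ends of the series."""
    half = window // 2
    smoothed = []
    for i in range(len(values)):
        lo, hi = max(0, i - half), min(len(values), i + half + 1)
        segment = values[lo:hi]
        smoothed.append(sum(segment) / len(segment))
    return smoothed

def hemispheric_peak_to_peak(nh, sh, window=11):
    """Peak-to-peak range (C) of the smoothed NH minus SH anomaly difference."""
    if len(nh) != len(sh):
        raise ValueError("NH and SH series must be the same length")
    diff = [n - s for n, s in zip(nh, sh)]
    smooth = running_mean(diff, window)
    return max(smooth) - min(smooth)
```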

By picking a start date from the mid 1950s to early 1980s, the Brain Dead Zombies (BDZ) can produce the scary charts, then mumble some nonsense about first principles and Occam's razor to baffle the lay public with bullshit. Some of the more whacked-out BDZs will even use the BEST land-only surface temperature data, which is primarily NH because that is where the land is, mixed with idealized and unverifiable "physics" to "prove" we all should be wearing sackcloth and ashes for the next millennium.

In their latest effort to baffle, the BDZs promote the above graph from an unnamed source, i.e. they didn't provide proper attribution other than the pnas.org in the link. The graph ends in approximately 1998, cutting off the past 15 years of climate "pause", knowing that their Brain Dead, dihydrogen-monoxide-banning minions can't figure out what year it is anyway. Adding the "pause" years, the peak-to-peak range would be approximately 0.8 C, with likely another 15 years of "pause" (thirty years total) conveniently trimmed from their Carbon Tax sales pitch. The chart appears to be one created by Tsonis et al., where the instrumental record is the dark solid line, the instrumental record with estimated natural variability removed is the dashed line, and an exponential fit is the thin solid line. For some odd reason the BDZs think this is proof of some nonsense or other.

This chart, borrowed from Steve McIntyre's post on Guy Callendar's simple climate model, covers the same period up to the present, with the green line being the Callendar model, which has a "sensitivity" of approximately 1.6 C, the lowest range of IPCC "projections". Neither the Tsonis nor the Callendar model considers that the start, ~1900, might have been below "average" due to the persistence of little ice age conditions. Depending on what "average" is, the percentage of warming due to CO2-equivalent anthropogenic forcings would vary.

The Berkeley Earth Surface Temperature (BEST) project also has a fit of estimated volcanic and human forcing on climate compared to their land-only temperature product. Since the average start temperature is approximately 8.25 C and the end point in 2012 is approximately 9.8 C, you can use the Stefan-Boltzmann law to determine that the approximate change in "forcing" is 7.9 Wm-2 for a 1.55 C change, or a "sensitivity" of 0.196 C/Wm-2, but there is a lot more negative volcanic forcing prior to 1900 than after.
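That Stefan-Boltzmann step is easy to reproduce, assuming the land temperatures are treated as blackbody temperatures (emissivity of 1), which is the same simplification the paragraph makes.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m-2 K-4

def blackbody_flux(t_celsius):
    """Flux (W m-2) emitted by a blackbody at `t_celsius`."""
    return SIGMA * (t_celsius + 273.15) ** 4

start_c, end_c = 8.25, 9.8  # approximate BEST land-only start and 2012 values, C
d_flux = blackbody_flux(end_c) - blackbody_flux(start_c)  # change in "forcing"
sensitivity = (end_c - start_c) / d_flux                  # C per W m-2

print(f"{d_flux:.1f} W m-2 for {end_c - start_c:.2f} C, "
      f"sensitivity {sensitivity:.3f} C per W m-2")
```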

If you consider the peak temperature prior to the major volcanic forcing, only ~0.5 C of the rise might be due to "industrial" forcing, with the remaining ~1 C due to recovery. If you completely ignore the possibility that a significant portion of the warming is due to natural recovery, you get a high "sensitivity"; if you consider that 1900 started near the little ice age minimum, ~0.9 C cooler than "normal", you get a lower CO2-equivalent "sensitivity".